Zaid Khan

@codezakh.bsky.social

270 followers

530 following

9 posts

PhD student @ UNC NLP with @mohitbansal working on grounded reasoning + code generation | currently interning at Ai2 (PRIOR) | formerly NEC Laboratories America | BS + MS @ Northeastern

zaidkhan.me

Reposted by Zaid Khan

Mohit Bansal

@mohitbansal.bsky.social

· May 5

Reposted by Zaid Khan

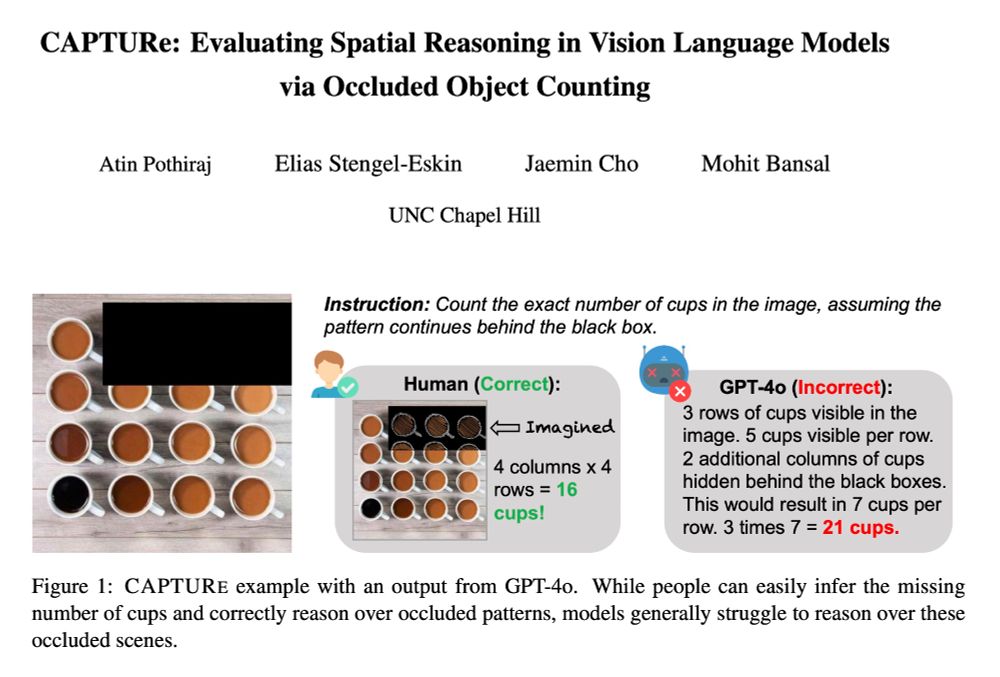

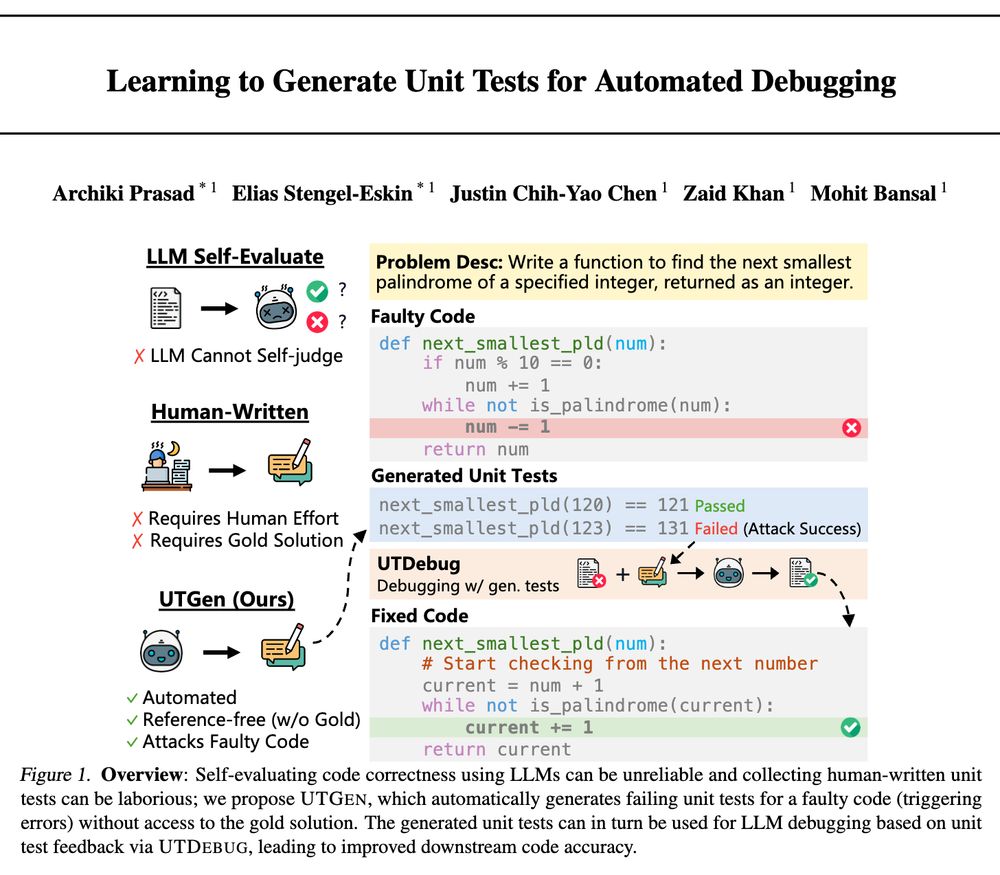

Elias Stengel-Eskin

@esteng.bsky.social

· Apr 29

Reposted by Zaid Khan

Zaid Khan

@codezakh.bsky.social

· Apr 15

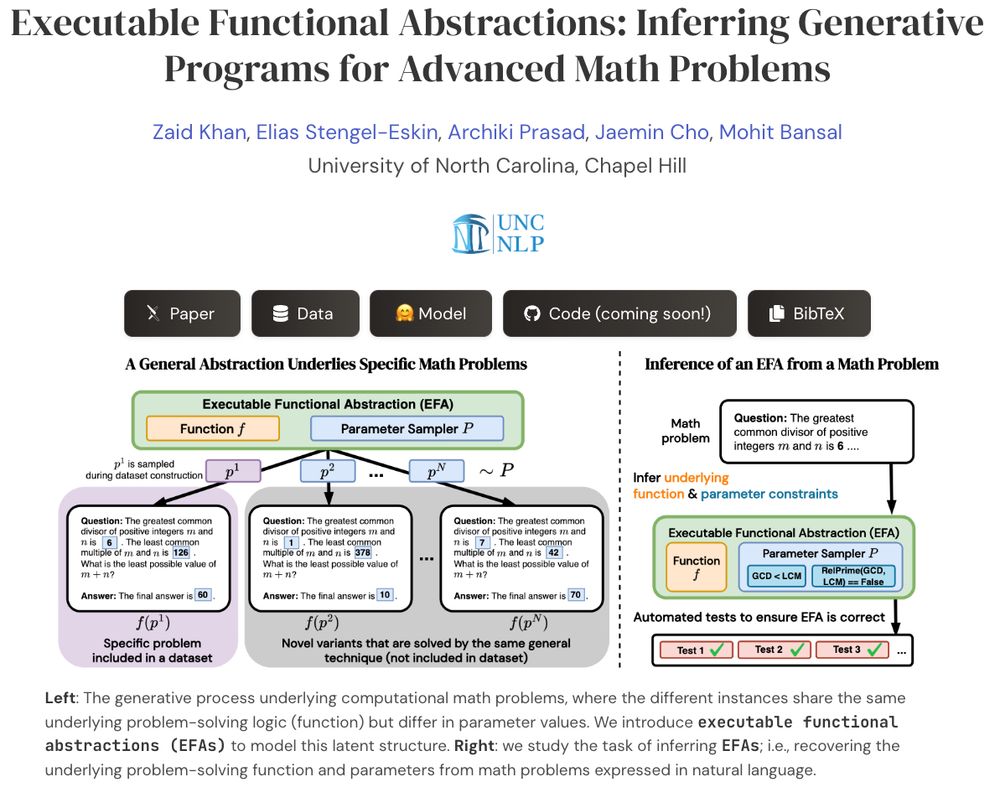

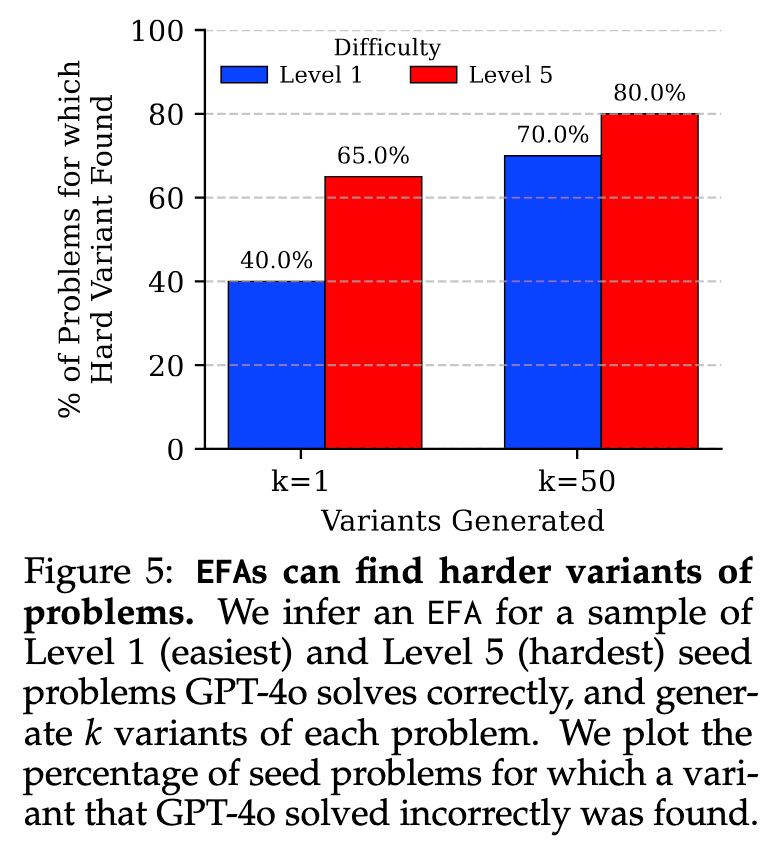

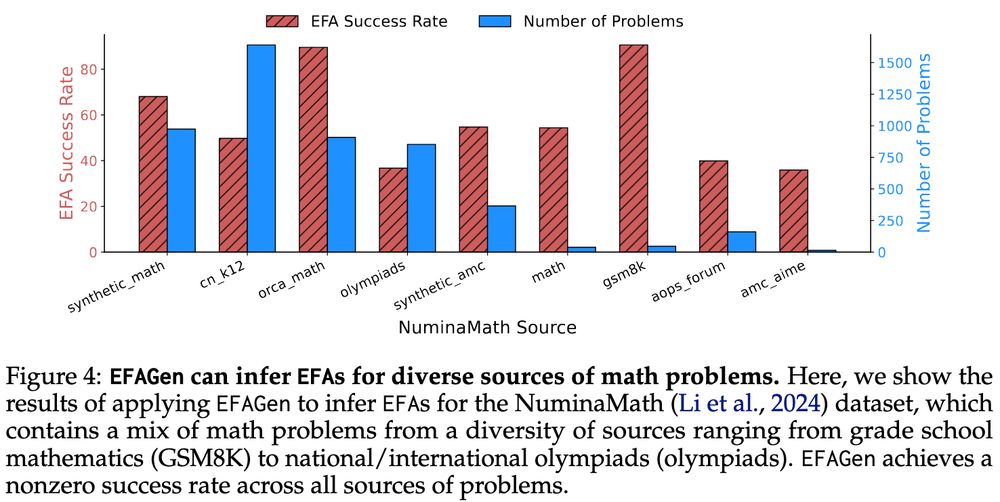

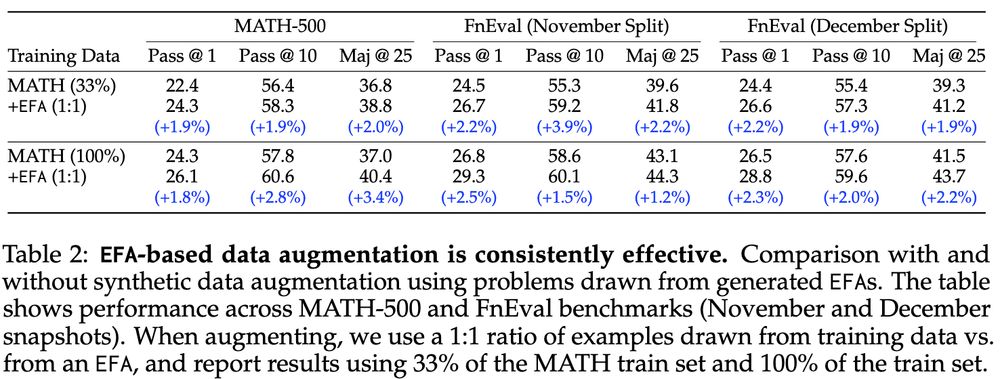

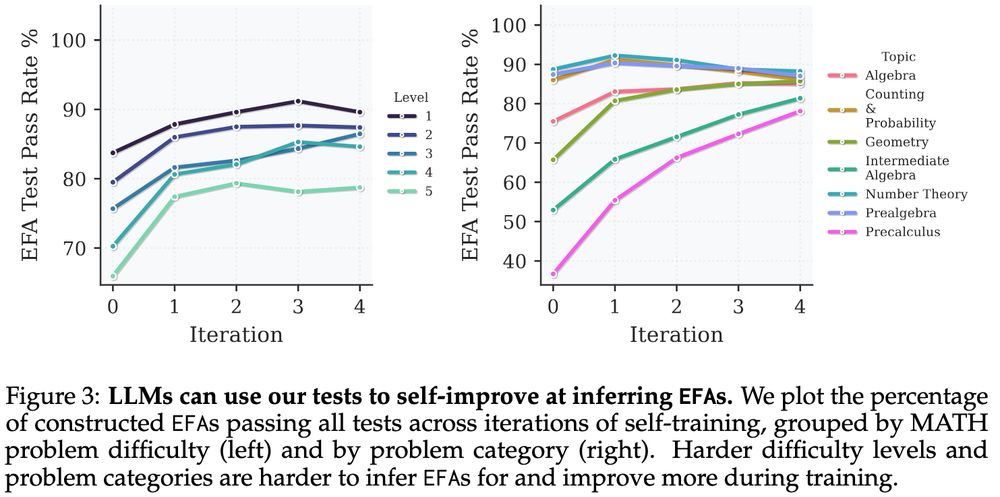

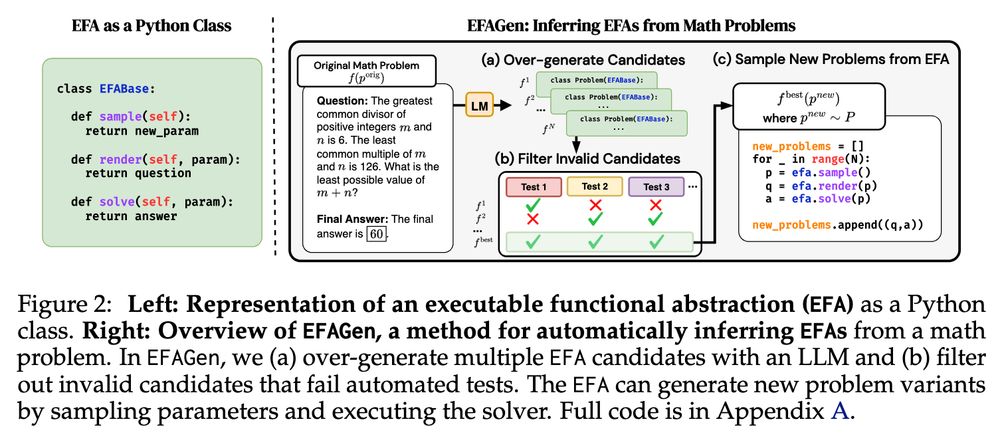

Executable Functional Abstractions: Inferring Generative Programs for Advanced Math Problems

Scientists often infer abstract procedures from specific instances of problems and use the abstractions to generate new, related instances. For example, programs encoding the formal rules and properti...

arxiv.org

Zaid Khan

@codezakh.bsky.social

· Apr 15

Reposted by Zaid Khan

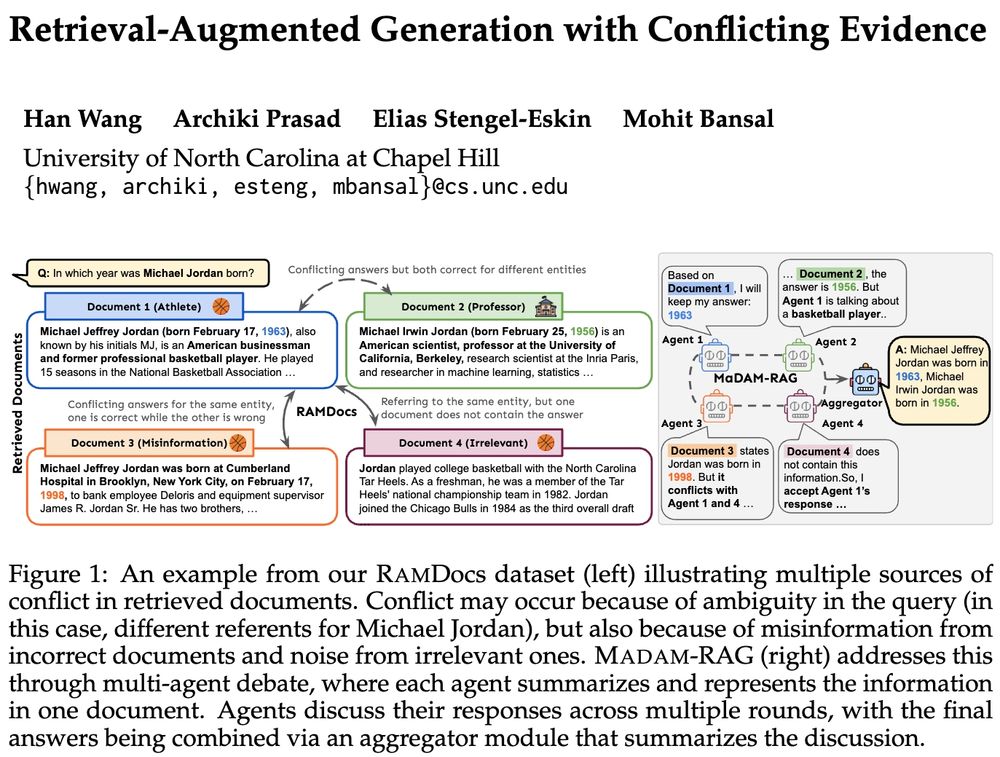

Mohit Bansal

@mohitbansal.bsky.social

· Jan 27

Reposted by Zaid Khan

Mohit Bansal

@mohitbansal.bsky.social

· Jan 27

Reposted by Zaid Khan

Mohit Bansal

@mohitbansal.bsky.social

· Jan 27