Samuel Lavoie

@lavoiems.bsky.social

230 followers

110 following

13 posts

PhD candidate @Mila_quebec, @UMontreal. Ex: FAIR @AIatMeta.

Learning representations, minimizing free energy, running.

Posts

Media

Videos

Starter Packs

Reposted by Samuel Lavoie

Guillaume Lajoie

@glajoie.bsky.social

· Aug 19

Eric Elmoznino

@ericelmoznino.bsky.social

· Aug 18

Defining and quantifying compositional structure

What is compositionality? For those of us working in AI or cognitive neuroscience this question can appear easy at first, but becomes increasingly perplexing the more we think about it. We aren’t shor...

ericelmoznino.github.io

Samuel Lavoie

@lavoiems.bsky.social

· Jul 22

Samuel Lavoie

@lavoiems.bsky.social

· Jul 22

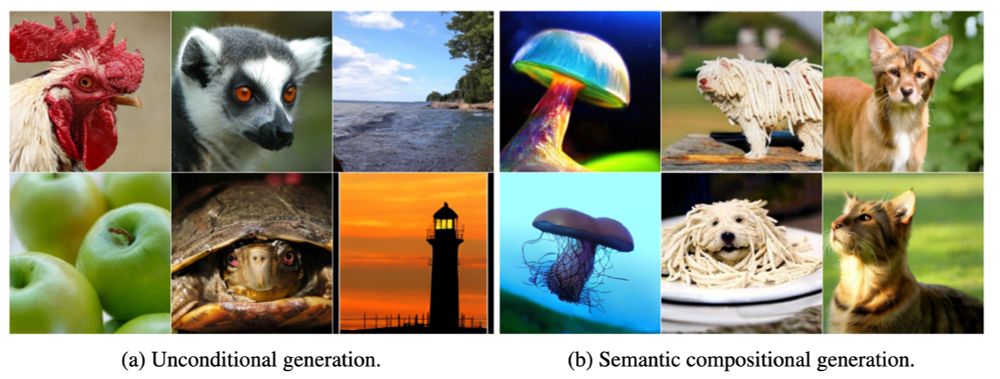

GitHub - lavoiems/DiscreteLatentCode: Official repository for the article Compositional Discrete Latent Code for High Fidelity, Productive Diffusion Models (https://arxiv.org/abs/2507.12318)

Official repository for the article Compositional Discrete Latent Code for High Fidelity, Productive Diffusion Models (https://arxiv.org/abs/2507.12318) - lavoiems/DiscreteLatentCode

github.com

Samuel Lavoie

@lavoiems.bsky.social

· Jul 17

Modeling Caption Diversity in Contrastive Vision-Language Pretraining

There are a thousand ways to caption an image. Contrastive Language Pretraining (CLIP) on the other hand, works by mapping an image and its caption to a single vector -- limiting how well CLIP-like mo...

arxiv.org

Samuel Lavoie

@lavoiems.bsky.social

· Dec 5