Max Bartolo

@maxbartolo.bsky.social

300 followers

27 following

15 posts

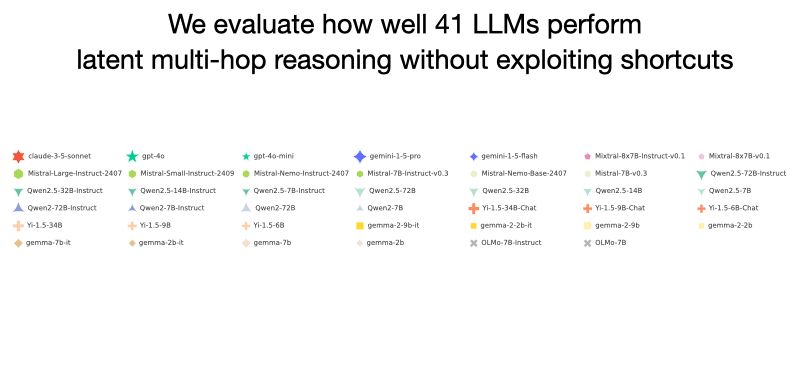

Building robust LLMs @Cohere

Reposted by Max Bartolo

Max Bartolo

@maxbartolo.bsky.social

· Mar 27

Max Bartolo

@maxbartolo.bsky.social

· Mar 27

Max Bartolo

@maxbartolo.bsky.social

· Mar 27

Max Bartolo

@maxbartolo.bsky.social

· Mar 10

Reposted by Max Bartolo

Tim Rocktäschel

@handle.invalid

· Dec 4

Max Bartolo

@maxbartolo.bsky.social

· Dec 1

Max Bartolo

@maxbartolo.bsky.social

· Nov 20

Max Bartolo

@maxbartolo.bsky.social

· Nov 20

Reposted by Max Bartolo

atla

@atla-ai.bsky.social

· Nov 19