Nathaniel Blalock

@nathanielblalock.bsky.social

130 followers

420 following

20 posts

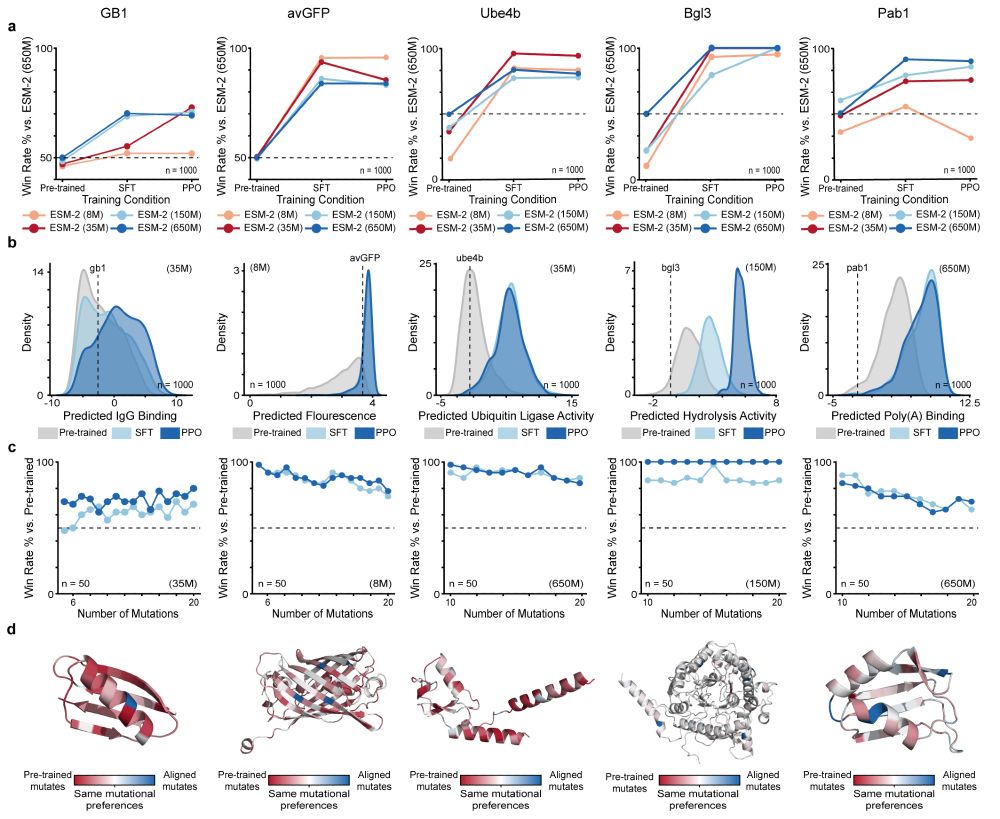

Graduate Research Assistant in Dr. Philip Romero's Lab at Duke/Wisconsin Reinforcement and Deep Learning for Protein Redesign | He/him

Posts

Media

Videos

Starter Packs

Pinned

Reposted by Nathaniel Blalock

Reposted by Nathaniel Blalock

Philip Romero

@philromero.bsky.social

· Mar 24

Scalable and cost-efficient custom gene library assembly from oligopools

Advances in metagenomics, deep learning, and generative protein design have enabled broad in silico exploration of sequence space, but experimental characterization is still constrained by the cost an...

www.biorxiv.org

Reposted by Nathaniel Blalock

Alex Wild

@alexwild.bsky.social

· Jan 28

Reposted by Nathaniel Blalock