Tejas Srinivasan

@tejassrinivasan.bsky.social

290 followers · 160 following · 22 posts

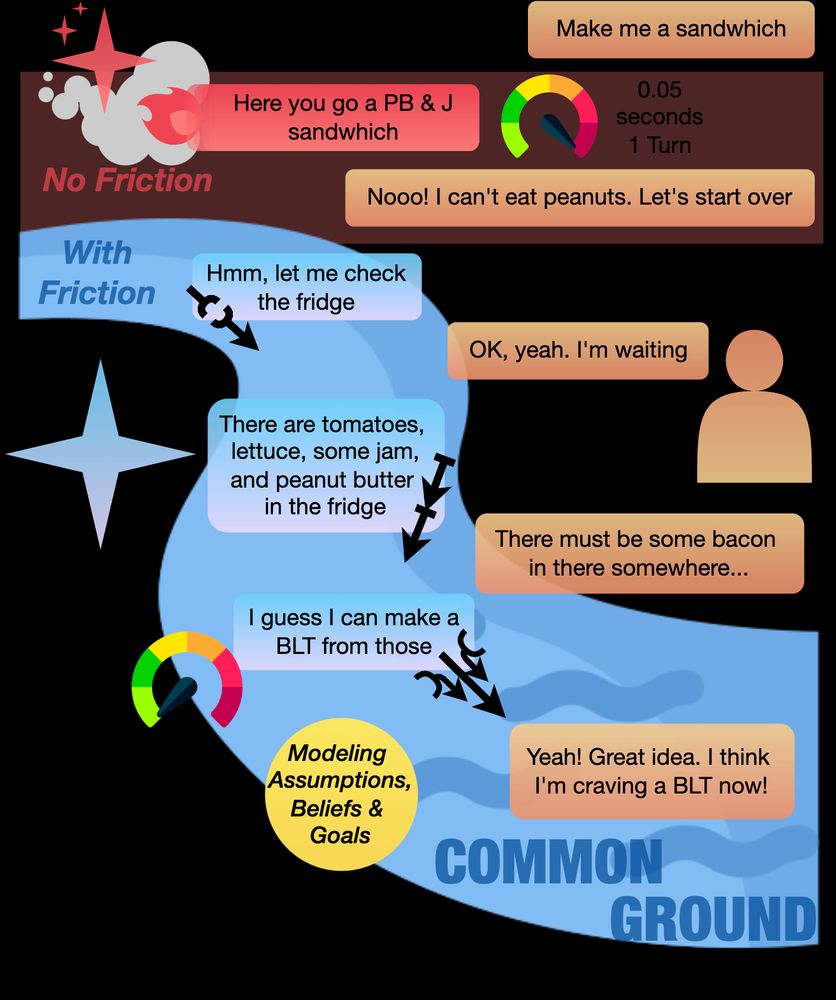

CS PhD student at USC. Former research intern at AI2 Mosaic. Interested in human-AI interaction and language grounding.

Reposted by Tejas Srinivasan

Max Kennerly

@maxkennerly.bsky.social

· Mar 6