Anirban Ray

@anirbanray.bsky.social

240 followers

350 following

50 posts

PhD Student working on bioimaging inverse problems with @florianjug.bsky.social at @humantechnopole.bsky.social + @tudresden.bsky.social | Prev: computer vision Hitachi R&D, Tokyo.

🔗: https://rayanirban.github.io/

Likes 🏸🏋️🏔️🏓 and ✈️

Pinned

Anirban Ray

@anirbanray.bsky.social

· Nov 18

Reposted by Anirban Ray

Generative Point Tracking with Flow Matching

My latest project with Adam W. Harley, @csprofkgd.bsky.social, Derek Nowrouzezahrai, @chrisjpal.bsky.social.

Project page: mtesfaldet.net/genpt_projpa...

Paper: arxiv.org/abs/2510.20951

Code: github.com/tesfaldet/ge...

Reposted by Anirban Ray

Anirban Ray

@anirbanray.bsky.social

· Sep 23

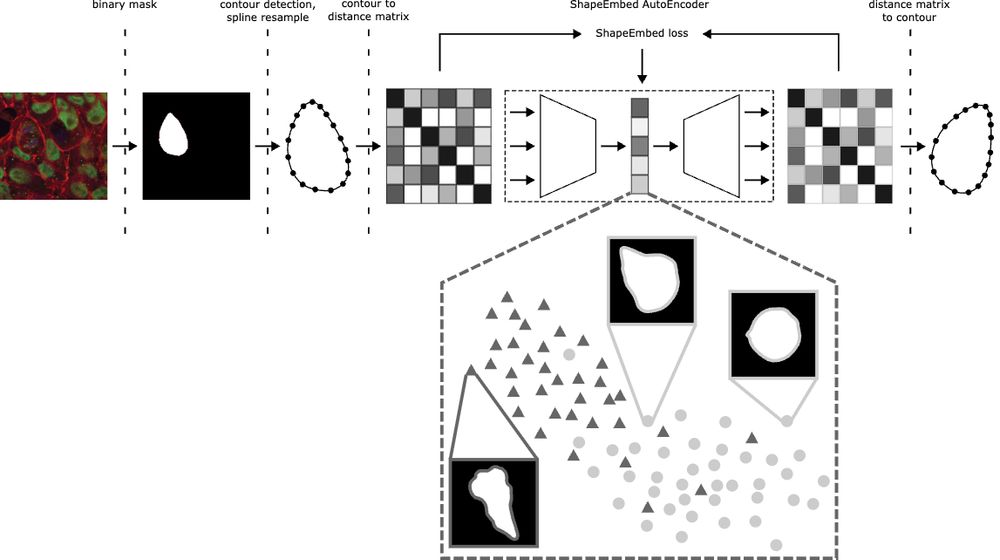

Happy to share that ShapeEmbed has been accepted at @neuripsconf.bsky.social 🎉 SE is a self-supervised framework that encodes 2D contours from microscopy & natural images into a latent representation invariant to translation, scaling, rotation, reflection & point indexing.

📄 arxiv.org/pdf/2507.01009 (1/N)

Reposted by Anirban Ray

Anirban Ray

@anirbanray.bsky.social

· Jul 17

Reposted by Anirban Ray

Anirban Ray

@anirbanray.bsky.social

· Jun 24

Reposted by Anirban Ray

Anirban Ray

@anirbanray.bsky.social

· Jun 16

Anirban Ray

@anirbanray.bsky.social

· Jun 6

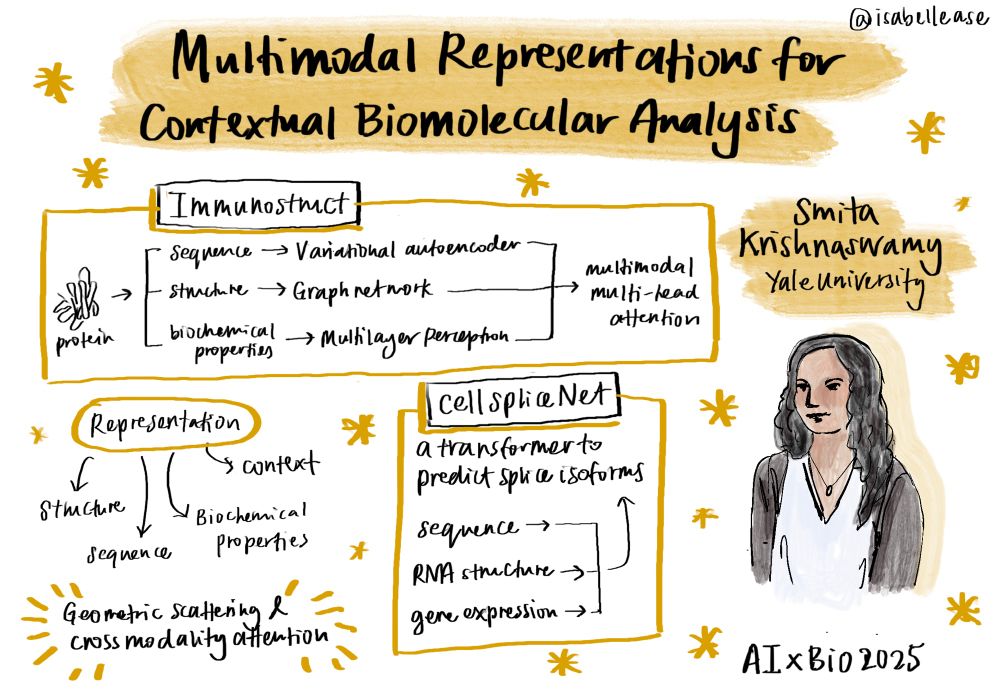

George Stoica, Vivek Ramanujan, Xiang Fan, Ali Farhadi, Ranjay Krishna, Judy Hoffman

Contrastive Flow Matching

https://arxiv.org/abs/2506.05350

Reposted by Anirban Ray

Anirban Ray

@anirbanray.bsky.social

· Jun 3

Anirban Ray

@anirbanray.bsky.social

· May 27

On the Relation between Rectified Flows and Optimal Transport

This paper investigates the connections between rectified flows, flow matching, and optimal transport. Flow matching is a recent approach to learning generative models by estimating velocity fields th...

arxiv.org

Reposted by Anirban Ray

Oliver Harschnitz

@harschnitz.bsky.social

· May 19

Monday is the big day! Very much looking forward to welcoming all participants and speakers of the Neurogenomics Conference to @humantechnopole.bsky.social in Milan. It promises to be an exciting few days filled with amazing science.

Anirban Ray

@anirbanray.bsky.social

· May 19

A Fourier Space Perspective on Diffusion Models

Diffusion models are state-of-the-art generative models on data modalities such as images, audio, proteins and materials. These modalities share the property of exponentially decaying variance and mag...

arxiv.org

Reposted by Anirban Ray

Anirban Ray

@anirbanray.bsky.social

· May 9