"AI use in American newspapers is widespread, uneven, and rarely disclosed"

arxiv.org/abs/2510.18774

We examine 186k articles published this summer and find that ~9% are either fully or partially AI-generated, usually without readers having any idea.

Here's what we learned about how AI is influencing local and national journalism:

Frankentexts are weirdly creative, and they also pose problems for AI detectors: are they AI? human? More 👇

🧟 You get what we call a Frankentext!

💡 Frankentexts are surprisingly coherent and tough for AI detectors to flag.

✅ Humans achieve 85% accuracy

❌ OpenAI Operator: 24%

❌ Anthropic Computer Use: 14%

❌ Convergence AI Proxy: 13%

We create ONERULER 💍, a multilingual long-context benchmark that allows for nonexistent needles. Turns out NIAH isn't so easy after all!

Our analysis across 26 languages 🧵👇

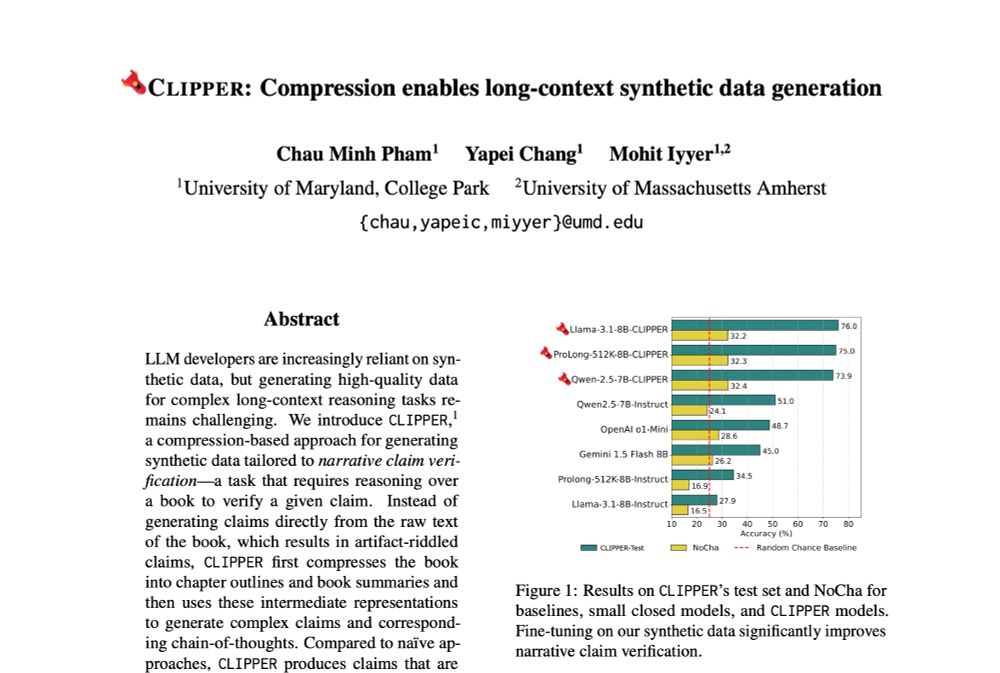

We present CLIPPER ✂️, a compression-based pipeline that produces grounded instructions for ~$0.5 each, 34x cheaper than human annotations.

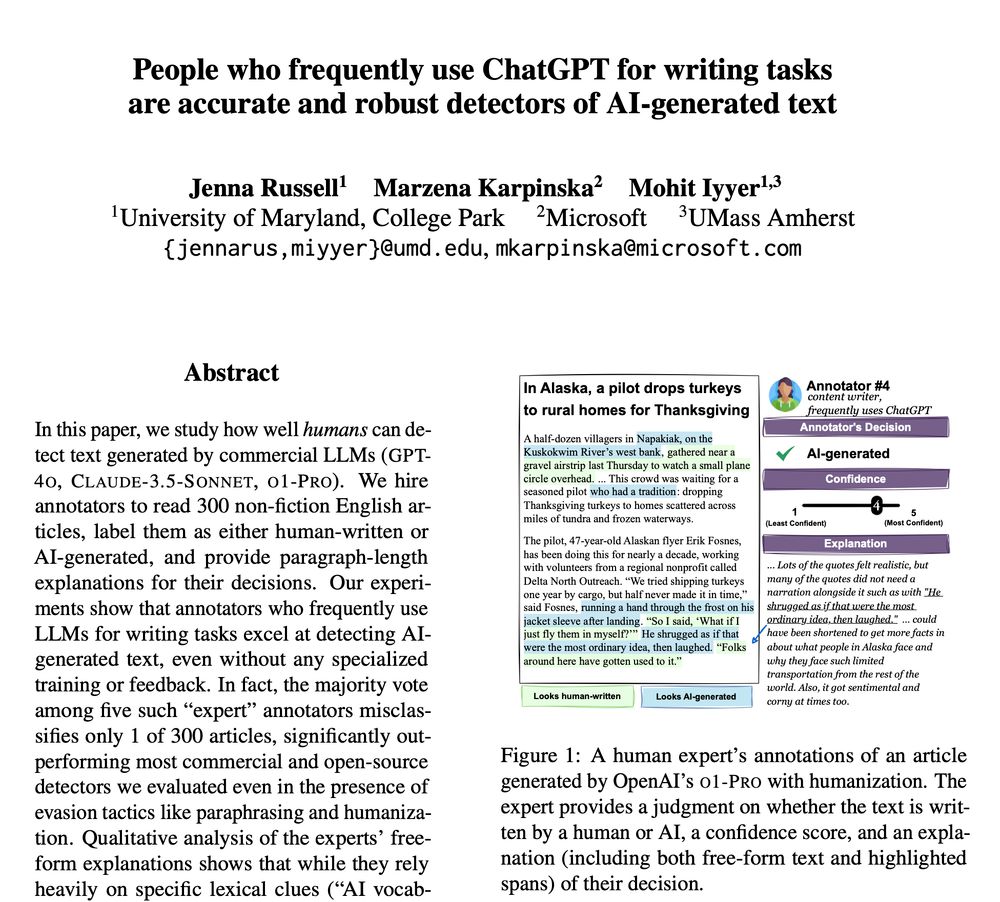

Turns out that while the general population is unreliable, people who frequently use ChatGPT for writing tasks can spot even "humanized" AI-generated text with near-perfect accuracy 🎯