Anirbit

@anirbit.bsky.social

150 followers

69 following

68 posts

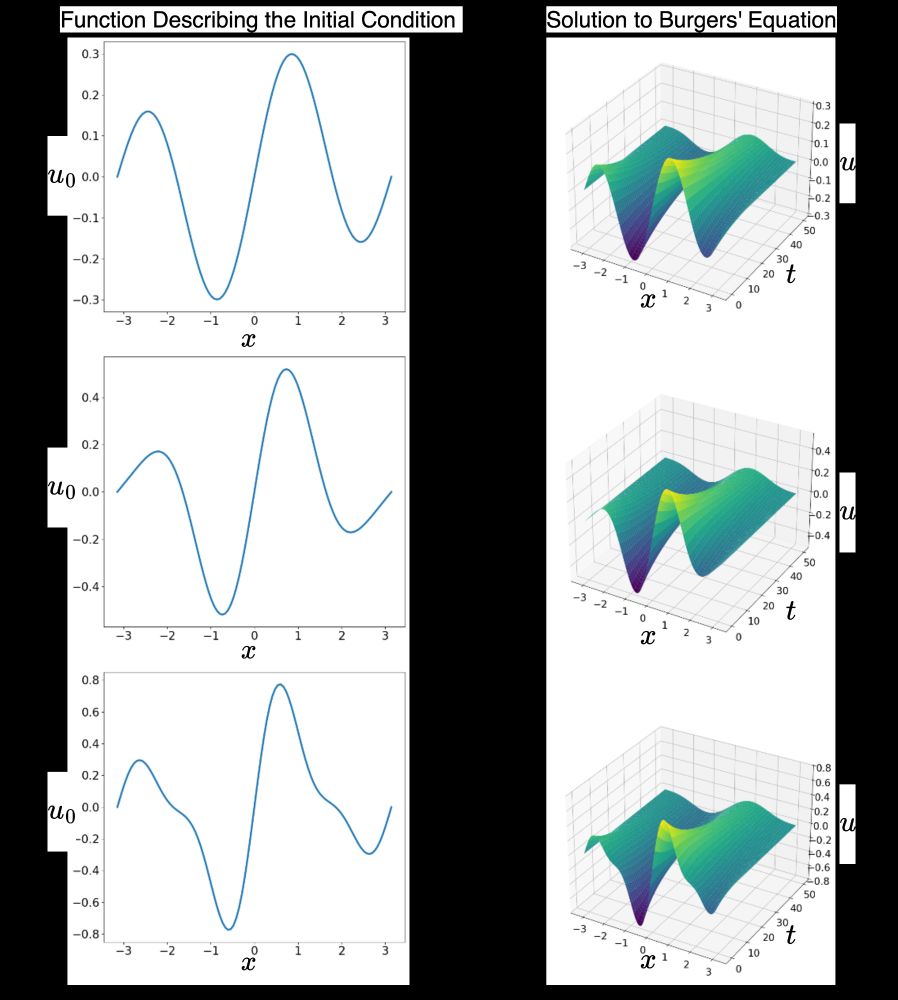

Assistant Professor/Lecturer in ML @ The University of Manchester | https://anirbit-ai.github.io/ | working on the theory of neural nets and how they solve differential equations. #AI4SCIENCE

Anirbit

@anirbit.bsky.social

· Aug 22

Langevin Monte-Carlo Provably Learns Depth Two Neural Nets at Any Size and Data

In this work, we will establish that the Langevin Monte-Carlo algorithm can learn depth-2 neural nets of any size and for any data and we give non-asymptotic convergence rates for it. We achieve this ...

arxiv.org
