Bao Pham

@baopham.bsky.social

26 followers · 17 following · 4 posts

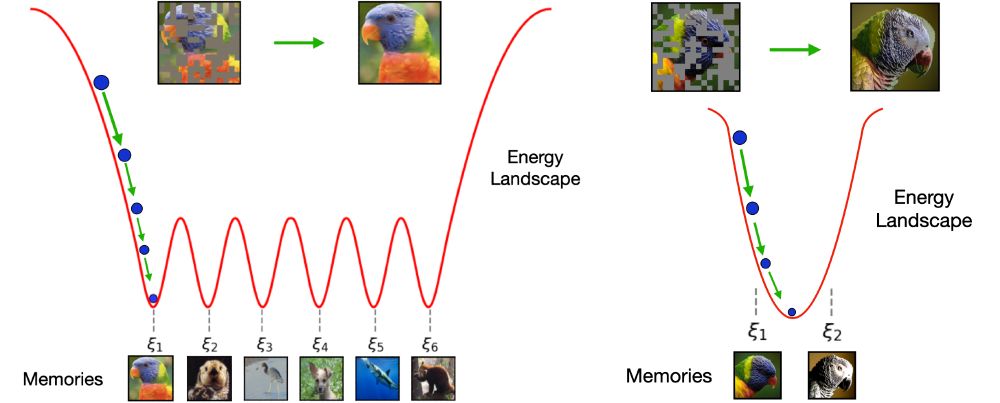

PhD student at RPI. Interested in Hopfield (associative memory) models and energy-based models.
