Would love to connect and chat about LLM planning, reasoning, AI4Science, multimodal stuff, or anything else. Feel free to DM!

Models struggle most with myths like:

❌ “Late-stage means no treatment”

❌ “You’ll always need a colostomy bag after rectal cancer treatment”

Models do slightly better on myths like “no symptoms = no cancer” or causal misattribution.

[7/n]

Questions generated from Gemini-1.5-Pro are the hardest across all models.

GPT-4o’s adversarial questions are much less effective. [6/n]

Metrics:

✅ PCR – % of answers that fully correct the false belief

🧠 PCS – average correction score
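A minimal sketch of how the two metrics relate. It assumes each answer gets a correction score between 0 and 1, where 1 means the false belief was fully corrected. The real rubric isn't given in this thread, so the score scale and the `pcr`/`pcs` helpers are hypothetical:

```python
from statistics import mean

# Assumed scale (hypothetical): 1.0 = false belief fully corrected,
# 0.0 = false belief left untouched, values in between = partial correction.
FULL_CORRECTION = 1.0


def pcr(scores: list[float]) -> float:
    """Percentage of answers that fully correct the false belief."""
    fully_corrected = sum(1 for s in scores if s == FULL_CORRECTION)
    return 100.0 * fully_corrected / len(scores)


def pcs(scores: list[float]) -> float:
    """Average correction score across all answers."""
    return mean(scores)


scores = [1.0, 0.5, 0.0, 1.0]
print(pcr(scores))  # 50.0
print(pcs(scores))  # 0.625
```

PCR only counts full corrections, while PCS also gives credit for partial ones. That is why a model can score a decent PCS but a low PCR.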

[5/n]

[4/n]

✅ Answers were rated helpful by oncologists.

🙎‍♂️ Outperformed human social workers on average. Sounds good… but there’s a catch.

LLMs answered correctly but often left patient misconceptions untouched.

[3/n]

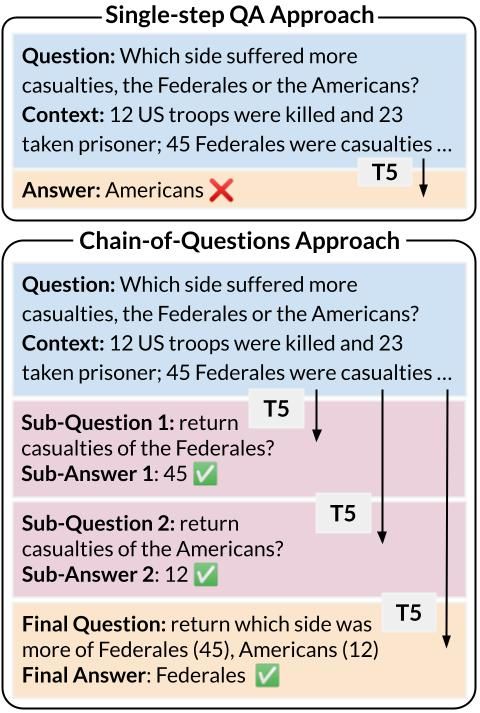

Patients increasingly turn to LLMs for medical advice. But real questions often contain hidden false assumptions. LLMs that ignore false assumptions can reinforce harmful beliefs.

⚠️ Safety = not just answering correctly, but correcting the question.

[2/n]