ControlAI

@controlai.com

340 followers

13 following

740 posts

We work to keep humanity in control.

Subscribe to our free newsletter: https://controlai.news

Join our discord at: https://discord.com/invite/ptPScqtdc5

ControlAI

@controlai.com

· 6d

Artificial Intelligence Apocalypse Scenarios

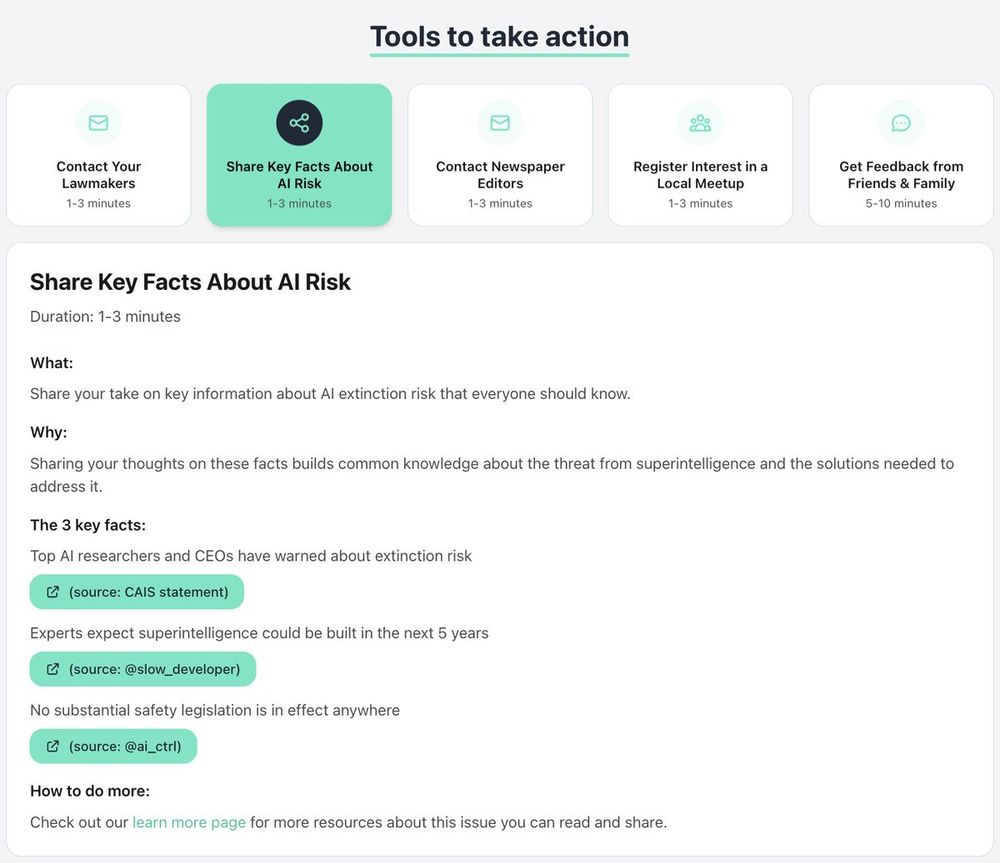

Take action to prevent the AI apocalypse at: https://campaign.controlai.com/

Ok so “misaligned AI” is dangerous. But if it does decide to kill us, what SPECIFICALLY could it do?

**************

To learn more about this topic, start your googling with these keywords:

- Superintelligence:

www.youtube.com