Leonardo Cotta

@cottascience.bsky.social

1K followers

250 following

55 posts

scaling lawver @ EIT from BH🔺🇧🇷

http://cottascience.github.io

Leonardo Cotta

@cottascience.bsky.social

· Jul 26

Scaling Laws Are Unreliable for Downstream Tasks: A Reality Check

Downstream scaling laws aim to predict task performance at larger scales from pretraining losses at smaller scales. Whether this prediction should be possible is unclear: some works demonstrate that t...

arxiv.org

Reposted by Leonardo Cotta

Quaid Morris

@quaidmorris.bsky.social

· Jun 3

Damage and Misrepair Signatures: Compact Representations of Pan-cancer Mutational Processes

Mutational signatures of single-base substitutions (SBSs) characterize somatic mutation processes which contribute to cancer development and progression. However, current mutational signatures do not ...

www.biorxiv.org

Leonardo Cotta

@cottascience.bsky.social

· Mar 24

Position: Graph Learning Will Lose Relevance Due To Poor Benchmarks

While machine learning on graphs has demonstrated promise in drug design and molecular property prediction, significant benchmarking challenges hinder its further progress and relevance. Current bench...

arxiv.org

Reposted by Leonardo Cotta

Leonardo Cotta

@cottascience.bsky.social

· Feb 20

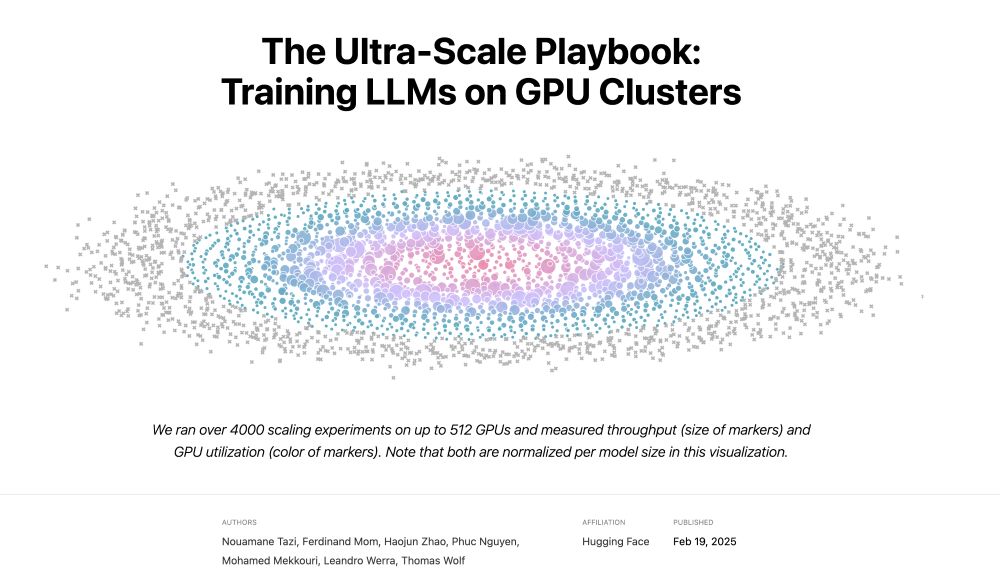

Leonardo Cotta

@cottascience.bsky.social

· Feb 20

Reposted by Leonardo Cotta

Leonardo Cotta

@cottascience.bsky.social

· Feb 19