David Selby

@davidselby.bsky.social

68 followers · 190 following · 13 posts

Data science researcher working on applications of machine learning in health at DFKI, getting the most out of small data. Reproducible #Rstats evangelist and unofficial British cultural ambassador to Rhineland-Palatinate 🇩🇪

https://selbydavid.com


· Sep 5

How many patients could we save with LLM priors?

Imagine a world where clinical trials need far fewer patients to achieve the same statistical power, thanks to the knowledge encoded in large language models (LLMs). We present a novel framework for h...

arxiv.org

· Aug 19

Automated Visualization Makeovers with LLMs

Making a good graphic that accurately and efficiently conveys the desired message to the audience is both an art and a science, typically not taught in the data science curriculum. Visualisation makeo...

arxiv.org

· Aug 5

BioDisco: Multi-agent hypothesis generation with dual-mode evidence, iterative feedback and temporal evaluation

Identifying novel hypotheses is essential to scientific research, yet this process risks being overwhelmed by the sheer volume and complexity of available information. Existing automated methods often...

arxiv.org

· Jul 21

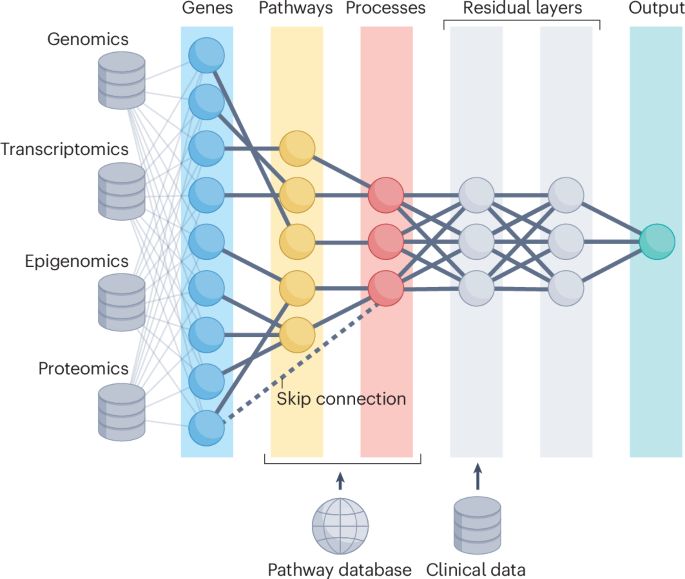

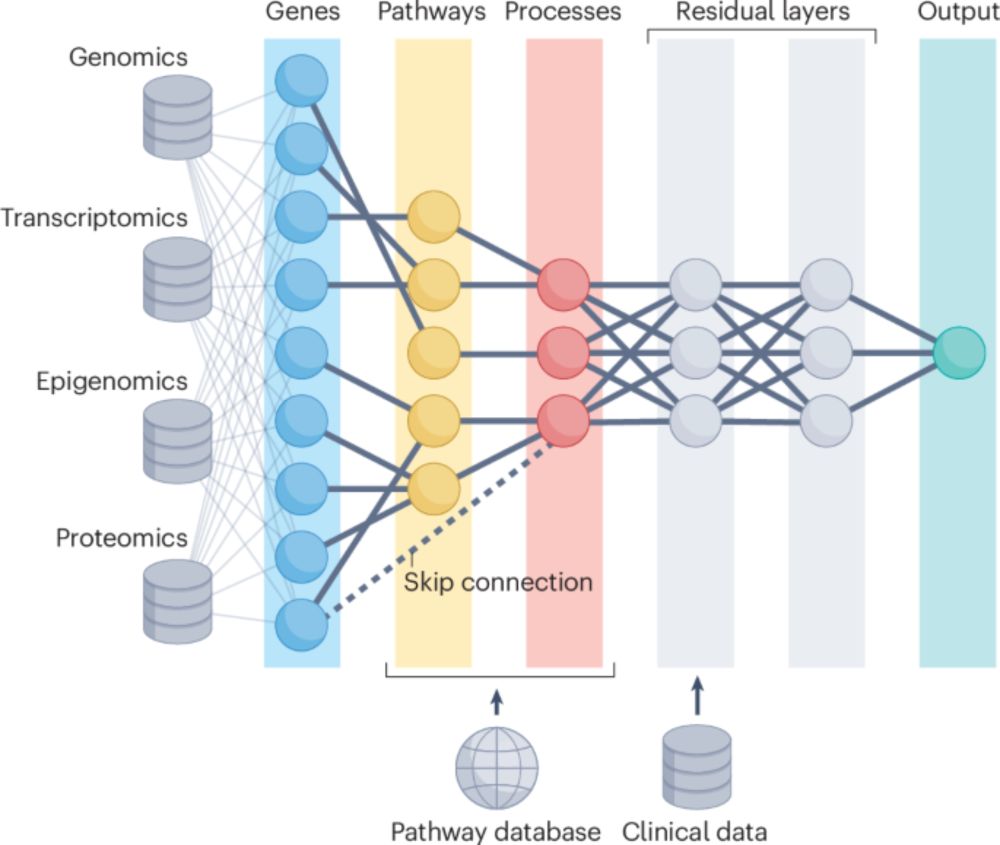

Frontiers | Visible neural networks for multi-omics integration: a critical review

Background: Biomarker discovery and drug response prediction are central to personalized medicine, driving demand for predictive models that also offer biologi...

www.frontiersin.org

Reposted by David Selby

David Selby

@davidselby.bsky.social

· Mar 17

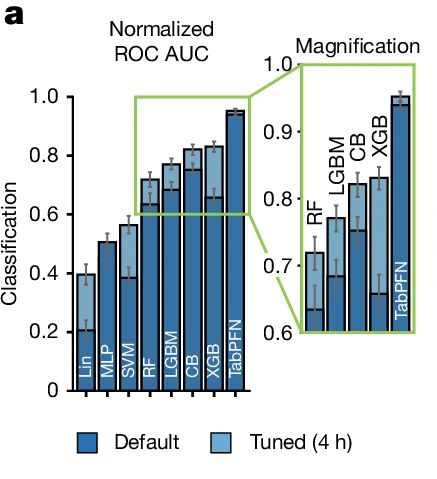

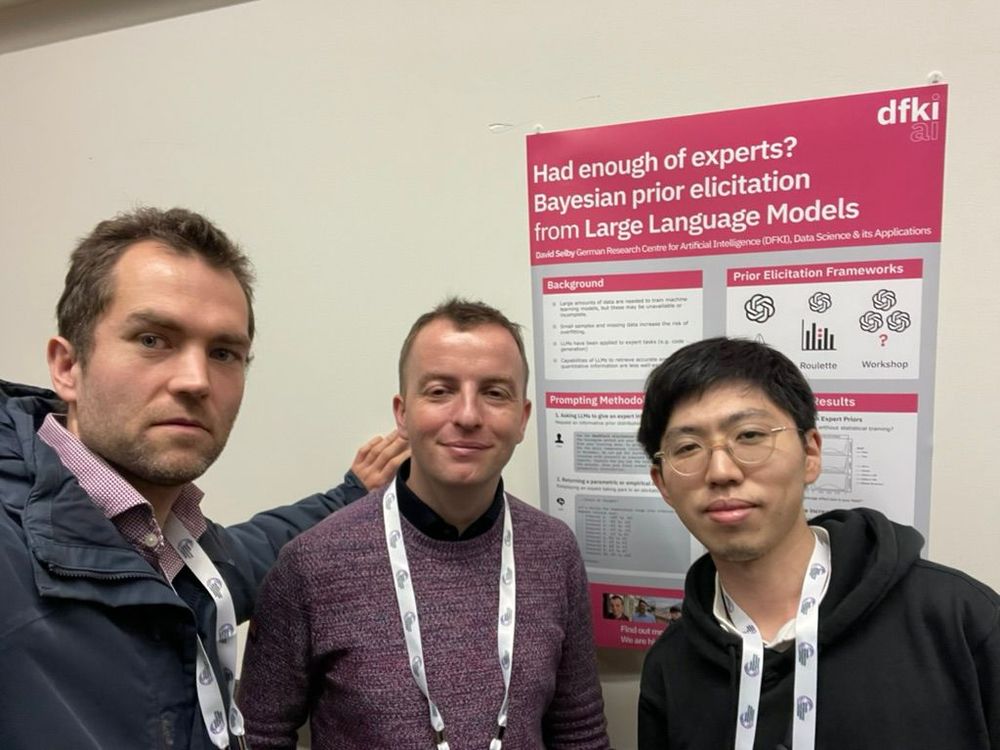

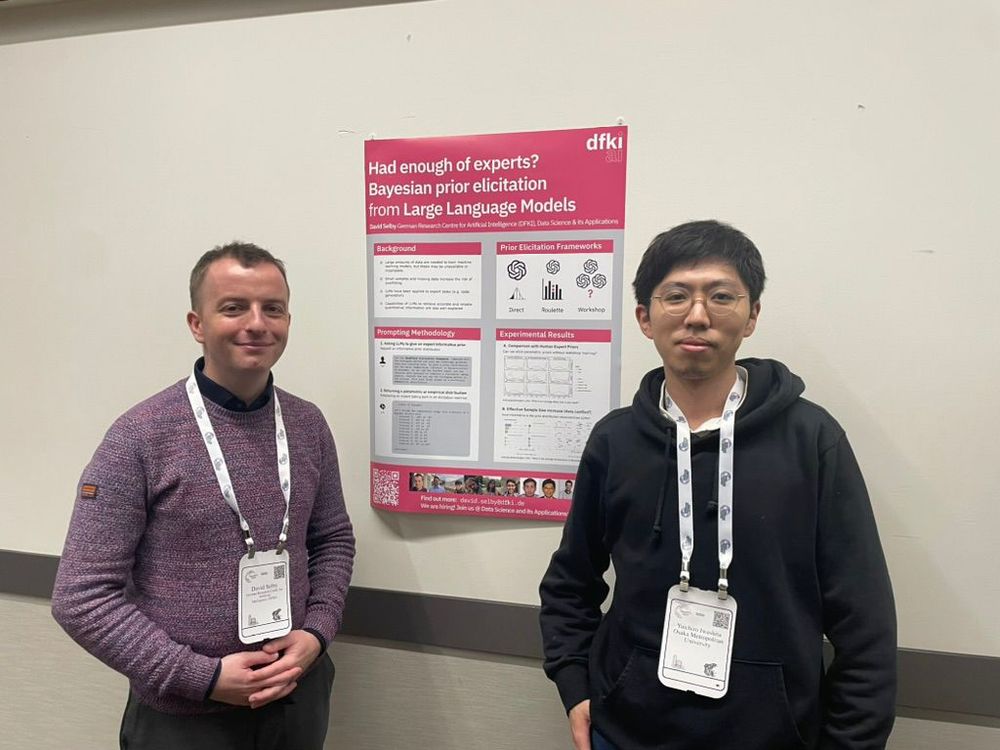

Had Enough of Experts? Quantitative Knowledge Retrieval From Large Language Models

Large language models (LLMs) have been extensively studied for their ability to generate convincing natural language sequences; however, their utility for quantitative information retrieval is less w...

doi.org

· Mar 4

Beyond the black box with biologically informed neural networks - Nature Reviews Genetics

Biologically informed neural networks promise to lead to more explainable, data-driven discoveries in genomics, drug development and precision medicine. Selby et al. highlight emerging opportunities, ...

www.nature.com

· Feb 20

Had enough of experts? Quantitative knowledge retrieval from large language models

Large language models (LLMs) have been extensively studied for their abilities to generate convincing natural language sequences; however, their utility for quantitative information retrieval is less w...

arxiv.org

· Dec 20

Visible neural networks for multi-omics integration: a critical review

Biomarker discovery and drug response prediction are central to personalized medicine, driving demand for predictive models that also offer biological insights. Biologically informed neural networks (B...

www.biorxiv.org