Prev @TU Munich

Stochastic&nonlin. dynamics @TU Berlin&@MPIDS

Learning dynamics, plasticity&geometry of representations

https://dimitra-maoutsa.github.io

https://dimitra-maoutsa.github.io/M-Dims-Blog

It's often said that hippocampal replay, which helps build up a model of the world, is biased by reward. But canonical temporal-difference (TD) learning requires updates proportional to the reward-prediction error (RPE), not to reward magnitude.

1/4

rdcu.be/eRxNz
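A minimal sketch of the TD point above, assuming tabular TD(0) with a learning rate `alpha` and discount `gamma` (illustrative choices, not taken from the linked paper; `td0_update` is a hypothetical helper). The value update is driven by the RPE δ = r + γV(s') − V(s), so a large but fully predicted reward produces no update at all.

```python
def td0_update(V, s, r, s_next=None, alpha=0.1, gamma=0.9):
    """Tabular TD(0) update of V[s]; returns the reward-prediction error.

    V: list of state values. s_next=None marks a terminal transition.
    """
    bootstrap = 0.0 if s_next is None else gamma * V[s_next]
    rpe = r + bootstrap - V[s]   # delta = r + gamma * V(s') - V(s)
    V[s] += alpha * rpe          # step size scales with the RPE, not with r
    return rpe


# A large reward that is already fully predicted: RPE is zero, no update.
V_predicted = [10.0, 0.0]
print(td0_update(V_predicted, s=0, r=10.0))   # 0.0
print(V_predicted)                            # [10.0, 0.0]

# The same reward, unpredicted: RPE equals the reward and V moves toward it.
V_naive = [0.0, 0.0]
print(td0_update(V_naive, s=0, r=10.0))       # 10.0
print(V_naive)                                # [1.0, 0.0]
```

Under this rule, a replay scheme that prioritizes by reward magnitude alone would spend updates on transitions whose outcome is already predicted; prioritizing by |RPE| would not.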

onlinelibrary.wiley.com/doi/10.1111/...

garymarcus.substack.com/p/a-trillion...

The hippocampus (HPC) is known for storing maps of the environment, but less so for generating planned trajectories.

This paper proposes that recurrence in CA3 is crucial for planning.

A🧵with my toy model and notes:

#neuroskyence #compneuro #NeuroAI

We applied our framework to a simplified model of interacting brain areas: a multi-area recurrent neural network (RNN) trained on a working memory task. After learning the task, its "sensory" area gained control over its "cognitive" area.
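A minimal sketch of what a two-area RNN of this kind can look like, assuming rate units with a tanh nonlinearity, Euler integration, external input only to the "sensory" area, and sparse between-area projections. The function names, sizes, and connection probabilities are hypothetical and not taken from the paper; the sketch shows only the architecture and an untrained run, not the working-memory training or the control analysis.

```python
import numpy as np


def multi_area_weights(n_sens=50, n_cog=50, p_ff=0.3, p_fb=0.05, g=1.2, seed=0):
    """Random recurrent weights with a two-area block structure.

    Rows index the target unit, columns the source unit. Within-area blocks
    are dense; between-area blocks are sparsified, with denser feedforward
    (sensory -> cognitive) than feedback (cognitive -> sensory) projections.
    """
    rng = np.random.default_rng(seed)
    n = n_sens + n_cog
    W = rng.normal(0.0, g / np.sqrt(n), size=(n, n))
    sens, cog = slice(0, n_sens), slice(n_sens, n)
    W[cog, sens] *= rng.random((n_cog, n_sens)) < p_ff   # feedforward mask
    W[sens, cog] *= rng.random((n_sens, n_cog)) < p_fb   # feedback mask
    return W


def simulate(W, inputs, n_sens, dt=0.1, tau=1.0):
    """Euler-integrate tau dx/dt = -x + W tanh(x) + u.

    inputs has shape (T, n_sens) and drives only the sensory units.
    Returns the state trajectory with shape (T, n_units).
    """
    n = W.shape[0]
    x = np.zeros(n)
    traj = []
    for u_t in inputs:
        u = np.zeros(n)
        u[:n_sens] = u_t
        x = x + (dt / tau) * (-x + W @ np.tanh(x) + u)
        traj.append(x.copy())
    return np.array(traj)


# Usage: a brief stimulus pulse followed by a delay with no input.
n_sens, n_cog = 50, 50
W = multi_area_weights(n_sens, n_cog)
inputs = np.zeros((200, n_sens))
inputs[:20] = 1.0
traj = simulate(W, inputs, n_sens)
print(traj.shape)  # (200, 100)
```

Keeping the areas as index blocks of a single weight matrix makes the between-area projections (the off-diagonal blocks) easy to inspect or ablate separately.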

Scroll down the thread to learn how it works. For now, does it work?

And asking me to rate my English language skills, when the TOEFL exists?

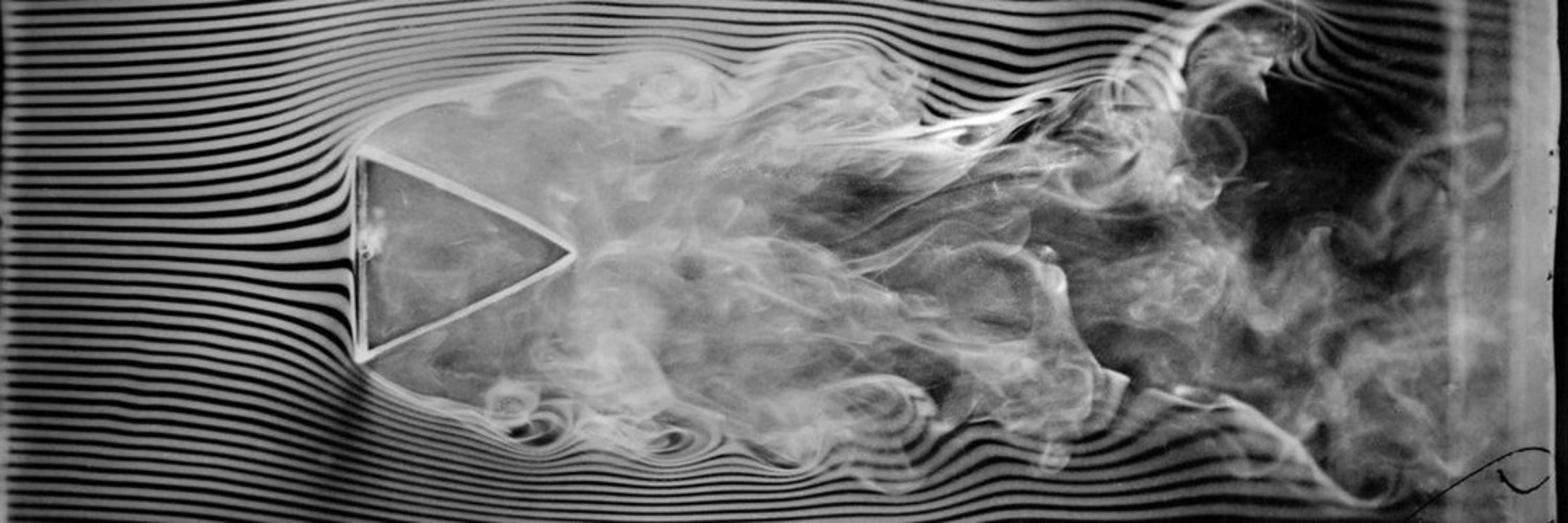

http://link.aps.org/doi/10.1103/7kk9-3jm8

go.nature.com/4ocRj3n

Congratulations to Matt Jones & Nathan Lepora for seeing this through to the end!

www.nature.com/articles/s41...

🔗 doi.org/10.1101/2025...

Led by @atenagm.bsky.social @mshalvagal.bsky.social

Cognitive maps are flexible, dynamic, (re)constructed representations

#psychscisky #neuroskyence #cognition #philsky 🧪

📄 Paper: openreview.net/forum?id=I82...

💾 Code: github.com/adamjeisen/J...

📍 Poster: Thu 4 Dec 11am - 2pm PST (#2111)

✨In our NeurIPS 2025 Spotlight paper, we introduce a data-driven framework to answer this question using deep learning, nonlinear control, and differential geometry.🧵⬇️