Élie Michel

@elie-michel.bsky.social

420 followers

230 following

110 posts

Research Scientist at #Adobe. PhD in Computer Graphics. Author of #LearnWebGPU C++. Creative Coding. Indie game. VFX. Opinions are my own. Writes in 🇫🇷 🇺🇸.

https://portfolio.exppad.com

https://twitter.com/exppad

Élie Michel

@elie-michel.bsky.social

· Jun 4

Real Time Multiscale Rendering of Dense Dynamic Stackings

Dense dynamic aggregates of similar elements are frequent in natural phenomena and challenging to render under full real time constraints. The optimal representation to render them changes drastically...

perso.telecom-paristech.fr

Élie Michel

@elie-michel.bsky.social

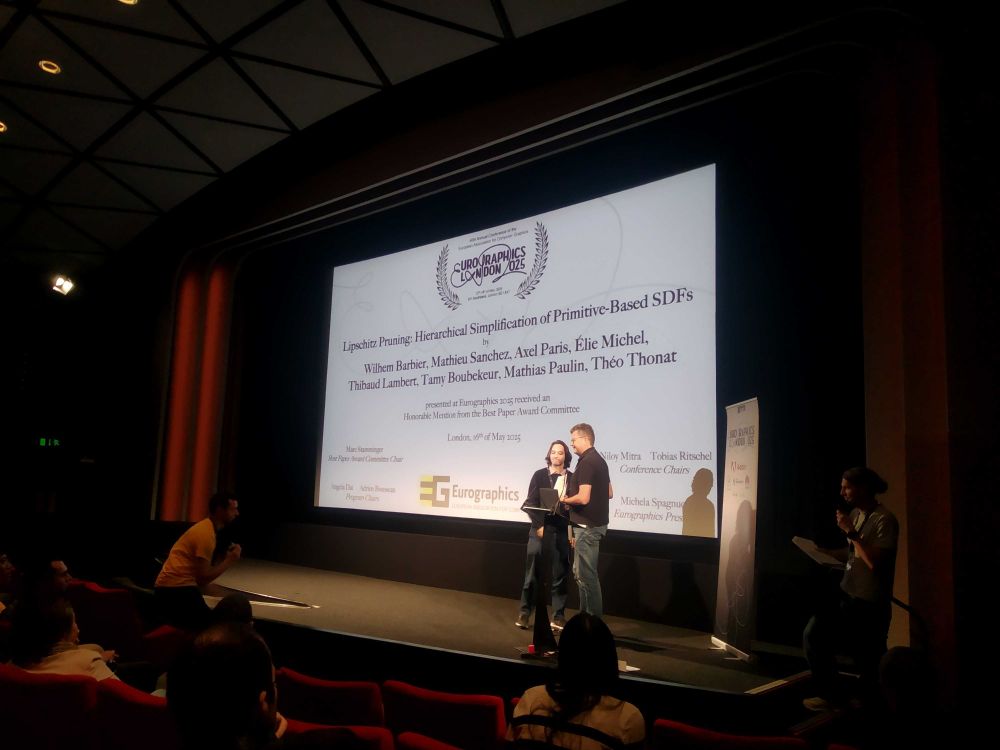

· Jun 3

Élie Michel

@elie-michel.bsky.social

· Apr 27

Google falling short of important climate target, cites electricity needs of AI

Google, which has an ambitious plan to address climate change with cleaner operations, came nowhere close to its goals last year, according to the company’s annual Environmental Report Tuesday.

apnews.com

Élie Michel

@elie-michel.bsky.social

· Apr 27

![Image with Motivation, Method, and References sections, transcribed below](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:gpta66q3ots5ia23kvfzpu5r/bafkreidqnyftshkcmtpzqlxsknnvcn34xszafururgdixddtcuzrmwloqq@jpeg)

MOTIVATION

Graphical Processing Units (GPUs) are at the core of Computer Graphics research. These chips are critical for rendering images, processing geometric data, and training machine learning models. Yet, the production and disposal of GPUs emits CO2 and results in toxic e-waste [1].

METHOD

We surveyed 888 papers presented at SIGGRAPH (premier conference for computer graphics research), from 2018 to 2024, and systematically gathered GPU models cited in the text.

We then contextualize the hardware reported in papers with publicly available data of consumers’ hardware [2, 3].

REFERENCES

[1] CRAWFORD, KATE. The Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence. Yale University Press, 2021.

[2] STEAM. Steam Hardware Survey. https://store.steampowered.com/hwsurvey

[3] BLENDER. Blender Open Data. https://opendata.blender.org