Interested in (deep) learning theory and others.

1) Non-trivial upper bounds on test error for both true and random labels

2) Meaningful distinction between structure-rich and structure-poor datasets

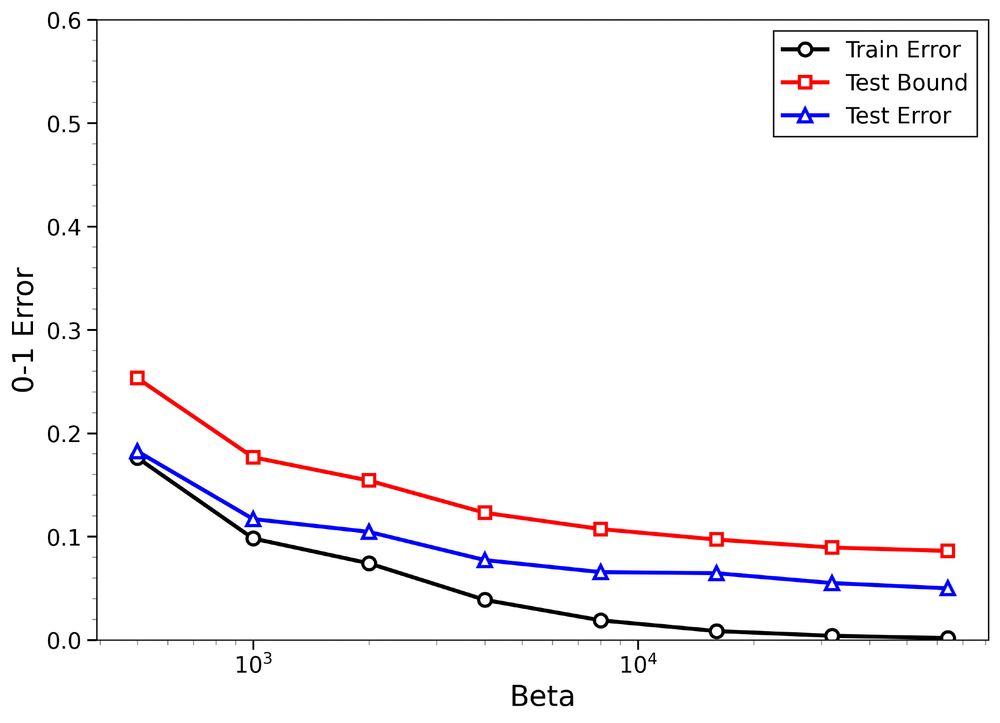

The figures: binary classification with FCNNs trained with SGLD on 8k MNIST images

Here, predictors are sampled from a prescribed probability distribution, allowing us to apply PAC-Bayesian theory to study their generalization properties.
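To illustrate how a PAC-Bayesian guarantee becomes a concrete number, here is a minimal Python sketch of the generic PAC-Bayes-kl bound in Maurer's (2004) form. With probability at least 1 − δ over n i.i.d. samples (n ≥ 8), every posterior Q over predictors satisfies kl(L̂(Q) ‖ L(Q)) ≤ (KL(Q‖P) + ln(2√n/δ))/n. The sketch inverts that inequality to get an upper bound on the test error L(Q). This is a standard textbook bound and not necessarily the one used in the work above. The function names are hypothetical. The empirical risk and KL(Q‖P) are assumed to be given; computing KL(Q‖P) for an SGLD-sampled predictor is not addressed here.

```python
import math


def binary_kl(q: float, p: float) -> float:
    """kl(q || p) between Bernoulli(q) and Bernoulli(p), using 0 * log(0) = 0."""
    eps = 1e-12
    p = min(max(p, eps), 1 - eps)  # keep logs finite at the endpoints
    out = 0.0
    if q > 0:
        out += q * math.log(q / p)
    if q < 1:
        out += (1 - q) * math.log((1 - q) / (1 - p))
    return out


def kl_inverse_upper(q: float, c: float, tol: float = 1e-10) -> float:
    """Largest p in [q, 1] with kl(q || p) <= c, found by bisection.

    Bisection is valid because kl(q || p) is increasing in p for p >= q.
    """
    lo, hi = q, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if binary_kl(q, mid) <= c:
            lo = mid  # mid still satisfies the constraint
        else:
            hi = mid
    return lo


def pac_bayes_kl_bound(emp_risk: float, kl_qp: float, n: int, delta: float = 0.05) -> float:
    """Upper bound on the expected 0-1 risk of Q (hypothetical helper, assumes n >= 8)."""
    c = (kl_qp + math.log(2 * math.sqrt(n) / delta)) / n
    return kl_inverse_upper(emp_risk, c)
```

For example, with the hypothetical inputs `pac_bayes_kl_bound(0.02, 100.0, 8000)`, the result lies strictly between the empirical risk 0.02 and 1. Shrinking the KL term tightens the bound, which is why the choice of prior P matters so much in this kind of analysis.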

arxiv.org/abs/1611.03530

📍Poster Session 1 #125

We present a new empirical Bernstein inequality for Hilbert-space-valued random processes, relevant for dependent and even non-stationary data.

w/ Andreas Maurer, @vladimir-slk.bsky.social & M. Pontil

📄 Paper: openreview.net/forum?id=a0E...
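For context, here is a minimal Python sketch of the classical scalar, i.i.d. empirical Bernstein bound of Maurer and Pontil (2009). The new inequality above extends this kind of result to Hilbert-space-valued, dependent processes; the sketch is the classical special case and not the new result. For X_1, …, X_n i.i.d. in [0, 1] with n ≥ 2 and sample variance V_n, the bound states that with probability at least 1 − δ, E[X] ≤ mean + √(2 V_n ln(2/δ)/n) + 7 ln(2/δ)/(3(n − 1)). The function name is hypothetical.

```python
import math
from statistics import mean, variance


def empirical_bernstein_upper(xs: list[float], delta: float = 0.05) -> float:
    """Classical (scalar, i.i.d.) empirical Bernstein upper bound on E[X].

    Assumes the samples are i.i.d. and lie in [0, 1]; this is NOT the
    Hilbert-space / dependent-data inequality announced in the post.
    """
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two samples")
    if any(x < 0 or x > 1 for x in xs):
        raise ValueError("samples must lie in [0, 1]")
    v = variance(xs)  # unbiased sample variance (divides by n - 1)
    log_term = math.log(2 / delta)
    return mean(xs) + math.sqrt(2 * v * log_term / n) + 7 * log_term / (3 * (n - 1))
```

The variance term dominates when the samples are noisy. When they have low variance, only the O(ln(1/δ)/n) term remains. This adaptivity to the observed variance is the main advantage over a Hoeffding-type bound.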