We got too used to no longer seeing the GPT base model.

Let’s compare to the DeepSeek base model.

The jump from base to reasoning is tremendous!

Large 3 starts off slightly higher than DeepSeek base. I’m eager to see Magistral Large!

Large 3 improves reasoning compared to Large 2, but is overtaken by… reasoning models.

The OG one topped open models of that size. For the first time, a local model felt usable on consumer hardware.

Not only is the latest Ministral 8B on the Pareto frontier for knowledge vs. cost (and for search, math, agentic uses)…

New model, new benchmarks!

The biggest jump for DeepSeek V3.2 is on agentic coding, where it seems poised to push many models off the Pareto frontier, including Sonnet 4.5, MiniMax M2, and K2 Thinking.
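A quick illustration of what "pushing models off the Pareto frontier" means: a model stays on the frontier only if no other model is both at least as cheap and at least as good, and strictly better on one of the two. The model names and numbers below are made up, and `cost`/`score` stand in for whatever axes a given chart uses.

```python
from typing import NamedTuple


class Model(NamedTuple):
    name: str
    cost: float   # lower is better (e.g. price of a benchmark run)
    score: float  # higher is better (e.g. benchmark accuracy)


def dominates(a: Model, b: Model) -> bool:
    """True if a is at least as cheap and as good as b, and strictly better on one axis."""
    return (a.cost <= b.cost and a.score >= b.score
            and (a.cost < b.cost or a.score > b.score))


def pareto_frontier(models: list[Model]) -> list[Model]:
    """Keep the models that no other model dominates, sorted by cost."""
    front = [m for m in models if not any(dominates(o, m) for o in models)]
    return sorted(front, key=lambda m: m.cost)


if __name__ == "__main__":
    # Made-up models and numbers, for illustration only.
    models = [
        Model("A", cost=1.0, score=50.0),
        Model("B", cost=2.0, score=60.0),
        Model("C", cost=3.0, score=55.0),  # dominated by B
        Model("D", cost=4.0, score=70.0),
    ]
    print([m.name for m in pareto_frontier(models)])  # ['A', 'B', 'D']

    # A cheaper, stronger newcomer knocks B and D off the frontier.
    models.append(Model("E", cost=1.5, score=72.0))
    print([m.name for m in pareto_frontier(models)])  # ['A', 'E']
```

Note that A survives: E beats it on score but costs more, so neither dominates the other.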

It jumps ahead of the pack, which had caught up with Gemini 2.5.

Its intrinsic knowledge is unmatched, surpassing Gemini 2.5 and GPT-5.1.

bsky.app/profile/espa...

Why?

Company C1 releases model M1 and discloses benchmarks B1.

Company C2 releases model M2, showing off benchmarks B2, which are distinct from B1.

Comparing those models is hard since they don't share benchmarks!
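Put in code, the only fair head-to-head is on the benchmarks both companies report. A minimal sketch; `shared_benchmarks` is a hypothetical helper, and the benchmark names and scores are invented.

```python
def shared_benchmarks(reported: dict[str, dict[str, float]]) -> set[str]:
    """Return the benchmarks for which every model in `reported` has a score."""
    if not reported:
        return set()
    score_sets = [set(scores) for scores in reported.values()]
    return set.intersection(*score_sets)


if __name__ == "__main__":
    # Hypothetical self-reported scores: M1 only discloses B1-style
    # benchmarks, M2 only B2-style ones. Names and numbers are made up.
    reported = {
        "M1": {"b1_math": 0.81, "b1_code": 0.64},
        "M2": {"b2_search": 0.72, "b2_agentic": 0.55},
    }
    print(shared_benchmarks(reported))  # set(): nothing to compare on

    # Once someone also scores M1 on a B2 benchmark, a comparison exists.
    reported["M1"]["b2_search"] = 0.69
    print(shared_benchmarks(reported))  # {'b2_search'}
```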

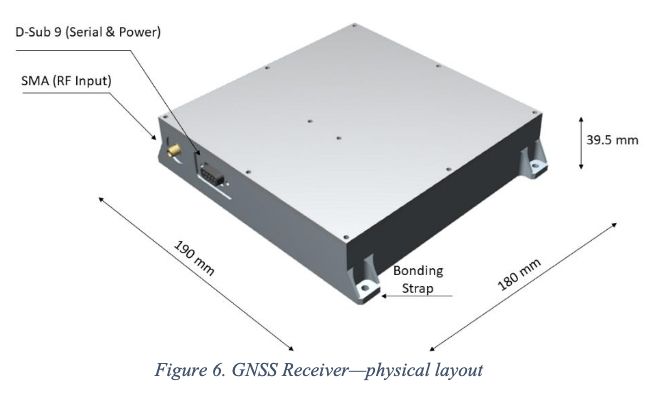

Greatly simplifies space travel.

I still believe we should set up a separate GNSS on every planet.

ntrs.nasa.gov/api/citation...

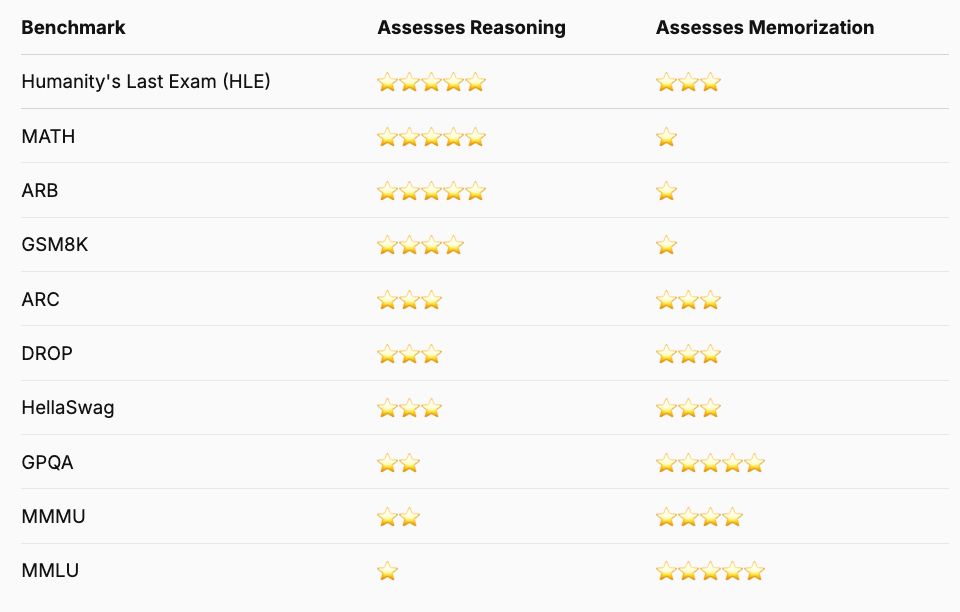

Model memorization is thus less useful than reasoning.

Yet a lot of benchmarks still focus on the former.

Unsurprisingly, base models estimate the probability of a correct answer better than instruct models, which assign a low probability to text that doesn't match their style.
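For context, one common way to run multiple-choice benchmarks without generation is to score each candidate answer by the log-probability the model assigns to it and pick the highest. This sketch assumes you already have per-token log-probs from some model; the numbers are made up, and `answer_logprob`/`pick_answer` are hypothetical helpers, not a library API. An instruct model's style mismatch would show up as lower log-probs on the candidates, which can skew this ranking.

```python
def answer_logprob(token_logprobs: list[float], normalize: bool = False) -> float:
    """Log-probability of a whole answer: the sum of its per-token log-probs,
    optionally divided by the token count (length normalization)."""
    if not token_logprobs:
        raise ValueError("an answer needs at least one token")
    total = sum(token_logprobs)
    return total / len(token_logprobs) if normalize else total


def pick_answer(candidates: dict[str, list[float]], normalize: bool = False) -> str:
    """Return the candidate answer the model finds most likely."""
    return max(candidates, key=lambda c: answer_logprob(candidates[c], normalize))


if __name__ == "__main__":
    # Made-up per-token log-probs a model might assign to each answer,
    # given the question as context.
    candidates = {
        "A": [-0.9],                    # short answer, total -0.9
        "B": [-0.3, -0.3, -0.3, -0.3],  # longer answer, total about -1.2
    }
    print(pick_answer(candidates))                  # A (raw sum favors short answers)
    print(pick_answer(candidates, normalize=True))  # B (per-token -0.3 beats -0.9)
```

Whether to length-normalize is a benchmark design choice; as the example shows, it can flip the chosen answer.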

It is brittle because it depends on #browser being a sibling after #navigator-toolbox.

Do you have suggestions?

Here is my solution so far.

(This requires saving the CSS file as userChrome.css in a chrome/ folder inside the profile's root directory, shown in about:profiles, then setting toolkit.legacyUserProfileCustomizations.stylesheets to true in about:config.)

The first GPT had about a hundred million parameters and already called itself large.

GPT-4 reportedly has almost two trillion.