Lucas Beyer (bl16)

@giffmana.ai

Researcher (OpenAI. Ex: DeepMind, Brain, RWTH Aachen), Gamer, Hacker, Belgian.

Anon feedback: https://admonymous.co/giffmana

📍 Zürich, Suisse 🔗 http://lucasb.eyer.be

I noticed that I'm not using bsky much anymore. Not sure why, vibes.

Anyways, someone noticing that DeepSeek refuses to answer *anything* about Xi Jinping, even the question of whether he exists at all, prompted me to write a short snippet on safety fine-tuning: lb.eyer.be/s/safety-sft...

January 26, 2025 at 9:21 PM

First candidate for banger of the year appeared, only 2 days in:

January 2, 2025 at 10:26 PM

Reposted by Lucas Beyer (bl16)

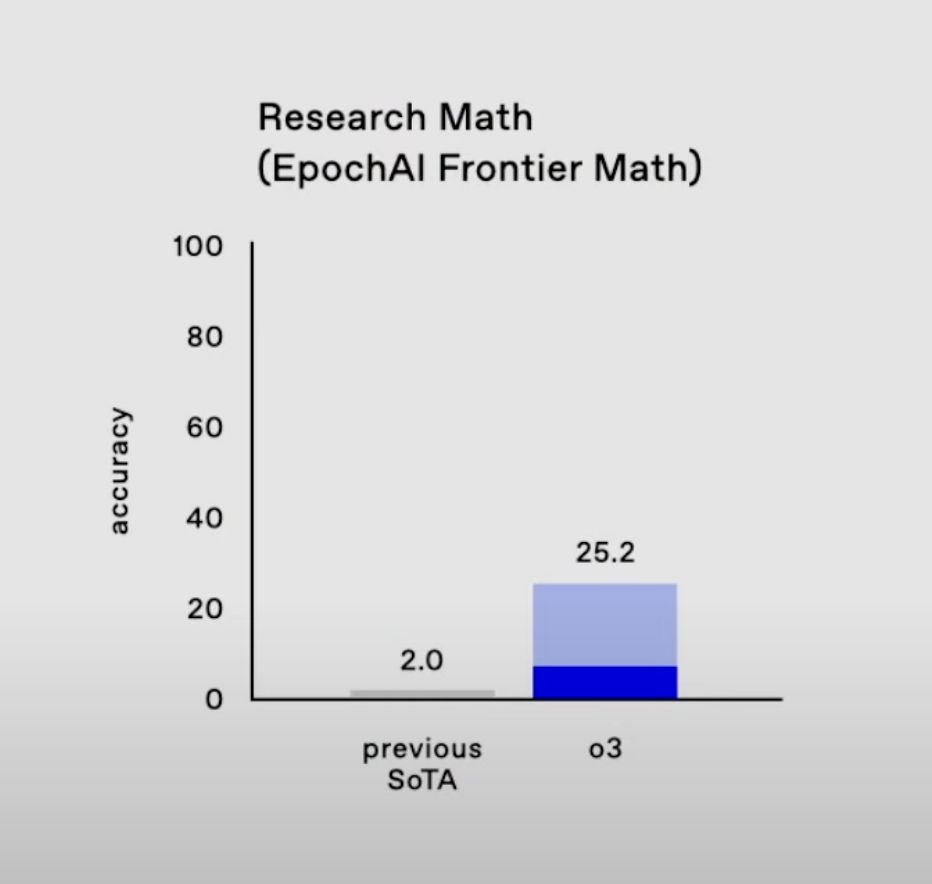

OpenAI skips o2, previews o3 scores, and they're truly crazy. Huge progress on the few benchmarks we think are truly hard today, including ARC-AGI.

RIP to people who say any of "progress is done," "scale is done," or "LLMs can't reason."

2024 was awesome. I love my job.

December 20, 2024 at 6:08 PM

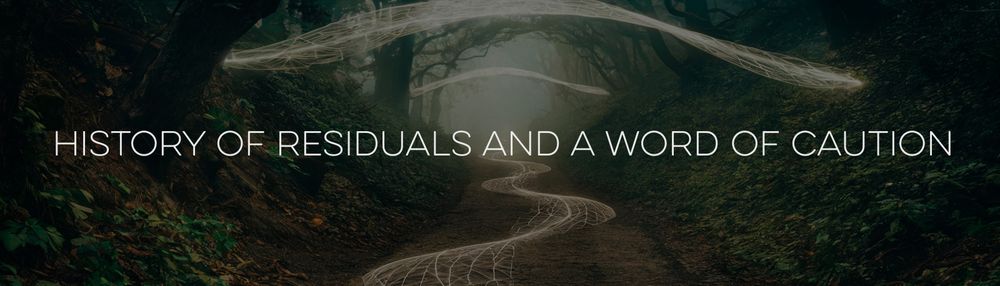

A post by @cloneofsimo on Twitter made me write up some lore about residuals, ResNets, and Transformers. And I couldn't resist sliding in the usual cautionary tale about small/mid-scale != large-scale.

Blogpost: lb.eyer.be/s/residuals....

December 18, 2024 at 11:14 PM

Good morning Vancouver!

Things are different here: this guy is alone, chonky, and not scared at all; I was more scared of him towards the end lol.

Also look at that … industrialization

December 10, 2024 at 6:09 PM

Good morning! On my way to NeurIPS, slightly sad to leave this beautiful place and my family for the week, but also excited to meet many new and old friends at NeurIPS!

December 9, 2024 at 8:40 AM

Reposted by Lucas Beyer (bl16)

One of the best tutorials for understanding Transformers!

📽️ Watch here: www.youtube.com/watch?v=bMXq...

Big thanks to @giffmana.ai for this excellent content! 🙌

[M2L 2024] Transformers - Lucas Beyer

YouTube video by Mediterranean Machine Learning (M2L) summer school

December 8, 2024 at 9:58 AM

Reposted by Lucas Beyer (bl16)

Attending #NeurIPS2024? If you're interested in multimodal systems, building inclusive & culturally aware models, and how fractals relate to LLMs, we've 3 posters for you. I look forward to presenting them on behalf of our GDM team @ Zurich & collaborators. Details below (1/4)

December 7, 2024 at 6:50 PM

Reposted by Lucas Beyer (bl16)

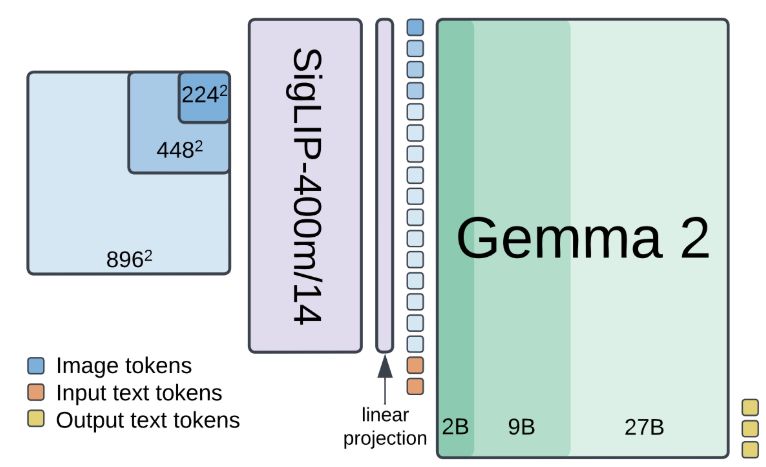

The fourth nice thing we* have for you this week: PaliGemma 2.

It’s also a perfect transition: this v2 was carried a lot more by @andreaspsteiner.bsky.social, André, and Michael than by us.

Crazy new SOTA tasks! Interesting resolution vs. LLM size study! Better OCR! Less hallucination!

🚀🚀PaliGemma 2 is our updated and improved PaliGemma release using the Gemma 2 models and providing new pre-trained checkpoints for the full cross product of {224px,448px,896px} resolutions and {3B,10B,28B} model sizes.

1/7

December 5, 2024 at 8:19 PM

Reposted by Lucas Beyer (bl16)

OpenAI is coming to Switzerland, and the founding team of the new Zurich office is nothing short of stellar🇨🇭⭐️

What a fantastic win for the European AI landscape 🇪🇺

Congrats to @giffmana.ai @kolesnikov.ch @xzhai.bsky.social for that move, and to OpenAI for making these hires!

So, now that our move to OpenAI became public, @kolesnikov.ch @xzhai.bsky.social and I are drowning in notifications. I read everything, but may not reply.

Excited about this new journey! 🚀

Quick FAQ thread...

OK, it's yesterday's news already, but a good night's sleep is important.

After 7 amazing years at Google Brain/DM, I am joining OpenAI. Together with @xzhai.bsky.social and @giffmana.ai, we will establish the OpenAI Zurich office. Proud of our past work and looking forward to the future.

December 5, 2024 at 8:24 AM

@francois.fleuret.org hey, can you buy me a few copies of tomorrow's Le Temps, if the news about us is printed in it? I'll pay you in beers at NeurIPS.

December 4, 2024 at 9:52 PM

So, now that our move to OpenAI became public, @kolesnikov.ch @xzhai.bsky.social and I are drowning in notifications. I read everything, but may not reply.

Excited about this new journey! 🚀

Quick FAQ thread...

OK, it's yesterday's news already, but a good night's sleep is important.

After 7 amazing years at Google Brain/DM, I am joining OpenAI. Together with @xzhai.bsky.social and @giffmana.ai, we will establish the OpenAI Zurich office. Proud of our past work and looking forward to the future.

December 4, 2024 at 9:23 PM

Reposted by Lucas Beyer (bl16)

OK, it's yesterday's news already, but a good night's sleep is important.

After 7 amazing years at Google Brain/DM, I am joining OpenAI. Together with @xzhai.bsky.social and @giffmana.ai, we will establish the OpenAI Zurich office. Proud of our past work and looking forward to the future.

December 4, 2024 at 9:14 AM

Reposted by Lucas Beyer (bl16)

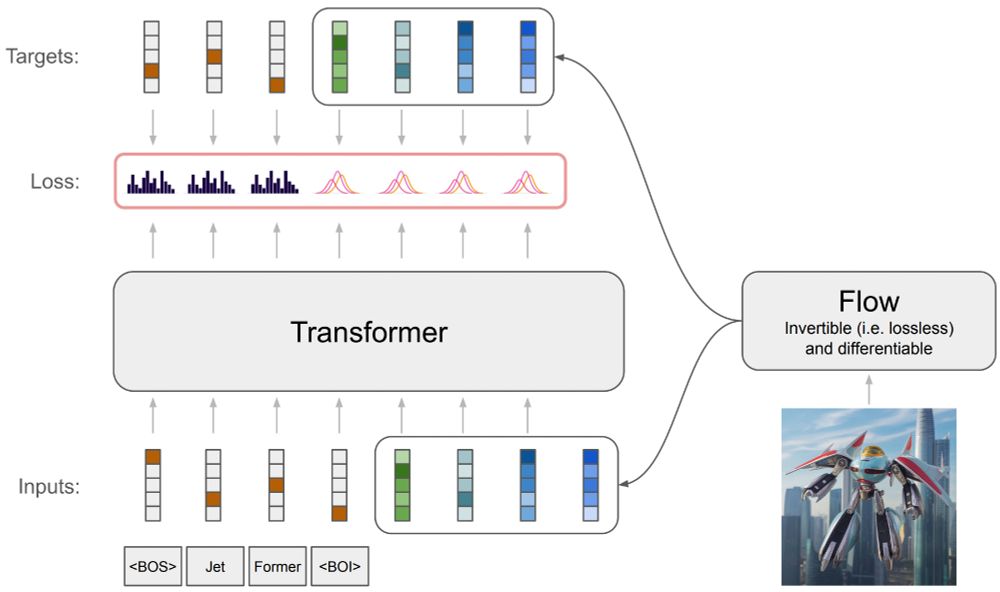

JetFormer: An Autoregressive Generative Model of Raw Images and Text

@mtschannen.bsky.social @asusanopinto.bsky.social @kolesnikov.ch

tl;dr: VQGAN quality w/o VQGAN, but optimizing pixel tokens with NLL. Also some normalizing flow magic, which I have to read up on.

arxiv.org/abs/2411.19722

December 3, 2024 at 7:16 PM

So this was the second cool thing that we* got this week.

* this time the « we » is really just me, but hey, academic we 🙃

Our big_vision codebase is really good! And it's *the* reference for ViT, SigLIP, PaliGemma, JetFormer, ... including fine-tuning them.

However, it's criminally undocumented. I tried using it outside Google to fine-tune PaliGemma and SigLIP on GPUs, and wrote a tutorial: lb.eyer.be/a/bv_tuto.html

December 3, 2024 at 8:40 AM

Our big_vision codebase is really good! And it's *the* reference for ViT, SigLIP, PaliGemma, JetFormer, ... including fine-tuning them.

However, it's criminally undocumented. I tried using it outside Google to fine-tune PaliGemma and SigLIP on GPUs, and wrote a tutorial: lb.eyer.be/a/bv_tuto.html

December 3, 2024 at 12:18 AM

Reposted by Lucas Beyer (bl16)

The answer has just dropped: bsky.app/profile/kole...

2021: Replace every CNN with a Transformer

2022: Replace every GAN with diffusion models

2023: Replace every NeRF with 3DGS

2024: Replace every diffusion model with Flow Matching

2025: ???

December 2, 2024 at 7:00 PM

The first of the cool things we* got this week!

Typically, you'd train a VQ-VAE/GAN tokenizer first, and then use its tokens for your LLM/DiT/... But we all know end-to-end eventually wins over pipelines.

With flow models, you can actually learn pixel-LLM-pixel end-to-end!

Have you ever wondered how to train an autoregressive generative transformer on text and raw pixels, without a pretrained visual tokenizer (e.g. VQ-VAE)?

We have been pondering this during summer and developed a new model: JetFormer 🌊🤖

arxiv.org/abs/2411.19722

A thread 👇

1/

December 2, 2024 at 7:23 PM

Some recent discussions made me write up a short read on how I think about doing computer vision research when there's clear potential for abuse.

Alternative title: why I decided to stop working on tracking.

Curious about others' thoughts on this.

lb.eyer.be/s/cv-ethics....

November 29, 2024 at 2:51 PM

hahahahhahaah holy cow, paligemma is good =)

November 28, 2024 at 1:18 PM

segment cow

not on the beach, but as you may know I like cows in "out of distribution" places and poses.

huggingface.co/spaces/big-v...

November 28, 2024 at 11:37 AM

Sorry, but I was strangely attracted... @5trange4ttractor.bsky.social =D

November 27, 2024 at 9:01 PM

Here's a fun real-life prompt where 30 min of Googling didn't really help me.

The small/fast/mini chatbots all failed miserably.

The large ones work great (except Gemini).

Also:

1. I really like that C3.6 gives only the answer and asks whether to explain.

2. Wild that Chat understood the calls and added comments!

November 27, 2024 at 8:38 PM
