Joel Lehman

@joelbot3000.bsky.social

790 followers

53 following

18 posts

ML researcher, co-author Why Greatness Cannot Be Planned. Creative+safe AI, AI+human flourishing, philosophy; prev OpenAI / Uber AI / Geometric Intelligence


Reposted by Joel Lehman

Joel Lehman

@joelbot3000.bsky.social

· Jan 24

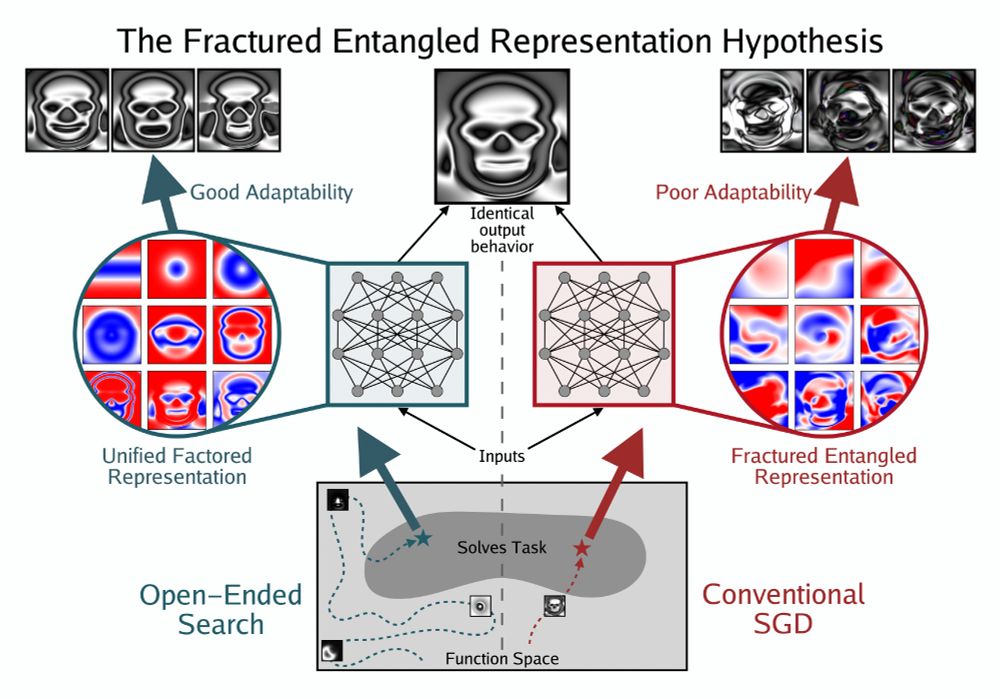

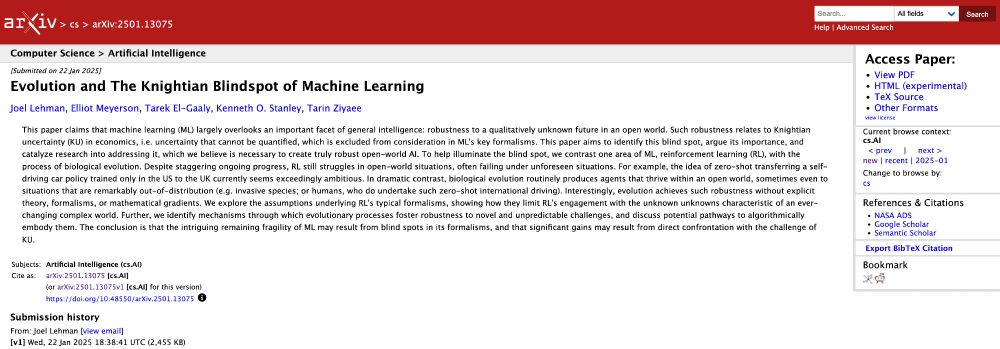

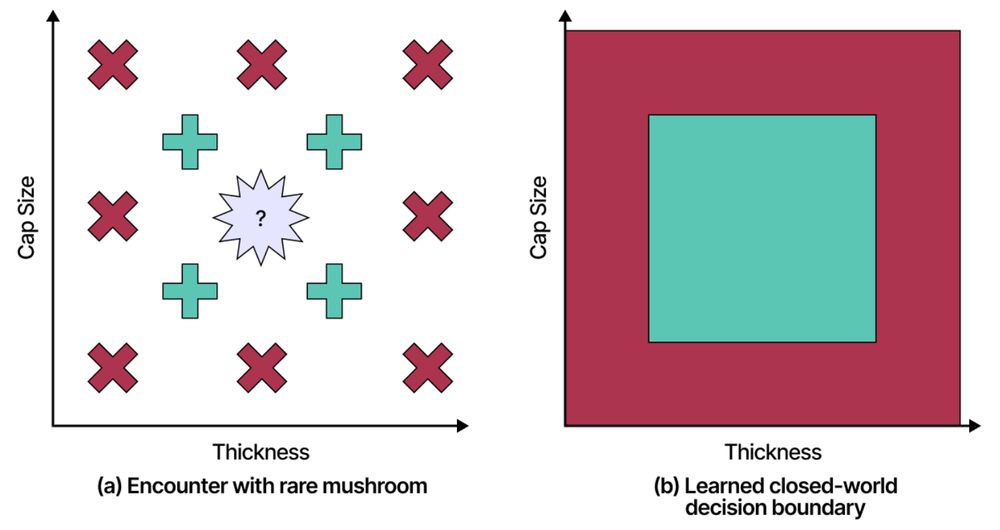

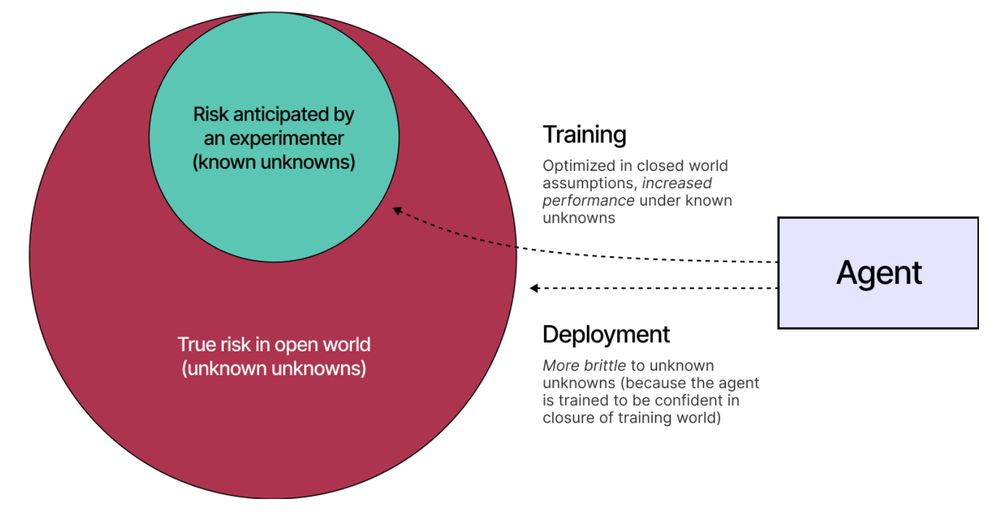

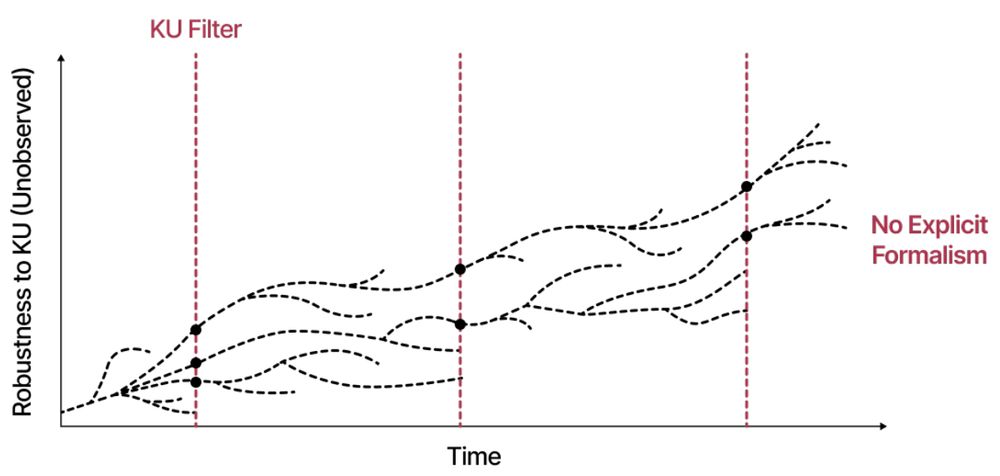

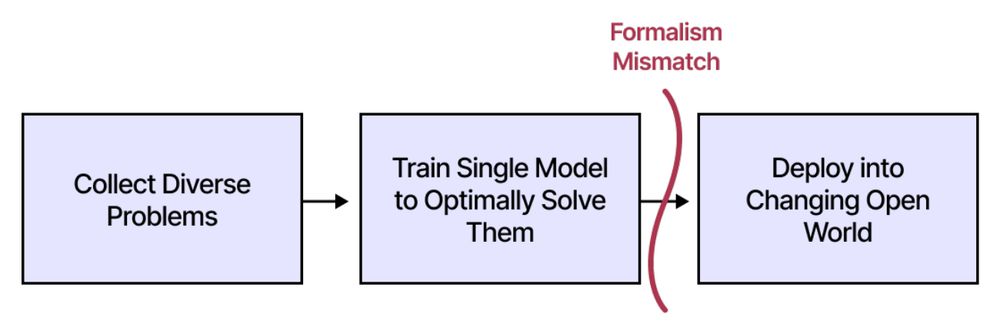

Evolution and The Knightian Blindspot of Machine Learning

This paper claims that machine learning (ML) largely overlooks an important facet of general intelligence: robustness to a qualitatively unknown future in an open world. Such robustness relates to...

arxiv.org

Joel Lehman

@joelbot3000.bsky.social

· Jan 24

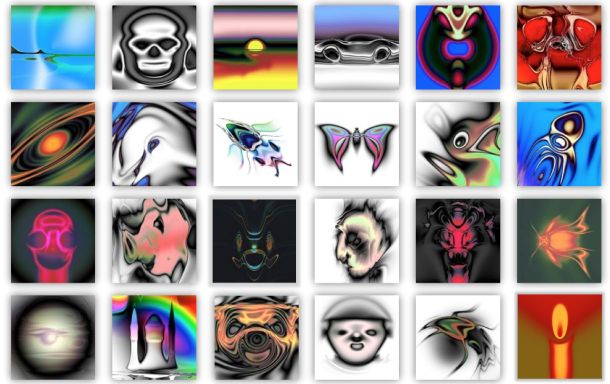

Kenneth Stanley (@kennethstanley.bsky.social)

In the past: CEO of Maven, Team Lead at OpenAI, head of basic/core research at Uber AI, professor at UCF.

Stuff I helped invent: NEAT, CPPNs, HyperNEAT, novelty search, POET, Picbreeder.

Book: Why Greatness Cannot Be Planned

bsky.app

Reposted by Joel Lehman

Joel Lehman

@joelbot3000.bsky.social

· Nov 25

Reposted by Joel Lehman

Max

@maxbittker.bsky.social

· Aug 10