📍New York, NY

👩‍💻 https://j-kruk.github.io/

📍 West Ballroom A-D #5211

⏰ Wednesday Dec 11th, 11 a.m. — 2 p.m. PST.

🙏 Huge thank you to @judyh.bsky.social, @polochau.bsky.social, and @diyiyang.bsky.social for their guidance!

🤗HF: huggingface.co/semi-truths

👾Github: github.com/J-Kruk/SemiT...

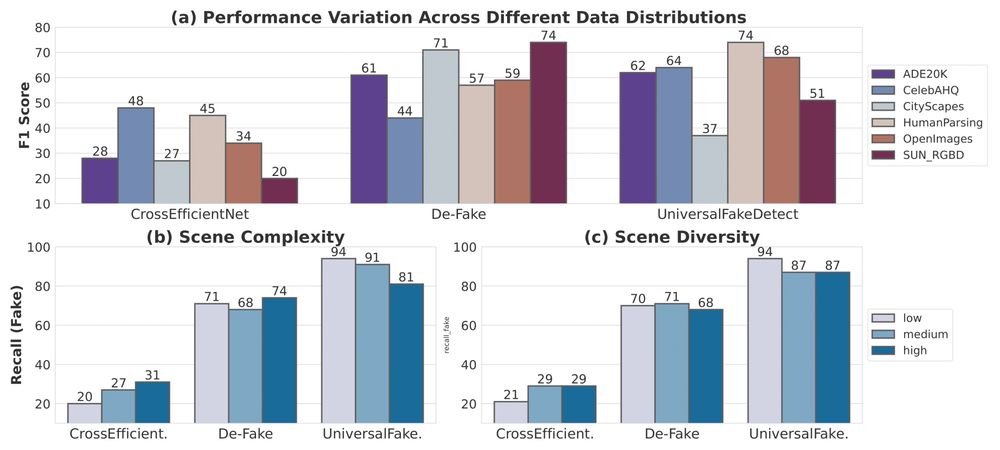

💡 UniversalFakeDetector suffers a >35-point performance drop across different scenes, and a >5-point drop across magnitudes of change.

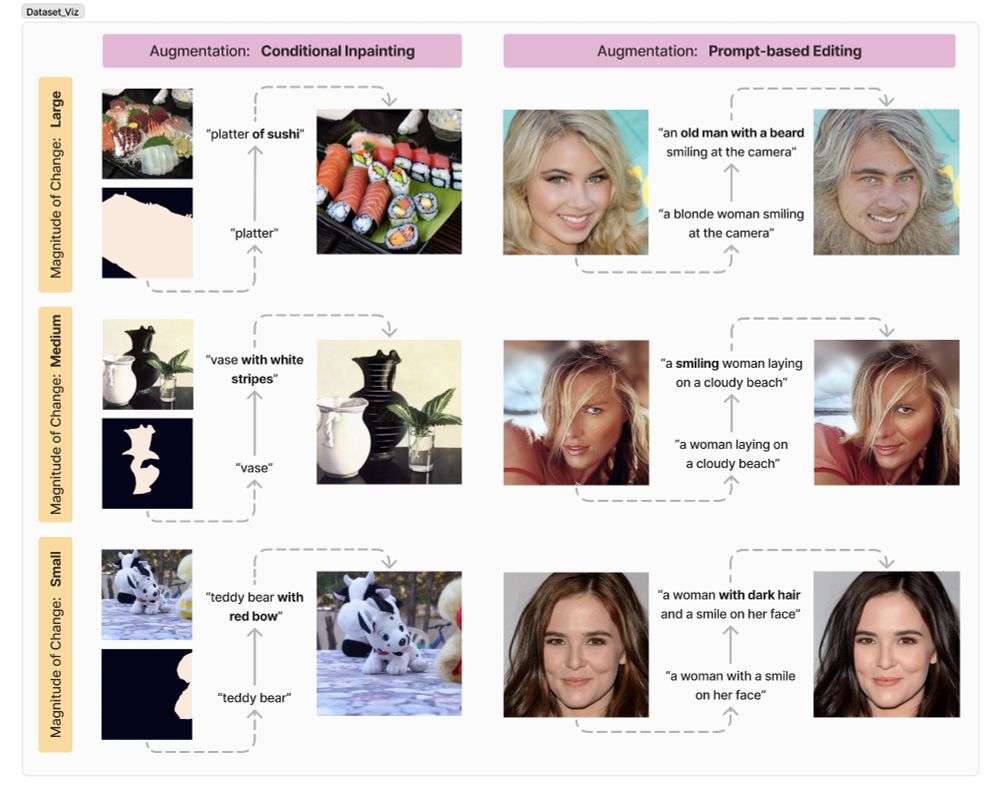

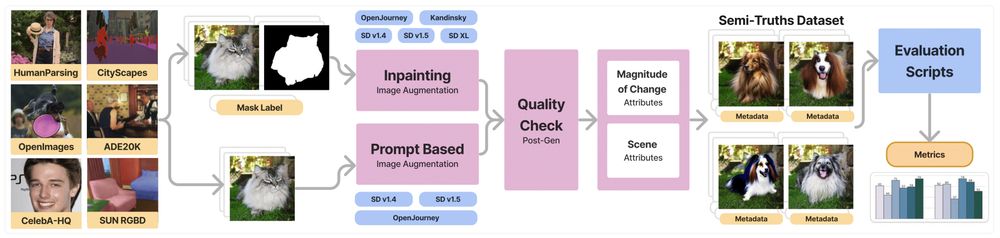

We perturb entity & image captions with LLMs, then apply different diffusion models and augmentation techniques to alter images.

It includes a wide array of scenes & subjects, as well as various magnitudes of image augmentation. We define “magnitude” by the size of the augmented region and the semantic change achieved.
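To make the "size of the augmented region" half of that definition concrete, here is a minimal sketch. It assumes the edited region is available as a 2D binary mask. The helper names `area_ratio` and `size_bucket` and the 0.1 / 0.4 cutoffs are hypothetical and chosen for illustration; they are not taken from the dataset or its code. The semantic-change half is not covered here.

```python
from typing import Sequence


def area_ratio(mask: Sequence[Sequence[int]]) -> float:
    """Fraction of pixels marked as augmented (nonzero) in a 2D binary mask."""
    total = sum(len(row) for row in mask)
    if total == 0:
        raise ValueError("mask must contain at least one pixel")
    changed = sum(1 for row in mask for px in row if px)
    return changed / total


def size_bucket(ratio: float, small_max: float = 0.1, medium_max: float = 0.4) -> str:
    """Map an area ratio to a coarse label. The cutoffs are placeholders,
    not values used by the dataset."""
    if ratio <= small_max:
        return "small"
    if ratio <= medium_max:
        return "medium"
    return "large"


# Toy 4x4 mask where the central 2x2 block was edited.
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
ratio = area_ratio(mask)
print(ratio, size_bucket(ratio))
```

A ratio like this only captures how much of the image changed, so a small edit with a large semantic effect (a face, for example) would still need a separate semantic measure.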

🔍 One such case is known as “Sleepy Joe”, where a video of Joe Biden was changed only in the facial region to make it appear as though he fell asleep at a podium.

🤔 However, most SOTA systems are trained exclusively on fully generated (end-to-end) images, or on data from very constrained distributions.
