Lukas Schäfer

@lukaschaefer.bsky.social

280 followers

160 following

50 posts

www.lukaschaefer.com

Researcher @msftresearch.bsky.social; working on autonomous agents in video games; PhD Univ of Edinburgh; Ex Huawei Noah’s Ark Lab, Dematic; Young researcher HLF 2022

Lukas Schäfer

@lukaschaefer.bsky.social

· May 18

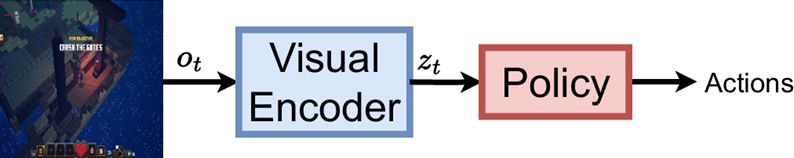

GitHub - microsoft/imitation_learning_in_modern_video_games: Accompanying code for "Visual Encoders for Data-Efficient Imitation Learning in Modern Video Games" publication

github.com
