Nicholas Sharp

@nmwsharp.bsky.social

1.2K followers

93 following

26 posts

3D geometry researcher: graphics, vision, 3D ML, etc | Senior Research Scientist @NVIDIA | polyscope.run and geometry-central.net | running, hockey, baking, & cheesy sci fi | opinions my own | he/him

personal website: nmwsharp.com

Reposted by Nicholas Sharp

Abhishek Madan

@abhishekmadan.bsky.social

· Jul 30

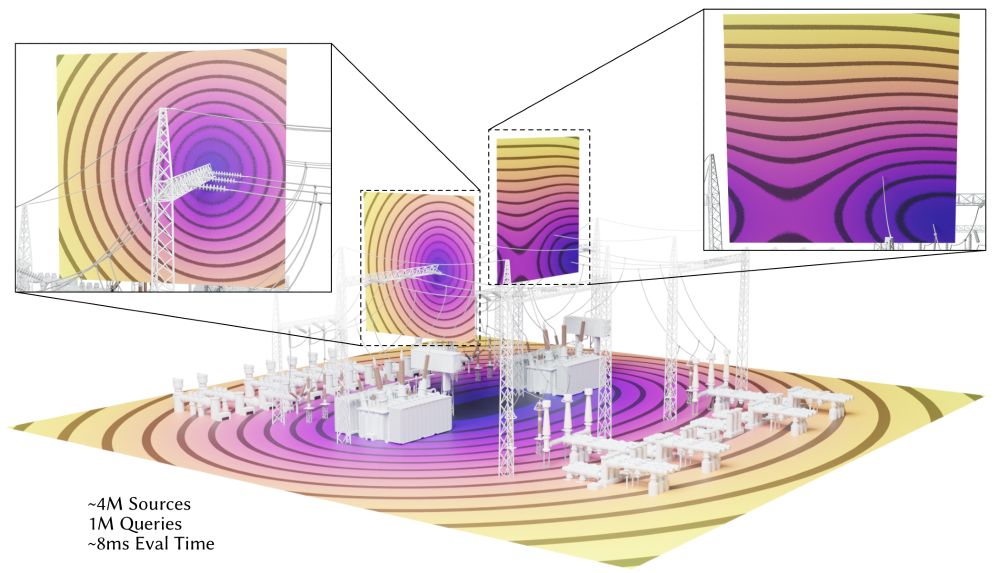

Nicholas Sharp

@nmwsharp.bsky.social

· Jul 2

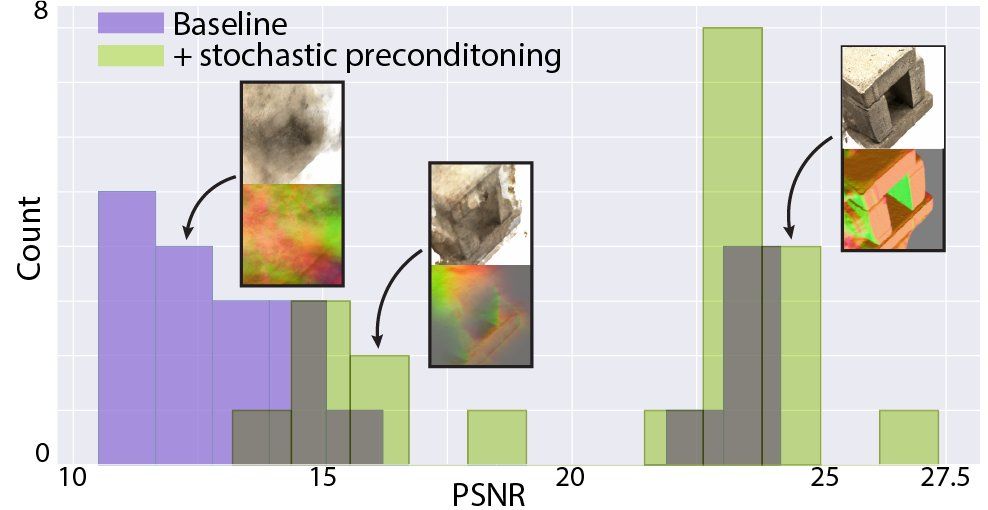

Reposted by Nicholas Sharp

David Levin

@diwlevin.bsky.social

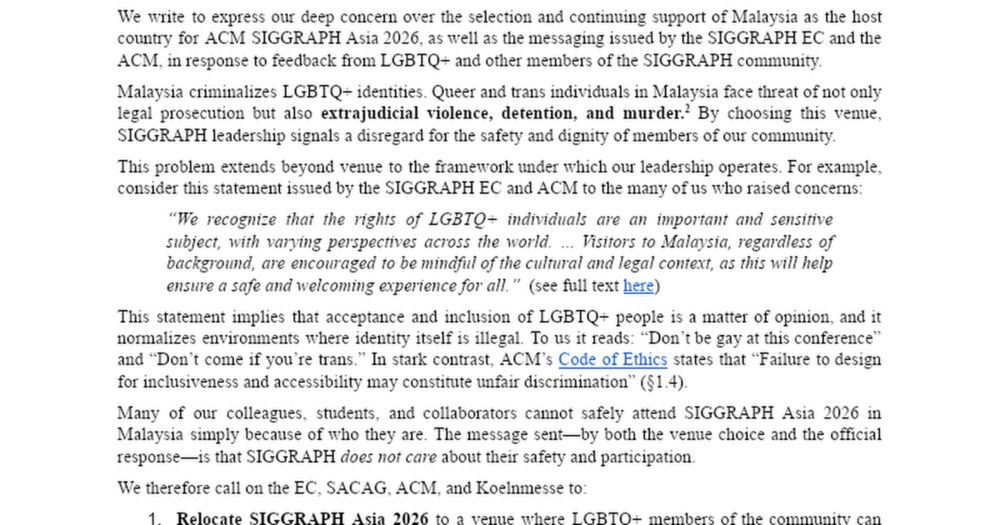

· Jun 18

Open Letter to the SIGGRAPH Leadership

RE: Call for SIGGRAPH Asia to relocate from Malaysia and commit to a venue selection process that safeguards LGBTQ+ and other at-risk communities. To the SIGGRAPH Leadership: SIGGRAPH Executive Commit...

docs.google.com

Nicholas Sharp

@nmwsharp.bsky.social

· Jun 8

Reposted by Nicholas Sharp

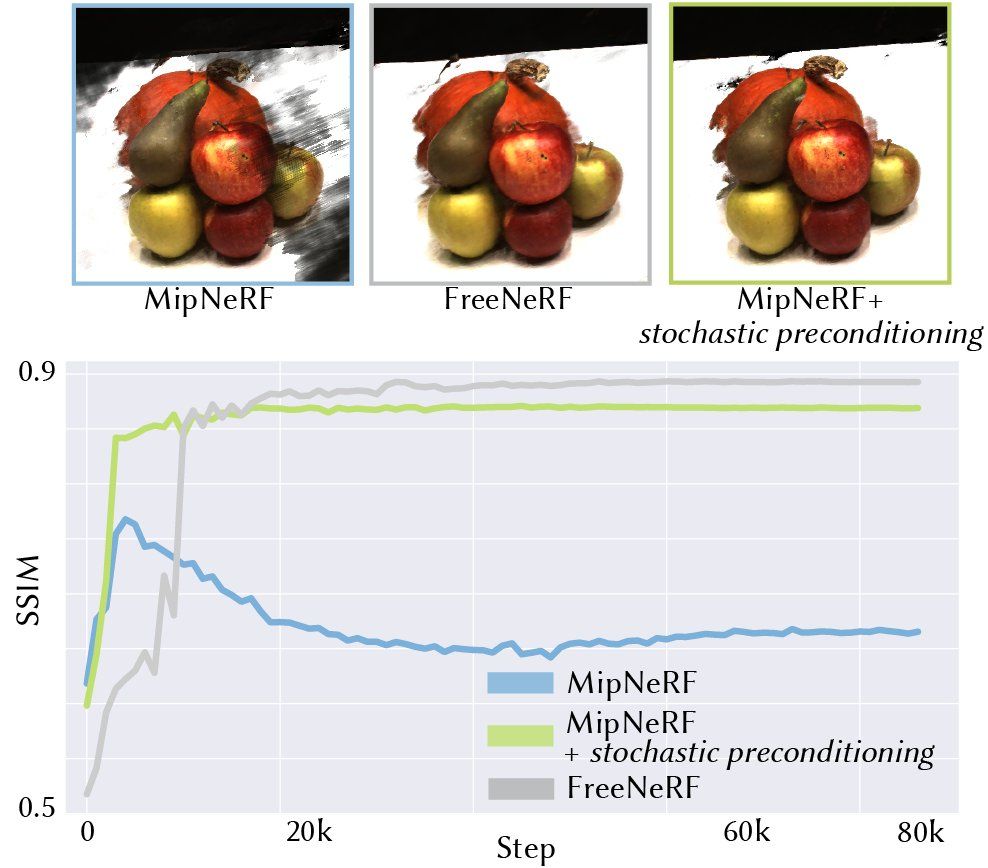

Nicholas Sharp

@nmwsharp.bsky.social

· Jun 4

Nicholas Sharp

@nmwsharp.bsky.social

· Jun 3
