Writes at https://olickel.com

This might be the start of us actually being able to talk to models with images, conveying a lot more than what's been possible before.

1. Background - writing, reasoning, definitions, etc.

2. Primary Task - what's the overarching objective?

3. Audience - who is this for? What is the intended outcome?

Then come the more specific parts:

5. Ideas - in this case that would be the characters themselves

6. Guidelines - for us that's style guidelines
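The ordering above (broad context first, then the specifics) can be sketched as a small prompt builder. This is a minimal sketch under assumptions: `PromptSpec`, `build_prompt`, and the `##` section headings are hypothetical choices for illustration, not a format the post prescribes, and the sketch covers only the five sections named here.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the prompt sections listed above."""
    background: str                                       # 1. writing, reasoning, definitions, etc.
    primary_task: str                                     # 2. the overarching objective
    audience: str                                         # 3. who it's for, intended outcome
    ideas: list[str] = field(default_factory=list)       # 5. e.g. the characters
    guidelines: list[str] = field(default_factory=list)  # 6. e.g. style guidelines


def build_prompt(spec: PromptSpec) -> str:
    """Render the broad context first, then the more specific parts."""
    parts = [
        f"## Background\n{spec.background}",
        f"## Primary Task\n{spec.primary_task}",
        f"## Audience\n{spec.audience}",
    ]
    # Specific parts are only emitted when there is something to say.
    if spec.ideas:
        parts.append("## Ideas\n" + "\n".join(f"- {i}" for i in spec.ideas))
    if spec.guidelines:
        parts.append("## Guidelines\n" + "\n".join(f"- {g}" for g in spec.guidelines))
    return "\n\n".join(parts)
```

Keeping the sections as separate fields makes it easy to swap out just the Ideas (new characters) or Guidelines (new style) while the Background, Primary Task, and Audience stay fixed.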

Go to Opus with the results, ask for updated specs (or addendums), and go back to Nano with the specs.

Generate from specs ↠ Render ↠ Critique ↠ Edit specs ↠ Regenerate works extremely well with Opus. For fun, you can also throw in Nano to generate out-there-undesignable-but-cool frontends to remix from.
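The Generate from specs ↠ Render ↠ Critique ↠ Edit specs ↠ Regenerate loop can be sketched as a small driver with the model calls passed in as functions. This is a minimal sketch under assumptions: `refine`, `generate`, `render`, `critique`, and `edit_specs` are hypothetical names. In practice `critique` and `edit_specs` might be Opus calls, and `generate` might be Opus writing frontend code or Nano producing an image.

```python
from typing import Any, Callable


def refine(
    specs: str,
    generate: Callable[[str], Any],               # specs -> output (code, image, ...)
    render: Callable[[Any], Any],                 # output -> something reviewable, e.g. a screenshot
    critique: Callable[[Any, str], list[str]],    # (render, specs) -> list of issues
    edit_specs: Callable[[str, list[str]], str],  # (specs, issues) -> updated specs or addenda
    max_rounds: int = 5,
) -> tuple[str, Any]:
    """Generate from specs -> Render -> Critique -> Edit specs -> Regenerate.

    Stops when the critique returns no issues or after `max_rounds` spec edits.
    """
    output = generate(specs)
    for _ in range(max_rounds):
        issues = critique(render(output), specs)
        if not issues:
            break                          # reviewer is satisfied with the current render
        specs = edit_specs(specs, issues)  # fix the specs, not the output
        output = generate(specs)           # regenerate from the updated specs
    return specs, output
```

The design choice here is that feedback always flows back into the specs rather than into the generated output, so the specs remain the single source of truth and every regeneration starts from them.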

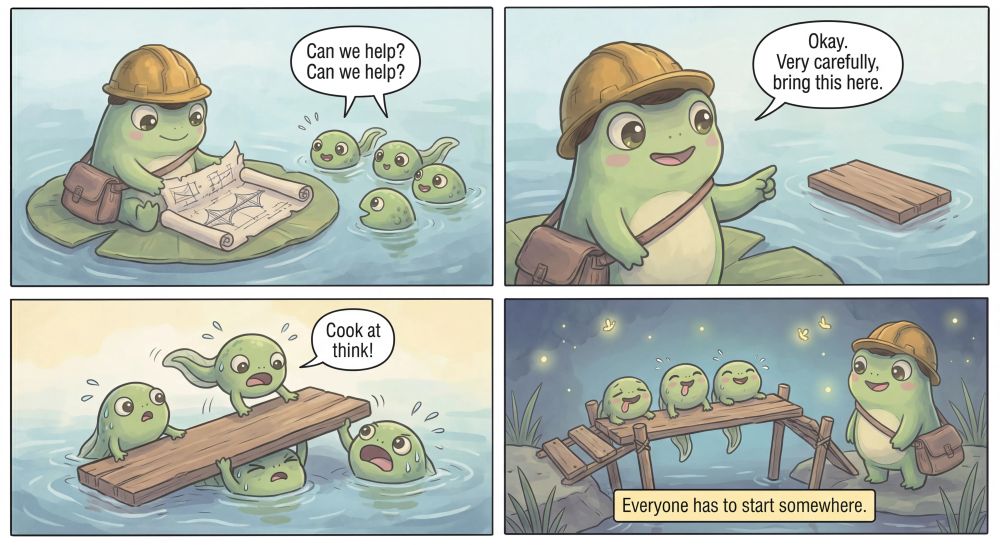

Sorry - next strip coming up!

Turns out Opus is now miles ahead of even Gemini at visual understanding. This is a model that can pick out and critique emotional impact, while noticing elements 10 pixels out of place.

Opus is amazing at visual review and at making very, VERY detailed specs, and Nano Banana is good at following them.

Let me tell you two stories.

1) Gemini 2.5 Pro in AI Studio for Deep Writing

2) Opus 4.5 in Cursor for the best greenfield frontend work money can buy - if you're rich

I wonder how it happened

Here's a slice of a 4 HOUR run (~1 second per minute) with not much more than 'keep going' from me every 90 minutes or so.

moonshotai.github.io/Kimi-K2/

The key problems for modern LLM application design that often get overlooked (I think) are:

• Streaming outputs and partial parsing

• Context organization and management (I don't mean summarising at 90%)
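Streaming outputs mean the application has to do something useful with a half-finished response. A minimal sketch of partial parsing, assuming the model streams a single JSON document: balance any open string, object, and array, and if the result still doesn't parse, back off one character at a time. `parse_partial_json` is a hypothetical helper, not a library API.

```python
import json
from typing import Any, Optional


def _close(prefix: str) -> str:
    """Append whatever quote and brackets are needed to balance `prefix`."""
    stack = []          # currently open '{' / '[' in order
    in_string = False
    escaped = False
    for ch in prefix:
        if in_string:
            if escaped:
                escaped = False
            elif ch == "\\":
                escaped = True
            elif ch == '"':
                in_string = False
        elif ch == '"':
            in_string = True
        elif ch in "{[":
            stack.append(ch)
        elif ch in "}]" and stack:
            stack.pop()
    out = prefix
    if in_string:
        if escaped:
            out = out[:-1]  # drop a dangling backslash
        out += '"'
    closers = {"{": "}", "[": "]"}
    return out + "".join(closers[c] for c in reversed(stack))


def parse_partial_json(prefix: str) -> Optional[Any]:
    """Best-effort parse of a truncated JSON document.

    Tries the whole prefix first, then progressively shorter prefixes,
    balancing quotes and brackets each time. Returns None if nothing parses.
    """
    for cut in range(len(prefix), 0, -1):
        try:
            return json.loads(_close(prefix[:cut]))
        except json.JSONDecodeError:
            continue
    return None
```

In a streaming UI this would be called on the accumulated buffer after each chunk. The back-off is quadratic in the worst case, and a truncated number (say `12` on its way to `123`) parses as the shorter value, so fields may still change until the stream ends.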

Most flows today treat natural language as reasoning, code as execution, and structured data as an extraction method. There might be problems with this approach.

Verified on Qwen 3 - a30b (below)

Lots of interesting takeaways from the Random Rewards paper. NOT that RL is dead, but honestly far more interesting than that!
