Writes at https://olickel.com

YMMV though - happy Thanksgiving!

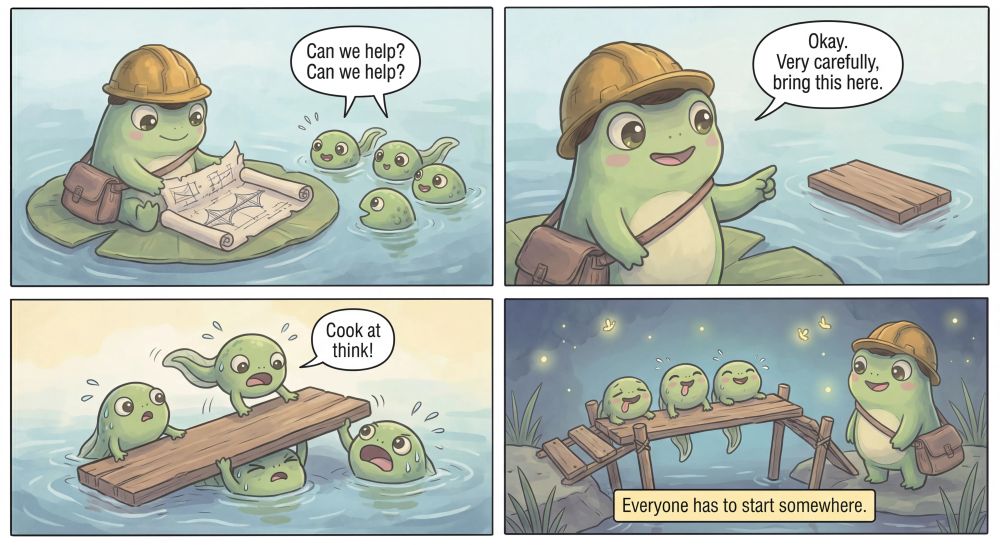

This might be the start of us actually being able to talk to models with images, conveying a lot more than what's been possible before.

1. Background - writing, reasoning, definitions, etc.

2. Primary Task - what's the overarching objective?

3. Audience - who is this for? What is the intended outcome?

Then come the more specific parts:

5. Ideas - in this case, the characters themselves

6. Guidelines - for us, that's style guidelines
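The prompt structure above can be sketched as a small template builder that goes from broad sections to specific ones. This is a minimal sketch, not the author's actual prompt: the `PromptSpec` class, its field names, and the markdown-style section headers are hypothetical, and item 4 is left out because the source doesn't list it.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the prompt sections listed above."""

    background: str    # writing, reasoning, definitions, etc.
    primary_task: str  # the overarching objective
    audience: str      # who it's for and the intended outcome
    # More specific parts (item 4 is not listed in the source, so it is omitted here).
    ideas: list[str] = field(default_factory=list)       # e.g. the characters themselves
    guidelines: list[str] = field(default_factory=list)  # e.g. style guidelines

    def render(self) -> str:
        """Join the sections, broad to specific, into one prompt string.

        Optional sections are skipped entirely when they are empty.
        """
        parts = [
            f"## Background\n{self.background}",
            f"## Primary Task\n{self.primary_task}",
            f"## Audience\n{self.audience}",
        ]
        if self.ideas:
            parts.append("## Ideas\n" + "\n".join(f"- {i}" for i in self.ideas))
        if self.guidelines:
            parts.append("## Guidelines\n" + "\n".join(f"- {g}" for g in self.guidelines))
        return "\n\n".join(parts)


spec = PromptSpec(
    background="A short comic about a cat who hates Mondays.",
    primary_task="Draw a four-panel strip.",
    audience="General readers; it should land the joke in the last panel.",
    ideas=["Milo, an orange cat"],
    guidelines=["Flat colors, thick outlines"],
)
print(spec.render())
```

Keeping each section as its own field makes it easy to edit one part (say, the guidelines) between rounds without rewriting the rest.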

Go to Opus with the results, ask for updated specs (or addenda), and go back to Nano with the new specs.

Generate from specs ↠ Render ↠ Critique ↠ Edit specs ↠ Regenerate works extremely well with Opus. For fun, you can also throw in Nano to generate out-there-undesignable-but-cool frontends to remix from.
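The Generate ↠ Render ↠ Critique ↠ Edit specs ↠ Regenerate loop can be sketched as a plain control loop. This is a minimal sketch, assuming each step is a function you supply: `generate` would wrap Nano (or whatever generates the frontend), `render` would produce a screenshot, and `critique` and `edit_spec` would wrap Opus. All of these names and the `max_rounds` cap are hypothetical; no real model API is called here.

```python
from typing import Callable, Optional


def refine_loop(
    spec: str,
    generate: Callable[[str], str],                   # hypothetical: spec -> artifact (frontend code, image prompt, ...)
    render: Callable[[str], bytes],                   # hypothetical: artifact -> screenshot bytes
    critique: Callable[[bytes, str], Optional[str]],  # hypothetical: screenshot + spec -> feedback, or None if acceptable
    edit_spec: Callable[[str, str], str],             # hypothetical: spec + feedback -> updated spec (or spec + addendum)
    max_rounds: int = 5,
) -> tuple[str, str]:
    """Run up to max_rounds critique cycles and return (final_spec, final_artifact).

    If the round cap is hit, the last regenerated artifact is returned without
    another critique.
    """
    artifact = generate(spec)
    for _ in range(max_rounds):
        feedback = critique(render(artifact), spec)
        if feedback is None:
            break  # the critic is satisfied; stop iterating
        # Fix the spec, then regenerate from it instead of patching the artifact directly.
        spec = edit_spec(spec, feedback)
        artifact = generate(spec)
    return spec, artifact
```

Regenerating from the edited spec, rather than patching the previous output, keeps the spec as the single source of truth between rounds.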

Sorry - next strip coming up!

Sorry - next strip coming up!

Turns out Opus is now miles ahead of even Gemini at visual understanding. It can pick out and critique emotional impact while also noticing elements that are 10 pixels out of place.

Antigravity: antigravity.google/

Claude UI: claude.ai

Cline: cline.bot/

Claude Code: www.claude.com/product/cla...

Codex: developers.openai.com/codex/cli/

Sambot: chat.com

V0: v0.dev

Mandark: github.com/hrishioa/ma...

• Gemini 3.0 in Antigravity is really good at one-shotting frontends with complex functionality!

It goes without saying that I haven't tested everything, and anything not mentioned is either unknown or gave weird results *for me*! Your mileage may vary :)

4) Gemini CLI with Gemini 3.0 (only Gemini 3) for long refactors and edits

5) Sonnet 4.5 (1M context) in Cline for long, complex tasks where you already have a good plan

7) Codex for placebo testing

8) Claude UI with Sonnet (or GPT-5 on chat[dot]com) as a Google/Perplexity replacement

9) V0 for frontend if you already have assets in Figma

11) Nano Banana Pro for image generation and diagrams, with Flux 2 a distant second for photo work

12) Firecrawl MCP connected to almost all of these for bulk web searching