Orpheus Lummis

@orpheuslummis.info

Advancing AI safety through convenings, coordination, software

https://orpheuslummis.info, based in Montréal

Reposted by Orpheus Lummis

Extremely excited to launch this report; the second report from World Internet Conference's International AI Governance Programme that I co-Chair with Yi Zeng. It goes further than any similar report I've seen in recommending robust governance interventions 1/4

www.wicinternet.org/pdf/Advancin...

www.wicinternet.org

November 11, 2025 at 2:04 PM

Reposted by Orpheus Lummis

I’m thrilled to share that I’ve been helping out my brother David who is starting a new org, Evitable.com, focused on informing and organizing the public around societal-scale risks and harms of AI, and countering industry narratives of AI inevitability and acceleration! 1/n

Evitable

Evitable.com

October 29, 2025 at 5:31 PM

Reposted by Orpheus Lummis

They’re here! 🎉 After months of rigorous evaluations, our 2025 Charity Recommendations are out! Learn more about the organizations that can do the most good for animals with additional donations at https://bit.ly/2025-charity-recs 🙌🐥 Together, we’re helping people help more animals. 💙

November 4, 2025 at 6:53 PM

Montréal event on the International AI Safety Report, First Key Update: Capabilities and Risk Implications

Tuesday Nov 4, 7PM

RSVP: luma.com/09j4095g

October 30, 2025 at 9:12 PM

Reposted by Orpheus Lummis

This workshop follows one we ran in July, adding optional specialized talks, and light moderation in the breakout sessions. To see how that one went, and videos of the talks, see this thread:

www.lesswrong.com/posts/csdn3e...

Summary of our Workshop on Post-AGI Outcomes — LessWrong

Last month we held a workshop on Post-AGI outcomes. This post is a list of all the talks, with short summaries, as well as my personal takeaways. …

www.lesswrong.com

October 28, 2025 at 10:06 PM

thanks X25519MLKEM768

October 24, 2025 at 8:11 PM

We call for a prohibition on the development of superintelligence, not lifted before there is

- broad scientific consensus that it will be done safely and controllably, and

- strong public buy-in.

superintelligence-statement.org

Statement on Superintelligence

“We call for a prohibition on the development of superintelligence, not lifted before there is (1) broad scientific consensus that it will be done safely and controllably, and (2) strong public bu...

superintelligence-statement.org

October 22, 2025 at 10:11 AM

AI safety coworking spaces:

- LISA (London)

- FAR Labs (Berkeley)

- Constellation (Berkeley)

- SASH (Singapore)

- Mox (SF)

- Trajectory Labs (Toronto)

- Meridian (Cambridge)

- CEEALAR (Blackpool)

- SAISS (Sydney)

- PEAKS (Zurich)

- AISCT (Cape Town)

- Monoid (Moscow)

Let's have one for Montréal?

October 21, 2025 at 3:56 PM

Reposted by Orpheus Lummis

AI is evolving too quickly for an annual report to suffice. To help policymakers keep pace, we're introducing the first Key Update to the International AI Safety Report. 🧵⬇️

(1/10)

October 15, 2025 at 10:49 AM

Tonight the Montréal AI safety meetup is on the Global Call for AI Red Lines luma.com/vjgi2npr

My slides: docs.google.com/presentation...

Global Call for AI Red Lines · Luma

EN:

At UNGA-80 this September, Nobel laureates, former heads of state, AI pioneers like Yoshua Bengio, and leaders from across diplomacy, human rights, and…

luma.com

October 14, 2025 at 9:57 PM

Reposted by Orpheus Lummis

Our work, purpose, and mission are now more clearly expressed on our updated website.

www.horizonomega.org

HΩ

Reducing risks from AI through collaboration, research, and education

www.horizonomega.org

October 7, 2025 at 4:56 PM

Give your input towards Canada's renewed AI strategy.

> Canada is running a national sprint to shape a renewed AI strategy. Tell us where Canada should focus.

> The consultation is open from October 1 to October 31.

ised-isde.canada.ca/site/ised/en...

Help define the next chapter of Canada's AI leadership

Current status: Open from October 1 to October 31, 2025

Canada helped invent modern AI. To stay a leader—and protect our digital sovereignty—we're running a 30-day national sprint to shape a renewed...

ised-isde.canada.ca

October 3, 2025 at 8:03 PM

Demanding that governments establish verifiable prohibitions on the most dangerous AI uses and behaviors (e.g., lethal autonomy, enablement of weapons of mass destruction, self-replicating systems). Seeking international agreement with enforcement mechanisms by the end of 2026.

red-lines.ai

200+ prominent figures endorse Global Call for AI Red Lines

AI could soon far surpass human capabilities and escalate risks such as engineered pandemics, widespread disinformation, large-scale manipulation of individuals including children...

red-lines.ai

September 24, 2025 at 12:28 PM

In Montréal and interested in AI safety, gov, and ethics?

You may find it interesting to subscribe to the Montréal AI safety, ethics, and governance events calendar.

luma.com/montreal-ai-....

Montréal AI safety, ethics, and governance · Events Calendar

View and subscribe to events from Montréal AI safety, ethics, and governance on Luma. Montréal community of researchers, builders, policymakers, and lively persons interested in advancing AI safety, e...

luma.com

September 23, 2025 at 12:15 PM

Happy equinox! 🌞

September 22, 2025 at 11:11 AM

Next Guaranteed Safe AI Seminar:

Model-Based Soft Maximization of Suitable Metrics of Long-Term Human Power – Jobst Heitzig

Thursday, October 9, 1 PM EDT

RSVP: luma.com/susn7zfs

Model-Based Soft Maximization of Suitable Metrics of Long-Term Human Power – Jobst Heitzig · Zoom · Luma

Model-Based Soft Maximization of Suitable Metrics of Long-Term Human Power

Jobst Heitzig – Senior Mathematician AI Safety Designer

Power is a key concept in AI…

luma.com

September 18, 2025 at 8:53 AM

Upcoming on the Montréal AI safety, ethics, and governance meetup:

Verifying a toy neural network, by Samuel Gélineau.

Thu Oct 2, 7 PM.

RSVP: luma.com/bc8rlwxr

Verifying a toy neural network · Luma

Samuel Gélineau will present his AI Safety side project, gelisam.com/parity-bot, which demonstrates that it is possible to verify that a neural network…

luma.com

September 17, 2025 at 3:33 PM

Are you in Montréal, interested in AI safety? Join our meetup Tuesday Sep 16, 7PM. I'll be presenting the paper Towards Guaranteed Safe AI (arxiv.org/abs/2405.06624).

RSVP luma.com/exh4xs42

Towards Guaranteed Safe AI · Luma

Orpheus will present the core ideas from Towards Guaranteed Safe AI: A Framework for Ensuring Robust and Reliable AI Systems for ~half hour, then we will have…

luma.com

September 10, 2025 at 9:19 PM

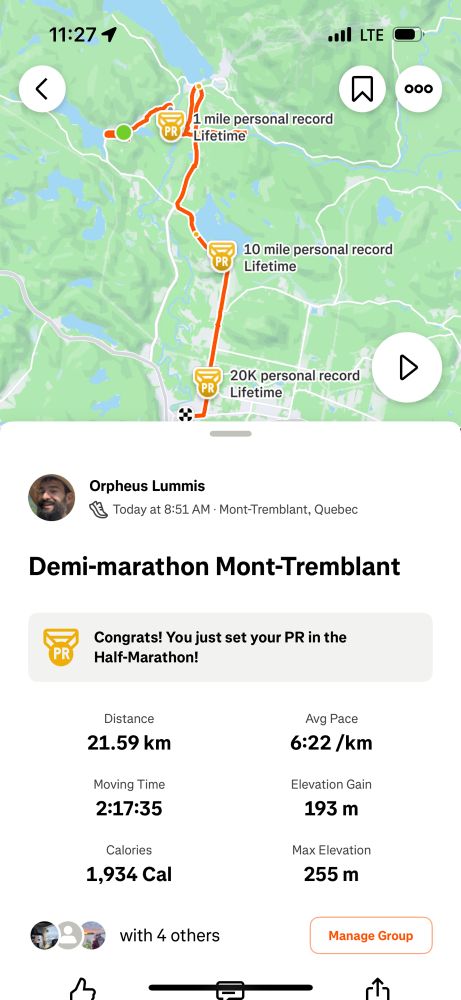

Celebrating my first half marathon 🏃!

August 10, 2025 at 5:46 PM

Reposted by Orpheus Lummis

Today I’m releasing an essay series called Better Futures.

It’s been something like eight years in the making, so I’m pretty happy it’s finally out!

It asks: when looking to the future, should we focus on surviving, or on flourishing?

August 4, 2025 at 2:13 PM

Reposted by Orpheus Lummis

Guaranteed Safe AI Seminars, August 2025 edition:

Towards Safe and Hallucination-Free Coding AIs, by GasStationManager (Independent Researcher)

On August 7, 13:00 EDT. To join: lu.ma/6zpndj0w

Towards Safe and Hallucination-Free Coding AIs – GasStationManager · Zoom · Luma

Towards Safe and Hallucination-Free Coding AIs

GasStationManager – Independent Researcher

Modern LLM-based AIs have exhibited great coding abilities, and have…

lu.ma

July 22, 2025 at 12:21 PM

New community hub listing AI safety efforts and meetups in Montréal!

Browse the directory & get involved.

👉 aisafetymontreal.org

AI Safety Montréal – Community Directory

Comprehensive directory of AI safety, ethics, and governance organizations in Montréal. Connect with research institutes, student groups, and meetups.

aisafetymontreal.org

July 4, 2025 at 1:50 PM

In Montréal Tuesday June 17 evening? Join us for a Moral Parliament Giving Game, where participants represent different ethical frameworks (utilitarian, rights-based, virtue ethics, etc.) and collectively negotiate how to allocate a donation to an effective charity.

www.meetup.com/altruismeeff...

Moral Parliament Giving Game, Tue, Jun 17, 2025, 7:00 PM | Meetup

We are continuing our effective altruism meetups on a monthly basis. The meetups are bilingual, but since the material is in English, the description of the...

www.meetup.com

June 10, 2025 at 9:45 AM

Reposted by Orpheus Lummis

Today marks a big milestone for me. I'm launching @law-zero.bsky.social, a nonprofit focusing on a new safe-by-design approach to AI that could both accelerate scientific discovery and provide a safeguard against the dangers of agentic AI.

Every frontier AI system should be grounded in a core commitment: to protect human joy and endeavour. Today, we launch LawZero, a nonprofit dedicated to advancing safe-by-design AI. lawzero.org

June 3, 2025 at 10:20 AM
