Paul Gavrikov

@paulgavrikov.bsky.social

42 followers

62 following

48 posts

PostDoc Tübingen AI Center | Machine Learning & Computer Vision

paulgavrikov.github.io

Reposted by Paul Gavrikov

Paul Gavrikov

@paulgavrikov.bsky.social

· Apr 24

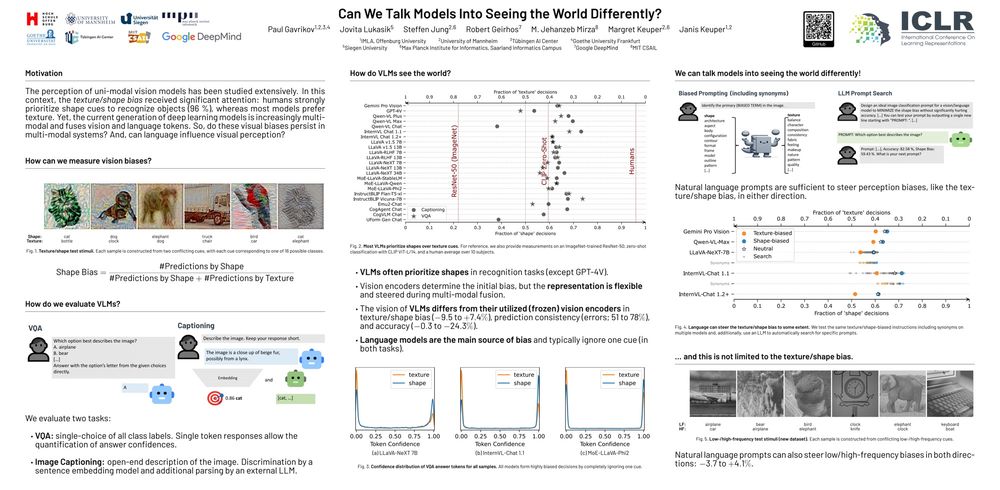

Paul Gavrikov

@paulgavrikov.bsky.social

· Apr 24