Pausal Zivference

@pausalz.bsky.social

2.2K followers

570 following

830 posts

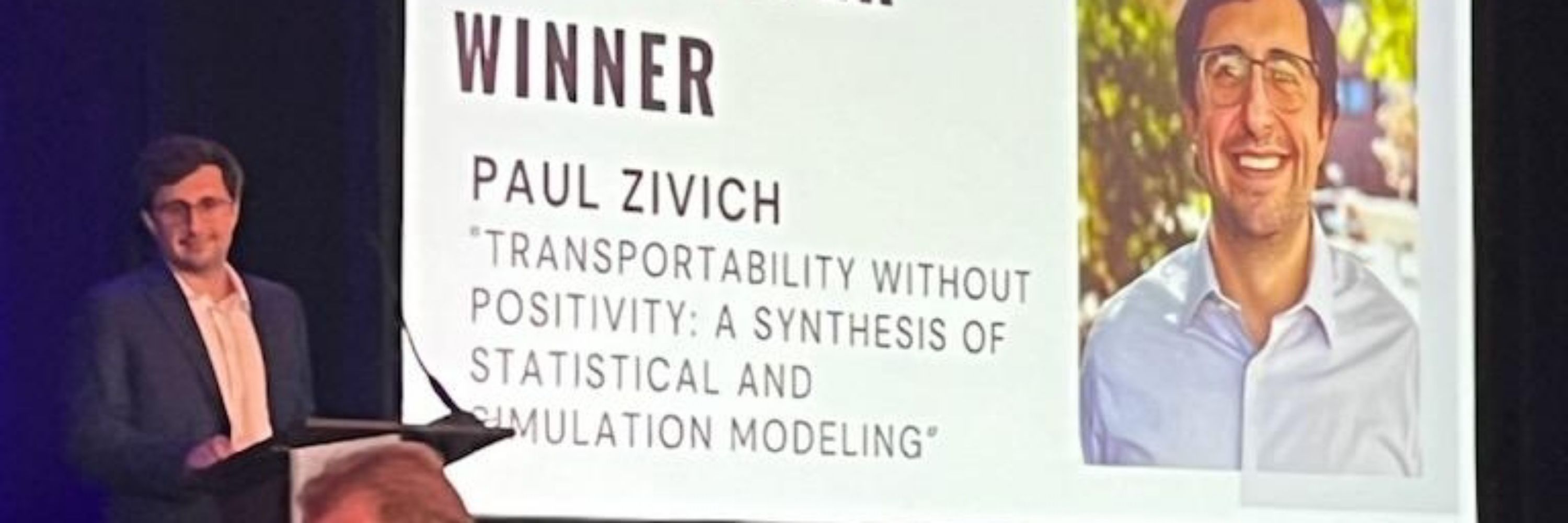

Paul Zivich, Assistant (to the Regional) Professor

Computational epidemiologist, causal inference researcher, amateur mycologist, and open-source enthusiast.

https://github.com/pzivich

#epidemiology #statistics #python #episky #causalsky
