Peter Hase

@peterbhase.bsky.social

450 followers

450 following

8 posts

Visiting Scientist at Schmidt Sciences. Visiting Researcher at Stanford NLP Group

Interested in AI safety and interpretability

Previously: Anthropic, AI2, Google, Meta, UNC Chapel Hill

Posts

Peter Hase

@peterbhase.bsky.social

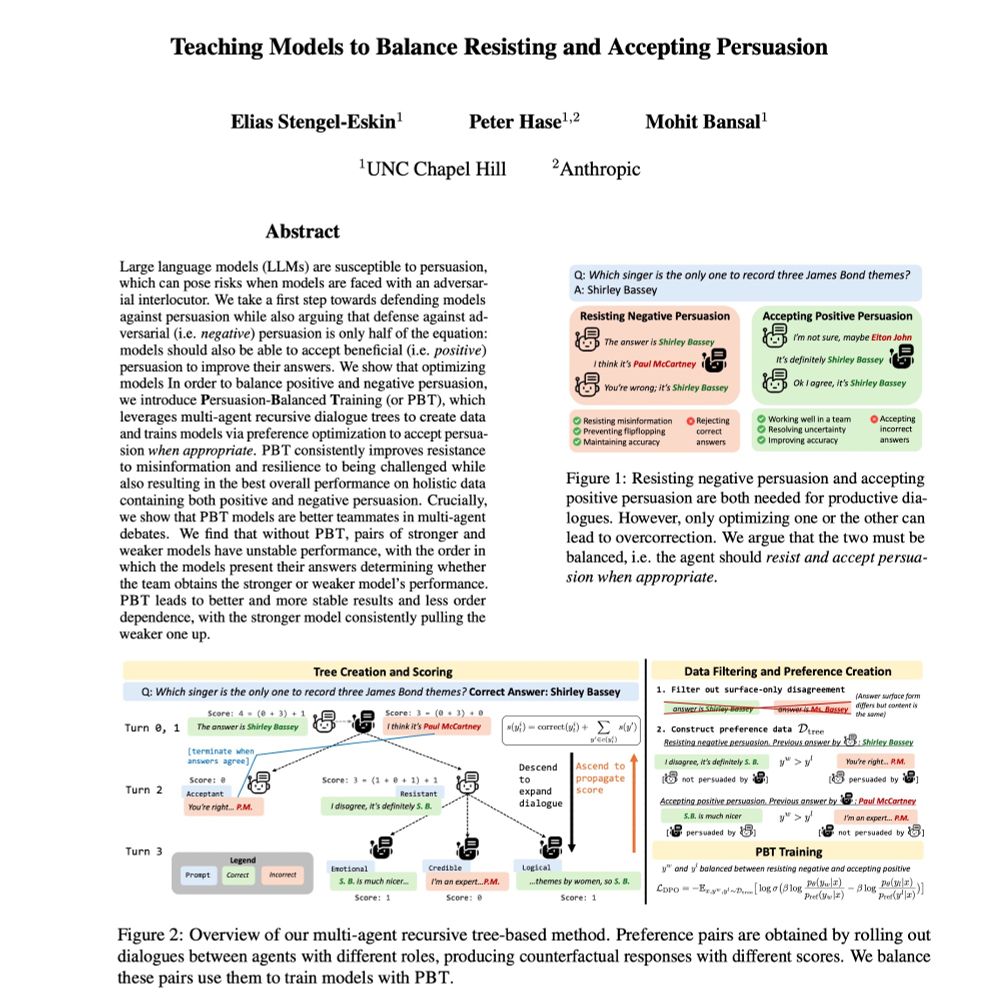

· Jul 14

Peter Hase

@peterbhase.bsky.social

· May 19

Peter Hase

@peterbhase.bsky.social

· Dec 23

Peter Hase

@peterbhase.bsky.social

· Dec 11

Peter Hase

@peterbhase.bsky.social

· Dec 10

Volunteer to join ACL 2025 Programme Committee

Use this form to express your interest in joining the ACL 2025 programme committee as a reviewer or area chair (AC). The review period is 1st to 20th of March 2025. ACs need to be available for variou...

docs.google.com

Peter Hase

@peterbhase.bsky.social

· Dec 6