I post about AI News, Open Models, Interesting AI Paper Summaries, blog posts, and guides!

My blog is at www.philschmid.de

Make sure to follow! 🤗

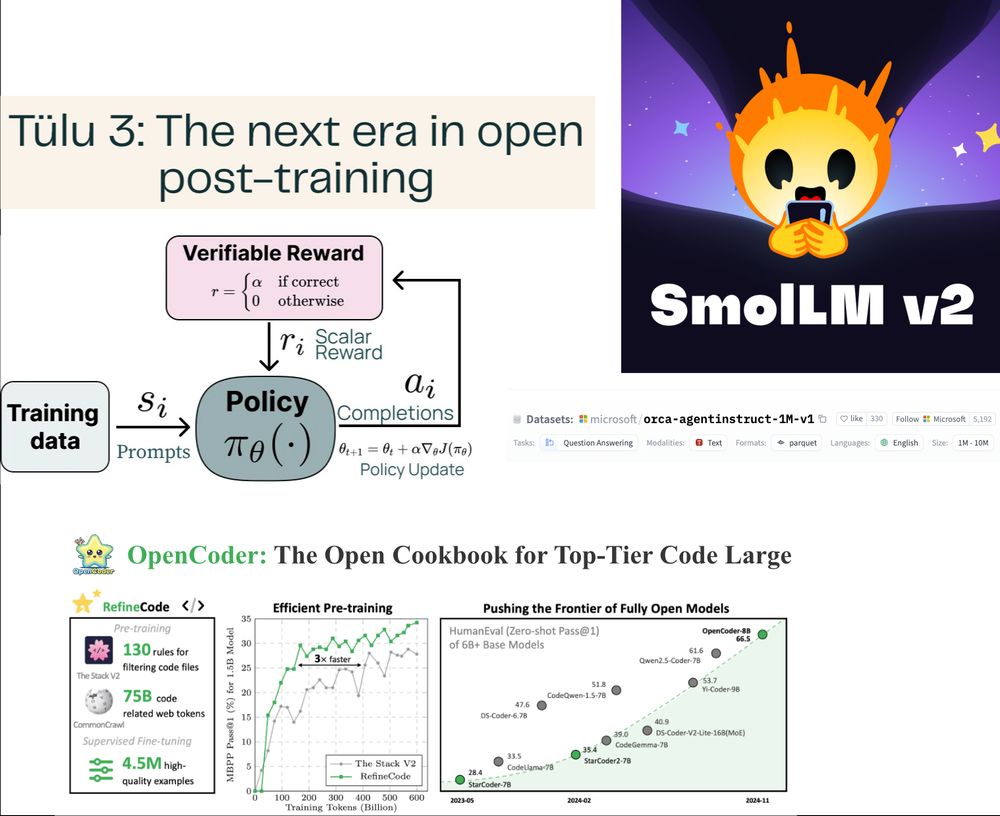

Paper: huggingface.co/papers/2410....

For now, it supports Llama. Which one would you want to see next?

SQLite is all you need! Big sqlite-vec update! 🚀 sqlite-vec is a plugin to support Vector Search in SQLite or LibSQL databases. v0.1.6 now allows storing non-vector data in vec0 virtual tables, enabling metadata conditioning and filtering! 🤯
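
To make "metadata filtering" concrete, here is a minimal sketch of the idea using only Python's standard-library `sqlite3`. It does not use sqlite-vec or its `vec0` tables: the `items` table, the `category` column, and the `filtered_knn` helper are all hypothetical names for illustration, and the nearest-neighbor step is a brute-force stand-in for what the extension would do inside the database.

```python
import json
import math
import sqlite3


def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def filtered_knn(db, query, category, k):
    """Return the ids of the k rows closest to `query`, restricted to one category."""
    # The metadata filter runs in SQL, so only matching rows are scored.
    rows = db.execute(
        "SELECT id, embedding FROM items WHERE category = ?", (category,)
    ).fetchall()
    # Brute-force distance computation in Python (assumption: tiny dataset).
    scored = sorted((l2_distance(query, json.loads(emb)), row_id) for row_id, emb in rows)
    return [row_id for _, row_id in scored[:k]]


db = sqlite3.connect(":memory:")
# Hypothetical schema: the vector is stored as JSON text next to a metadata column.
db.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, embedding TEXT, category TEXT)")
docs = [
    (1, [0.0, 0.0], "news"),
    (2, [1.0, 1.0], "paper"),
    (3, [0.1, 0.1], "paper"),
    (4, [0.2, 0.0], "news"),
]
db.executemany(
    "INSERT INTO items VALUES (?, ?, ?)",
    [(row_id, json.dumps(emb), cat) for row_id, emb, cat in docs],
)

nearest_papers = filtered_knn(db, [0.0, 0.0], "paper", k=1)
print(nearest_papers)
```

Row 1 is the closest vector overall, but it is tagged `news`, so the filtered search skips it. That is the behavior you want from metadata filtering: the condition narrows the candidate set, and the vector search ranks what is left.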

SmolTalk: a 1M sample synthetic dataset used to train SmolLM v2 is here! Available under Apache 2.0, it combines newly generated datasets + publicly available ones.

Here’s what you need to know 🧵👇

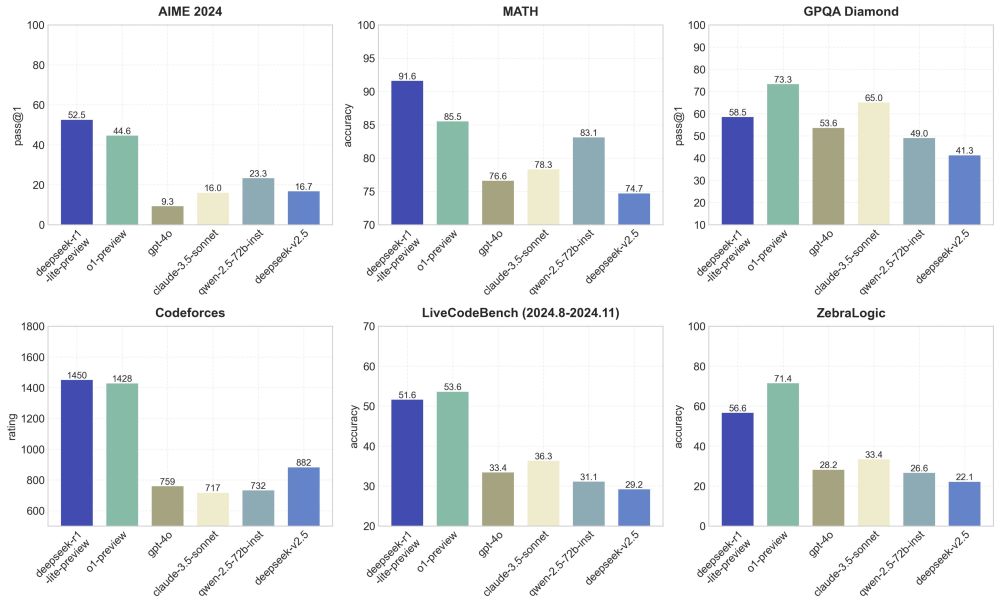

With R1, "thoughts" are streamed and no MCTS is used during inference. They must have baked the "search" and "backtracking" directly into the model. huggingface.co/papers/2404....

> o1-preview-level performance on AIME & MATH benchmarks.

> Access to CoT and transparent thought process in real-time.

> Open-source models & API coming soon!

> 3x speedup over FlashAttention2 while maintaining 99% performance

> INT4/8 for Q and K matrices, and FP8/16 for P and V + smoothing methods for Q and V

> Drop-in replacement of torch scaled_dot_product_attention

> SageAttention 2 code to be released soon
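
As a rough illustration of the "INT8 for Q and K" point, here is a generic sketch of symmetric INT8 quantization applied to the attention scores Q·Kᵀ. This is not SageAttention's kernel: the post gives no implementation details, so the per-tensor scaling and the helper names (`quantize_int8`, `quantized_scores`) are assumptions, and the smoothing, INT4, and FP8/16 parts are left out entirely.

```python
def quantize_int8(matrix):
    """Symmetric per-tensor INT8 quantization: returns (integer matrix, scale)."""
    max_abs = max(abs(x) for row in matrix for x in row)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [
        [max(-127, min(127, round(x / scale))) for x in row] for row in matrix
    ]
    return quantized, scale


def matmul_transposed(a, b):
    """Compute a @ b^T for matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row_a, row_b)) for row_b in b] for row_a in a]


def quantized_scores(q, k):
    """Approximate q @ k^T using integer products, then rescale back to floats."""
    q_int, q_scale = quantize_int8(q)
    k_int, k_scale = quantize_int8(k)
    int_scores = matmul_transposed(q_int, k_int)  # pure integer arithmetic
    return [[s * q_scale * k_scale for s in row] for row in int_scores]


# Toy inputs (made up for illustration): 2 queries, 2 keys, head dimension 3.
Q = [[0.5, -1.0, 0.25], [1.5, 0.75, -0.5]]
K = [[1.0, 0.5, -0.25], [-0.75, 2.0, 0.1]]

exact = matmul_transposed(Q, K)
approx = quantized_scores(Q, K)
max_error = max(
    abs(e - a) for row_e, row_a in zip(exact, approx) for e, a in zip(row_e, row_a)
)
print(max_error)
```

The point of the sketch is only that the expensive matrix product can run on low-precision integers while the result stays close to the float version. How close it stays on real models is what the "99% performance" claim above is about.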
