Samuel Liebana

@samuel-liebana.bsky.social

55 followers

52 following

8 posts

Research Fellow at the Gatsby Unit, UCL

Q: How do we learn?

Reposted by Samuel Liebana

Armin Lak

@laklab.bsky.social

· 19d

A hardwired neural circuit for temporal difference learning

The neurotransmitter dopamine plays a major role in learning by acting as a teaching signal to update the brain's predictions about rewards. A leading theory proposes that this process is analogous to...

www.biorxiv.org

Reposted by Samuel Liebana

Blake Richards

@tyrellturing.bsky.social

· Jul 10

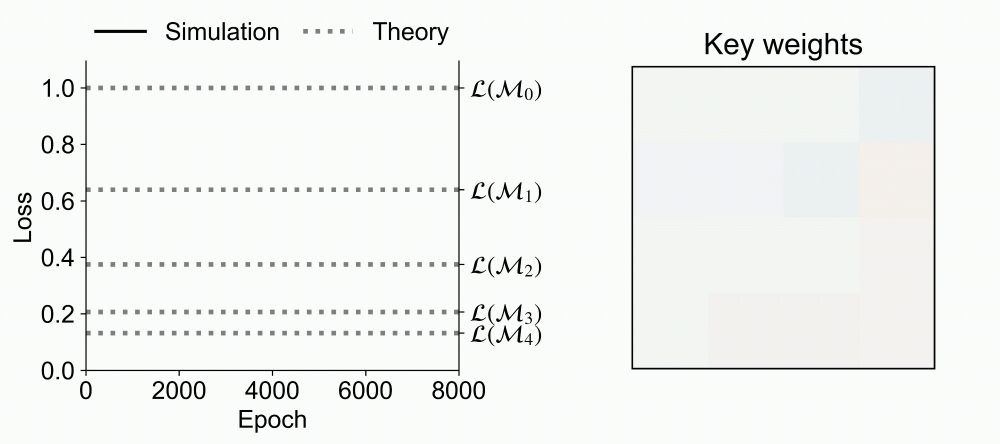

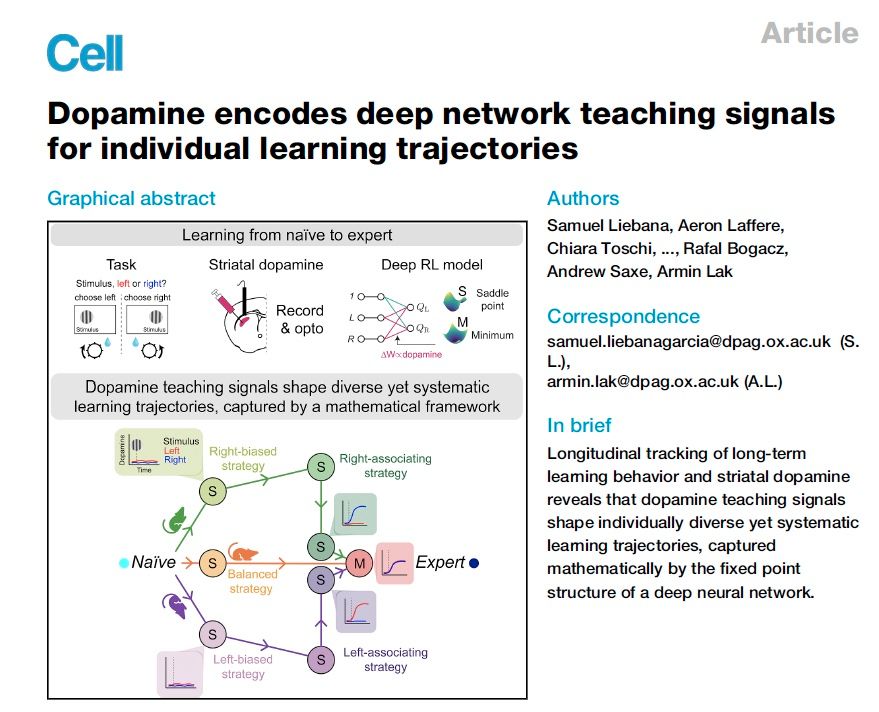

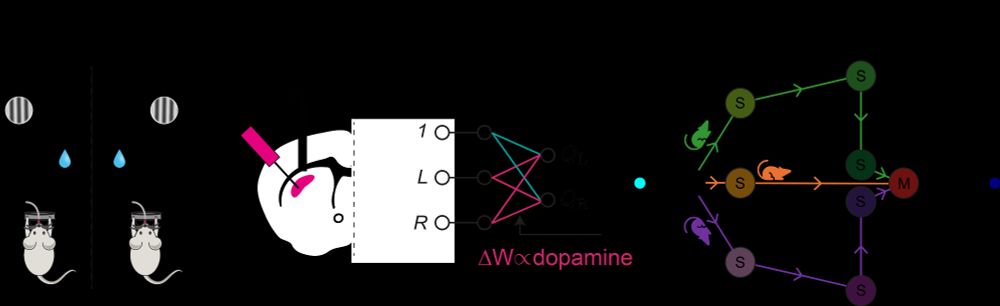

Dopamine encodes deep network teaching signals for individual learning trajectories

Longitudinal tracking of long-term learning behavior and striatal dopamine reveals that dopamine teaching signals shape individually diverse yet systematic learning trajectories, captured mathematical...

www.cell.com
