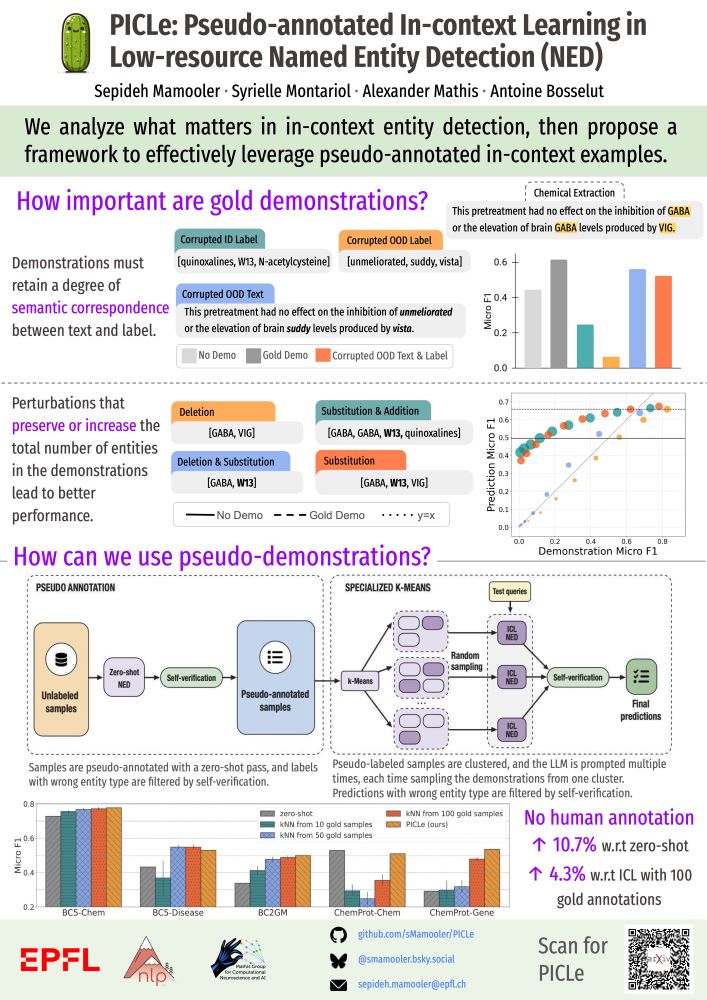

Sepideh Mamooler@ACL🇦🇹

@smamooler.bsky.social

39 followers

44 following

14 posts

PhD Candidate at @icepfl.bsky.social | Ex-Research Intern at Google DeepMind

👩🏻‍💻 Working on multimodal AI reasoning models in scientific domains

https://smamooler.github.io/

Reposted by Sepideh Mamooler@ACL🇦🇹

Debjit Paul

@debjit-paul.bsky.social

May 1

Reposted by Sepideh Mamooler@ACL🇦🇹

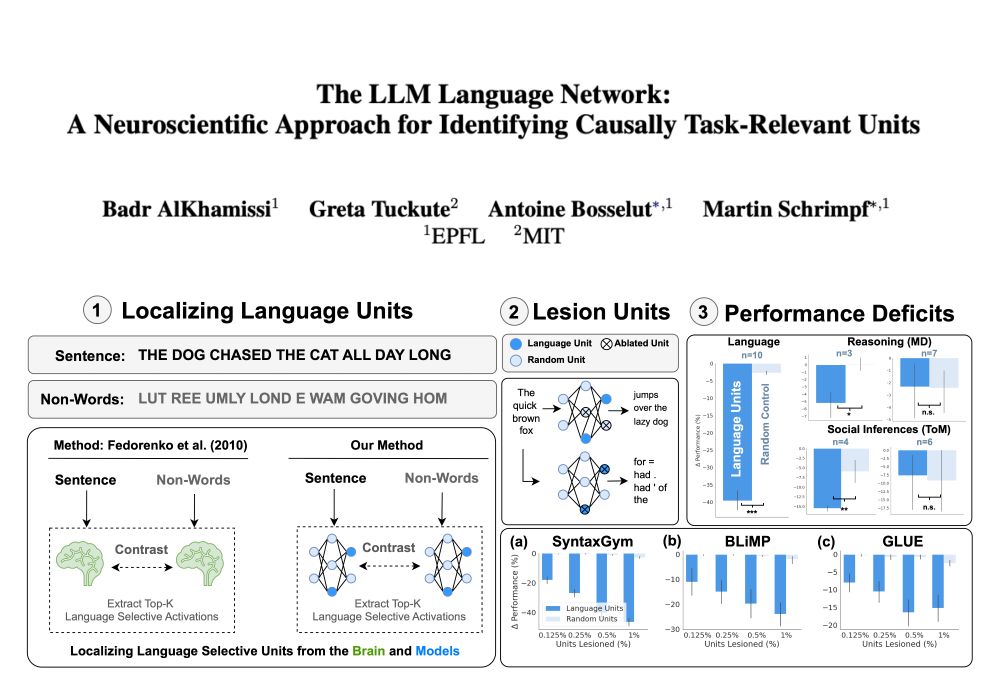

Antoine Bosselut

@abosselut.bsky.social

Feb 25

Reposted by Sepideh Mamooler@ACL🇦🇹