* Faculty position, Chulalongkorn University, Thailand

* Postdoc, EPFL, Switzerland

* PhD, CQT, Singapore

Also, special thanks to @mvscerezo.bsky.social and Martin Larocca for their valuable insight on correlated Haar random unitaries 🌮

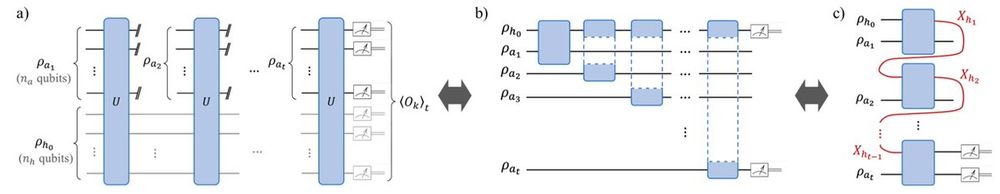

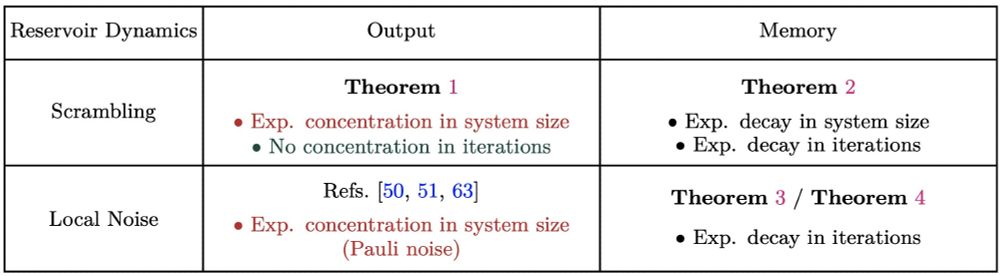

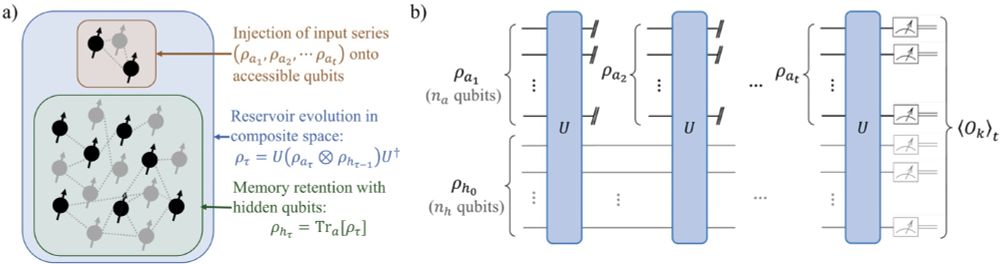

We prove that in extreme-scrambling QRPs, old inputs and initial states are forgotten exponentially fast (in both time steps and system size!). Too much scrambling -> you effectively “MIB”-zap each past input.

🎯 Big scrambling in quantum reservoirs helps at small sizes but kills input-sensitivity at large scale

🎯 Memory of older states decays exponentially (in both time steps and system size!)

🎯 Noise can make us forget even faster

Doomed by their own chaotic dynamics, QRPs may not scale in the extreme-scrambling limit.

Check out our new Star Wa… I mean paper on arxiv: scirate.com/arxiv/2505.1...
