* Faculty position, Chulalongkorn University, Thailand

* Postdoc, EPFL, Switzerland

* PhD, CQT, Singapore

Also, special thanks to @mvscerezo.bsky.social Martin Larocca for their valuable insight on correlated Haar random unitaries 🌮

You want that “just right” level of chaos: enough to get expressive states, not so much that it all washes out.

Not everything is gloom and doom. We found that for moderate scrambling (e.g., shallow random circuits, or chaotic Ising dynamics evolved for a short time), you don’t get lethal exponential concentration.

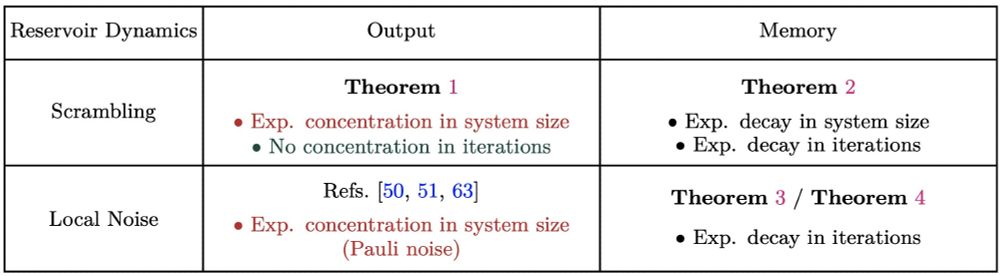

We also studied QRP under local unital or non-unital noise. While some works argue that dissipation is a resource for QRP, we prove that noise also forces your reservoir to forget states from the distant past exponentially quickly.
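
A toy sketch of the forgetting effect, assuming a single qubit rather than the multi-qubit local-noise setting analyzed in the paper: each hypothetical reservoir step applies a fixed unitary `U` (an arbitrary X-rotation chosen only for illustration) followed by a depolarizing channel of strength `p`, one example of unital noise. Unitaries preserve the trace distance between two states, but the depolarizing step shrinks it by a factor `(1 - p)`, so two different initial states become indistinguishable exponentially fast.

```python
import numpy as np

def depolarize(rho, p):
    """Single-qubit depolarizing channel: rho -> (1 - p) * rho + p * I / 2."""
    return (1 - p) * rho + p * np.eye(2) / 2

def trace_distance(rho, sigma):
    """Trace distance 0.5 * ||rho - sigma||_1 (rho - sigma is Hermitian)."""
    return 0.5 * np.sum(np.abs(np.linalg.eigvalsh(rho - sigma)))

def memory_curve(rho0, sigma0, unitary, p, steps):
    """Distinguishability of two initial states after each 'unitary, then noise' step."""
    rho, sigma = rho0, sigma0
    distances = [trace_distance(rho, sigma)]
    for _ in range(steps):
        # Hypothetical toy reservoir step: fixed unitary evolution, then depolarizing noise
        rho = depolarize(unitary @ rho @ unitary.conj().T, p)
        sigma = depolarize(unitary @ sigma @ unitary.conj().T, p)
        distances.append(trace_distance(rho, sigma))
    return np.array(distances)

# Hypothetical toy "reservoir": U = exp(-i * theta * X), a single-qubit rotation
theta = 0.7
U = np.array([[np.cos(theta), -1j * np.sin(theta)],
              [-1j * np.sin(theta), np.cos(theta)]])

# Two orthogonal initial states |0><0| and |1><1| (trace distance 1)
ket0 = np.array([[1, 0], [0, 0]], dtype=complex)
ket1 = np.array([[0, 0], [0, 1]], dtype=complex)

d = memory_curve(ket0, ket1, U, p=0.1, steps=10)
print(np.round(d, 4))
```

For these parameters `d[t]` equals `0.9**t`: the memory of which state the reservoir started in decays geometrically with the number of steps.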

We prove that in extreme-scrambling QRPs, old inputs or initial states get forgotten exponentially fast (in both time steps and system size!). Too much scrambling -> you effectively “MIB”-zap each past input.

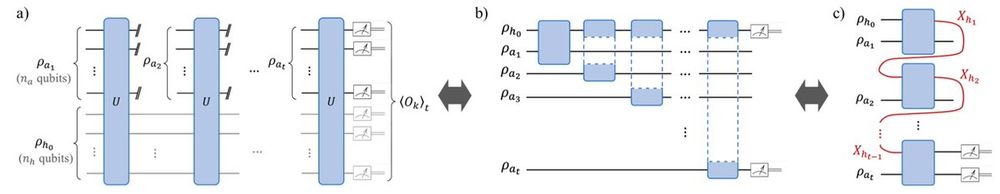

Based on the unrolled form, we prove exponential concentration of the QRP output. At large scale, the trained QRP model becomes input-insensitive, leading to poor generalization despite a trainability guarantee.
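
To see what exponential concentration looks like numerically, here is a minimal sketch that models extreme scrambling as Haar-random states. This is an assumption for illustration, not the QRP proof itself, and the helper names are hypothetical. For a Haar-random n-qubit state, the expectation of Z on one qubit has mean 0 and variance 1/(2^n + 1), so such outputs cluster ever more tightly around the same value as n grows.

```python
import numpy as np

def haar_random_state(dim, rng):
    """Haar-random pure state: a normalized complex Gaussian vector."""
    v = rng.normal(size=dim) + 1j * rng.normal(size=dim)
    return v / np.linalg.norm(v)

def z1_expectation(psi):
    """<psi| Z_1 |psi> for Z acting on the first (most significant) qubit."""
    probs = np.abs(psi) ** 2
    half = len(psi) // 2
    # Basis states with first bit 0 get +1, those with first bit 1 get -1
    return probs[:half].sum() - probs[half:].sum()

def empirical_variance(n_qubits, samples, seed=0):
    """Sample variance of <Z_1> over Haar-random n-qubit states."""
    rng = np.random.default_rng(seed)
    dim = 2 ** n_qubits
    values = [z1_expectation(haar_random_state(dim, rng)) for _ in range(samples)]
    return np.var(values)

for n in range(2, 11, 2):
    var = empirical_variance(n, samples=2000)
    print(f"n={n:2d}  Var[<Z_1>]={var:.2e}  1/(2^n+1)={1 / (2 ** n + 1):.2e}")
```

The empirical variance tracks 1/(2^n + 1), shrinking by roughly 4x for every two qubits added.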

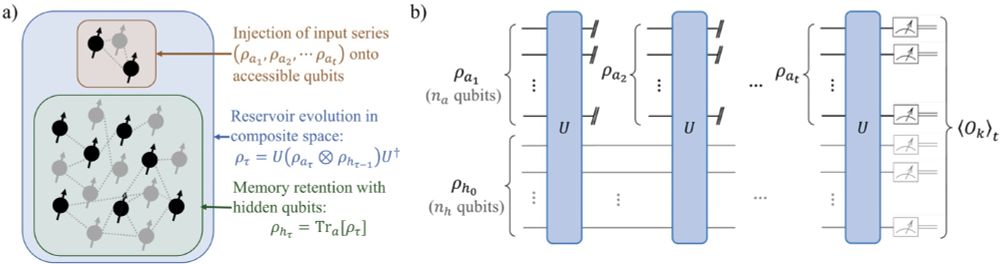

While related techniques already establish scalability barriers for other quantum models, QRP is much more demanding to analyze: a fixed reservoir is repeatedly interleaved with a stream of input time series.

🎯 Big scrambling in quantum reservoirs helps at small sizes but kills input-sensitivity at large scale

🎯 Memory of older states decays exponentially (in both time steps and system size!)

🎯 Noise can make us forget even faster
