Tim Weiland

@timwei.land

390 followers

260 following

15 posts

PhD student @ ELLIS, IMPRS-IS.

Working on physics-informed ML and probabilistic numerics at Philipp Hennig's group in Tübingen.

https://timwei.land

Pinned

Tim Weiland

@timwei.land

· Apr 24

Tim Weiland

@timwei.land

· Mar 17

Tim Weiland

@timwei.land

· Mar 17

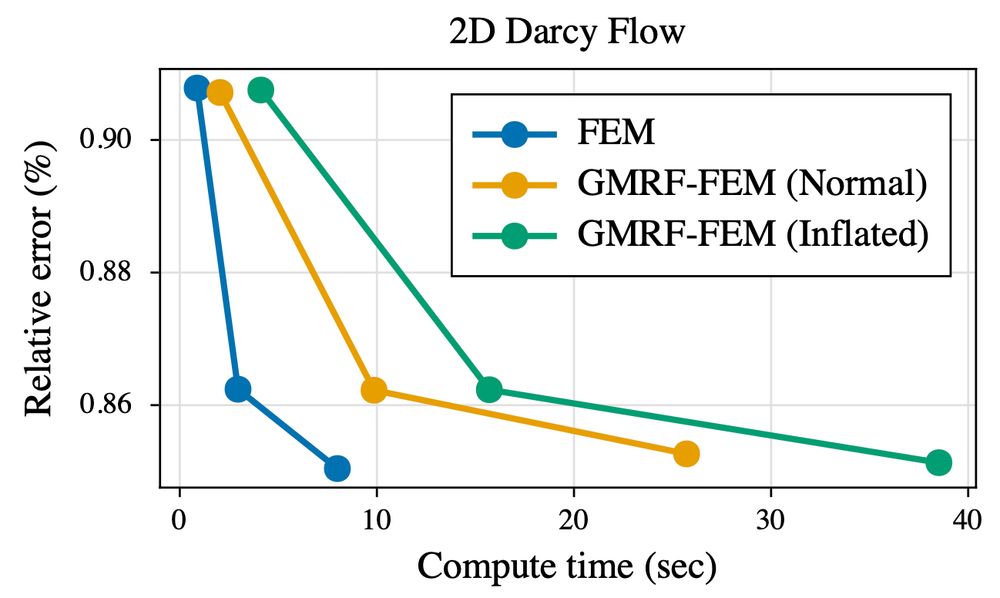

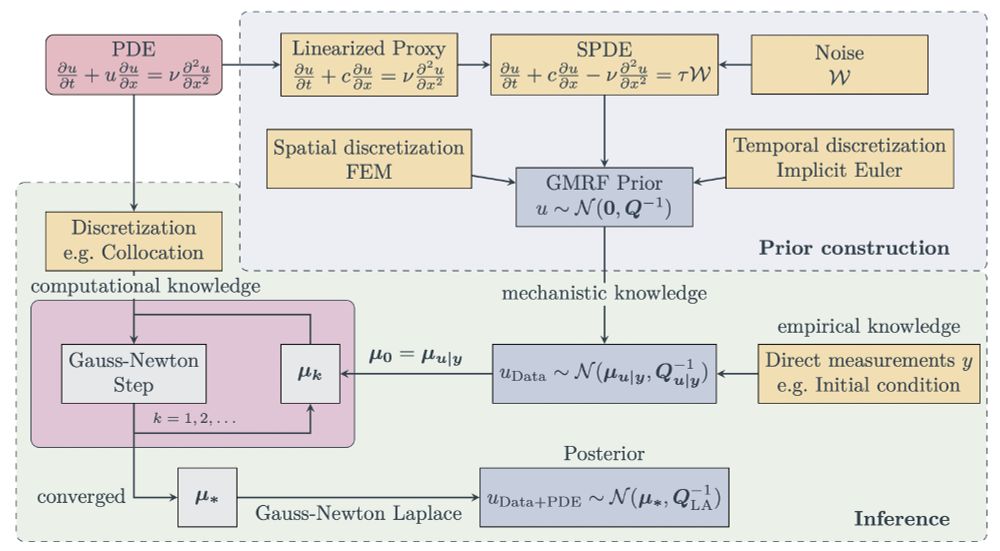

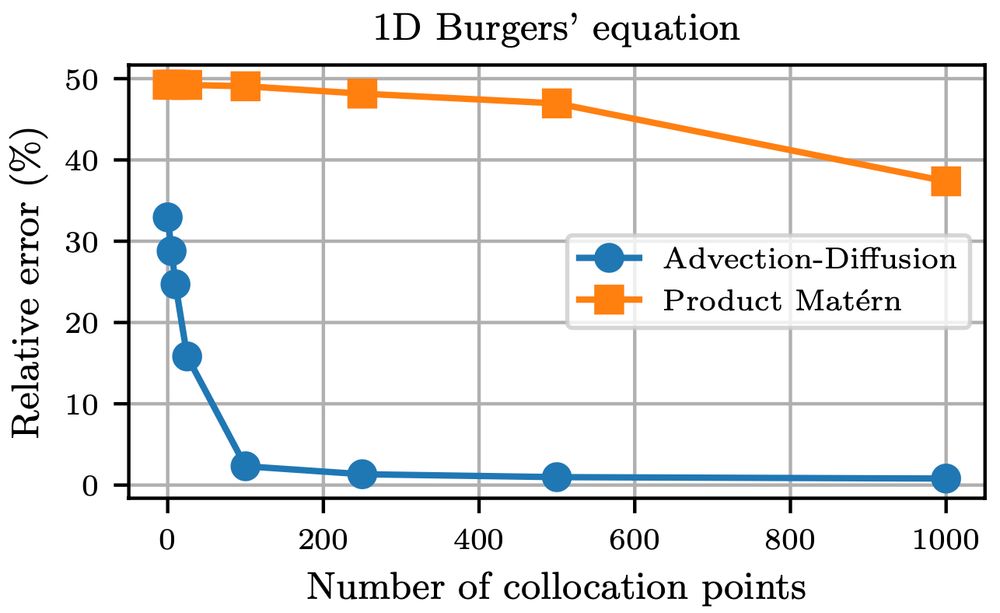

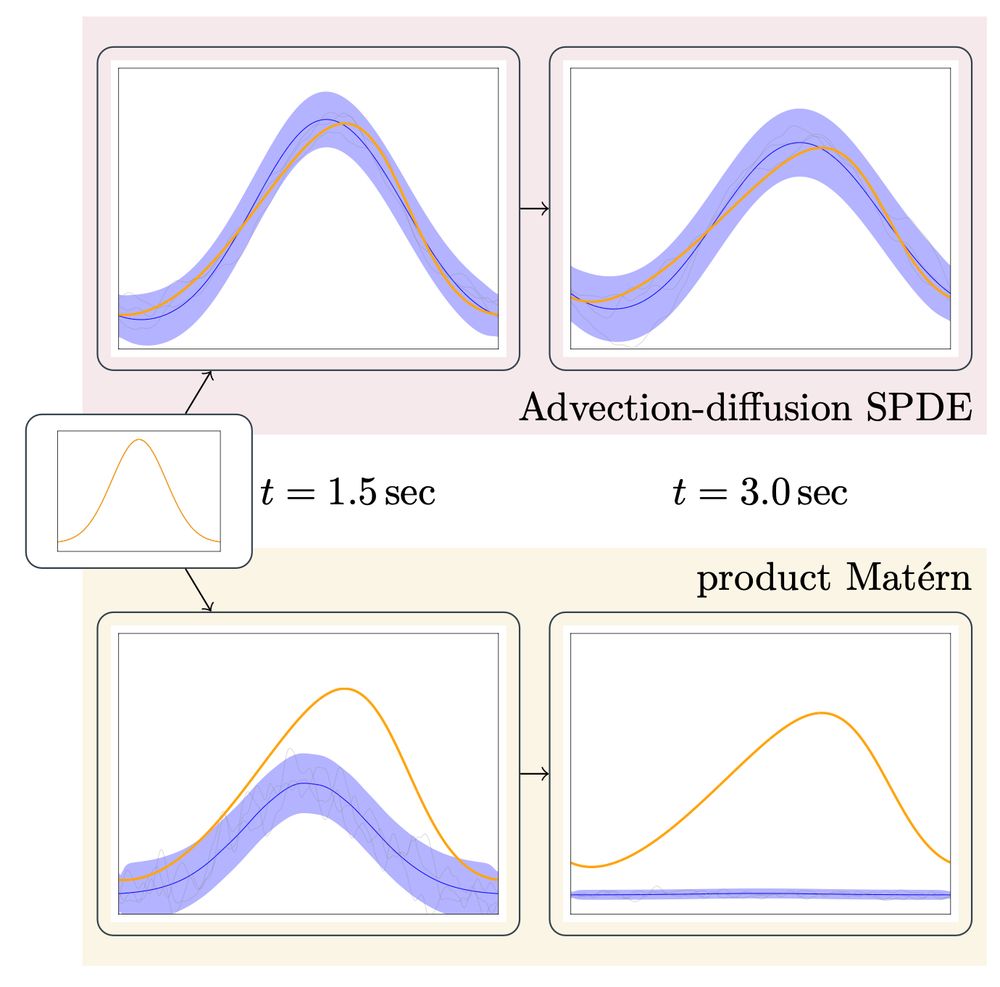

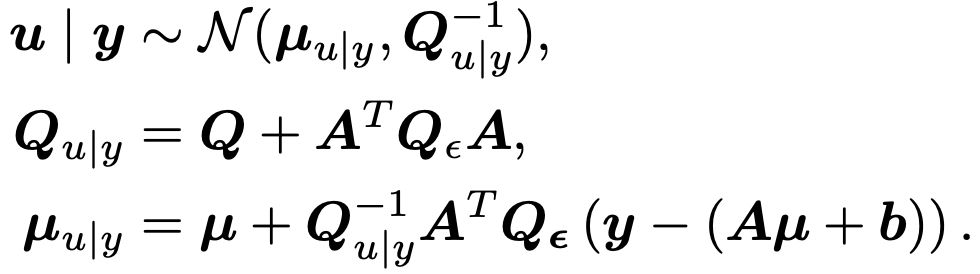

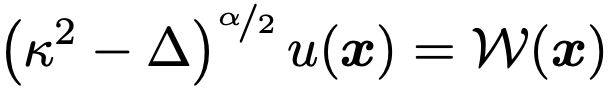

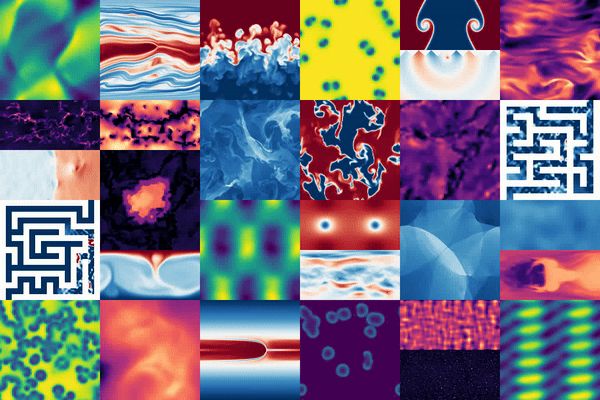

Flexible and Efficient Probabilistic PDE Solvers through Gaussian Markov Random Fields

Mechanistic knowledge about the physical world is virtually always expressed via partial differential equations (PDEs). Recently, there has been a surge of interest in probabilistic PDE solvers -- Bay...

arxiv.org

Tim Weiland

@timwei.land

· Mar 17

Tim Weiland

@timwei.land

· Feb 16

Reparameterization invariance in approximate Bayesian inference

Current approximate posteriors in Bayesian neural networks (BNNs) exhibit a crucial limitation: they fail to maintain invariance under reparameterization, i.e. BNNs assign different posterior densitie...

arxiv.org

Tim Weiland

@timwei.land

· Dec 30

Tim Weiland

@timwei.land

· Nov 19

Tim Weiland

@timwei.land

· Nov 19