Toviah Moldwin

@tmoldwin.bsky.social

290 followers

350 following

340 posts

Computational neuroscience: Plasticity, learning, connectomics.

Toviah Moldwin

@tmoldwin.bsky.social

· May 3

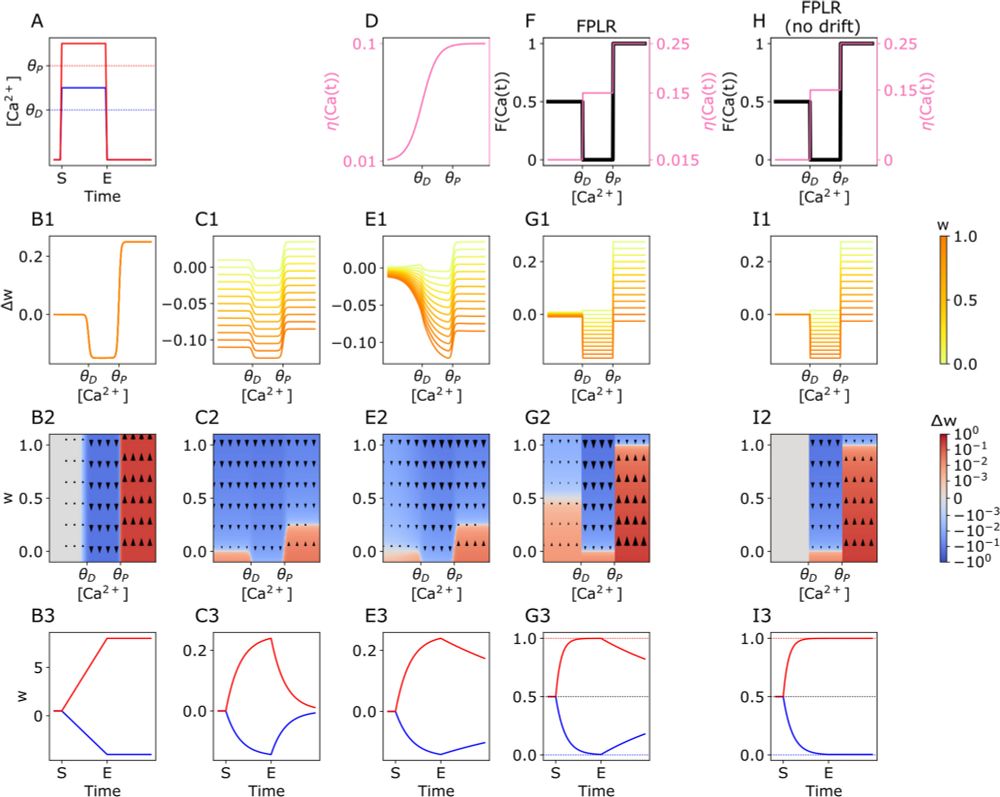

A generalized mathematical framework for the calcium control hypothesis describes weight-dependent synaptic plasticity - Journal of Computational Neuroscience

The brain modifies synaptic strengths to store new information via long-term potentiation (LTP) and long-term depression (LTD). Evidence has mounted that long-term synaptic plasticity is controlled vi...

link.springer.com
