Reposted by Nils Trost

Nils Trost

@trostnils.bsky.social

· Aug 14

Reposted by Nils Trost

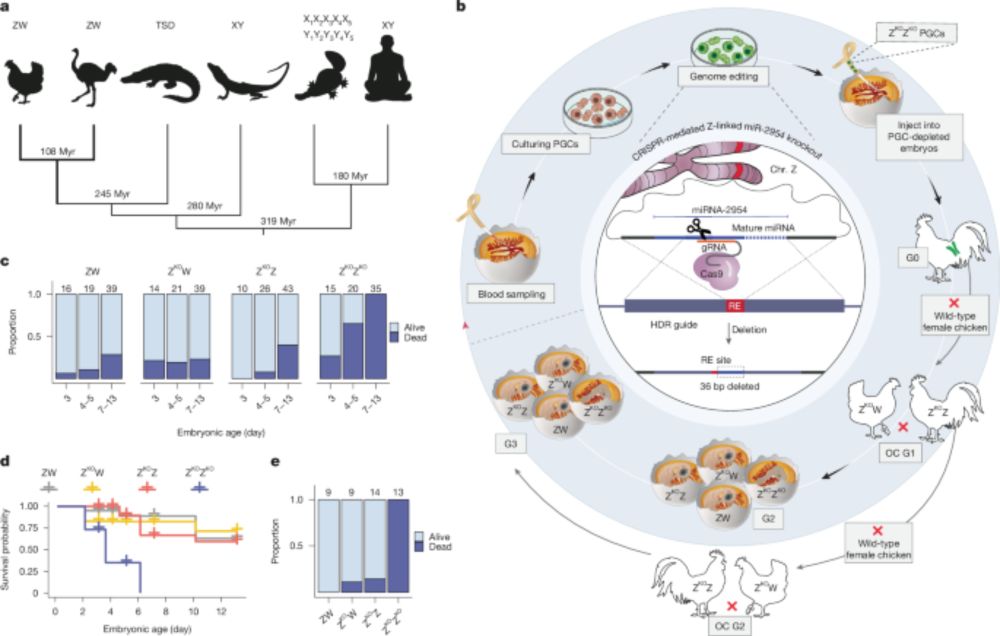

Kaessmann Lab

@kaessmannlab.bsky.social

· Jul 16

A male-essential miRNA is key for avian sex chromosome dosage compensation - Nature

Birds have evolved a unique sex chromosome dosage compensation mechanism involving the male-biased microRNA (miR-2954), which is essential for male survival by regulating the expression of dosage-sens...

www.nature.com

Reposted by Nils Trost

Nils Trost

@trostnils.bsky.social

· Jun 20

Reposted by Nils Trost

Nils Trost

@trostnils.bsky.social

· May 26

Reposted by Nils Trost

Kaessmann Lab

@kaessmannlab.bsky.social

· Feb 13

Developmental origins and evolution of pallial cell types and structures in birds

Innovations in the pallium likely facilitated the evolution of advanced cognitive abilities in birds. We therefore scrutinized its cellular composition and evolution using cell type atlases from chick...

www.science.org