Ulyana Piterbarg

@upiter.bsky.social

1.5K followers

330 following

5 posts

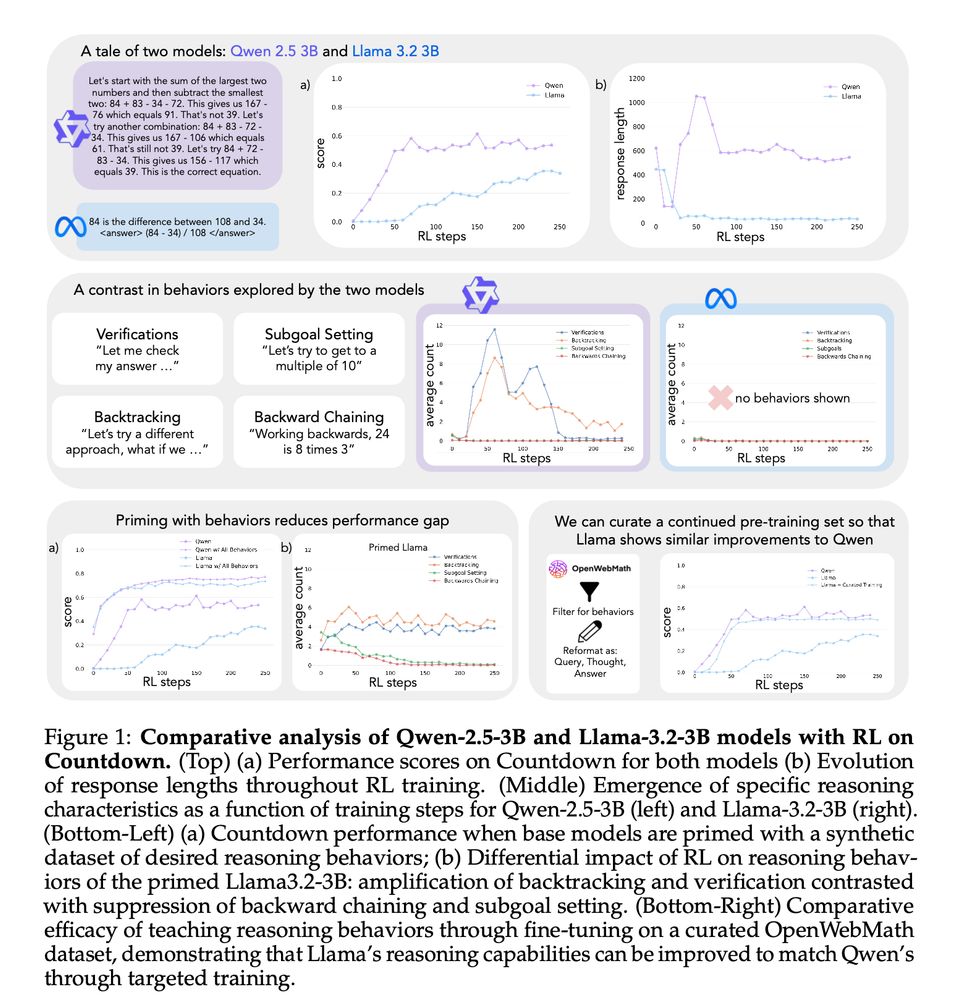

PhD at NYU studying reasoning, decision-making, and open-endedness

alum of MIT | prev: Google, MSR, MIT CoCoSci

https://upiterbarg.github.io/

Reposted by Ulyana Piterbarg

Lerrel Pinto

@lerrelpinto.com

· Feb 18

Reposted by Ulyana Piterbarg

Tim Rocktäschel

@handle.invalid

· Nov 20