Posts

Media

Videos

Starter Packs

Wanyun Xie

@wanyunxie.bsky.social

· Jul 16

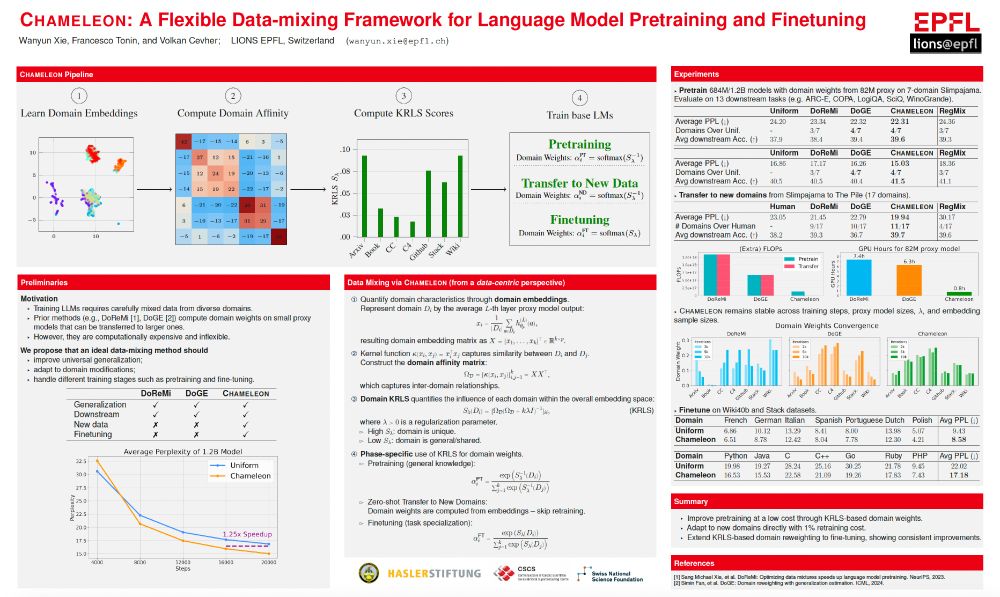

GitHub - LIONS-EPFL/Chameleon: Chameleon: A Flexible Data-mixing Framework for Language Model Pretraining and Finetuning, ICML 2025

Chameleon: A Flexible Data-mixing Framework for Language Model Pretraining and Finetuning, ICML 2025 - LIONS-EPFL/Chameleon

github.com

Wanyun Xie

@wanyunxie.bsky.social

· Jul 16

Wanyun Xie

@wanyunxie.bsky.social

· Jul 16

Reposted by Wanyun Xie

Reposted by Wanyun Xie

Wanyun Xie

@wanyunxie.bsky.social

· Dec 11

SAMPa: Sharpness-aware Minimization Parallelized

Sharpness-aware minimization (SAM) has been shown to improve the generalization of neural networks. However, each SAM update requires \emph{sequentially} computing two gradients, effectively doubling ...

arxiv.org

Wanyun Xie

@wanyunxie.bsky.social

· Dec 11

Wanyun Xie

@wanyunxie.bsky.social

· Dec 11