Achyuta Rajaram, Sarah Schwettmann, Jacob Andreas, Arthur Conmy: Line of Sight: On Linear Representations in VLLMs https://arxiv.org/abs/2506.04706 https://arxiv.org/pdf/2506.04706 https://arxiv.org/html/2506.04706

June 6, 2025 at 6:02 AM

Achyuta Rajaram, Sarah Schwettmann, Jacob Andreas, Arthur Conmy: Line of Sight: On Linear Representations in VLLMs https://arxiv.org/abs/2506.04706 https://arxiv.org/pdf/2506.04706 https://arxiv.org/html/2506.04706

Shalini Maiti, Lourdes Agapito, Filippos Kokkinos: Gen3DEval: Using vLLMs for Automatic Evaluation of Generated 3D Objects https://arxiv.org/abs/2504.08125 https://arxiv.org/pdf/2504.08125 https://arxiv.org/html/2504.08125

April 14, 2025 at 5:57 AM

Shalini Maiti, Lourdes Agapito, Filippos Kokkinos: Gen3DEval: Using vLLMs for Automatic Evaluation of Generated 3D Objects https://arxiv.org/abs/2504.08125 https://arxiv.org/pdf/2504.08125 https://arxiv.org/html/2504.08125

Muhammad Ali, Salman Khan

Waste-Bench: A Comprehensive Benchmark for Evaluating VLLMs in Cluttered Environments

https://arxiv.org/abs/2509.00176

Waste-Bench: A Comprehensive Benchmark for Evaluating VLLMs in Cluttered Environments

https://arxiv.org/abs/2509.00176

September 3, 2025 at 5:13 PM

Muhammad Ali, Salman Khan

Waste-Bench: A Comprehensive Benchmark for Evaluating VLLMs in Cluttered Environments

https://arxiv.org/abs/2509.00176

Waste-Bench: A Comprehensive Benchmark for Evaluating VLLMs in Cluttered Environments

https://arxiv.org/abs/2509.00176

Fine-Tuning vLLMs for Document Understanding

Learn how you can fine-tune visual language models for specific tasks

#ai #llm #news

Learn how you can fine-tune visual language models for specific tasks

#ai #llm #news

Fine-Tuning vLLMs for Document Understanding

Learn how you can fine-tune visual language models for specific tasks

towardsdatascience.com

May 6, 2025 at 3:30 PM

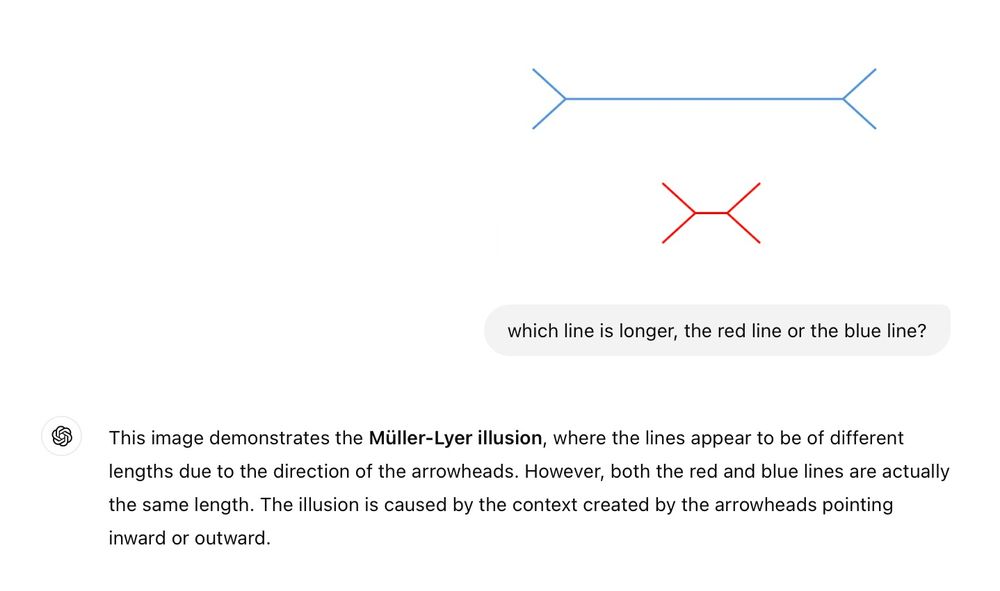

Clear illustration of the limitations of VLLMs. We still have a long way to go (most likely going beyond current training and model paradigms) before "solving vision".

thinking of calling this "The Illusion Illusion"

(more examples below)

(more examples below)

December 1, 2024 at 6:10 PM

Clear illustration of the limitations of VLLMs. We still have a long way to go (most likely going beyond current training and model paradigms) before "solving vision".

show that the knowledge boundary identified by our method for one VLLM can be used as a surrogate boundary for other VLLMs. Code will be released at https://github.com/Chord-Chen-30/VLLM-KnowledgeBoundary [6/6 of https://arxiv.org/abs/2502.18023v1]

February 26, 2025 at 5:58 AM

show that the knowledge boundary identified by our method for one VLLM can be used as a surrogate boundary for other VLLMs. Code will be released at https://github.com/Chord-Chen-30/VLLM-KnowledgeBoundary [6/6 of https://arxiv.org/abs/2502.18023v1]

arXiv:2504.05810v1 Announce Type: new

Abstract: Direct Preference Optimization (DPO) helps reduce hallucinations in Video Multimodal Large Language Models (VLLMs), but its reliance on offline preference data limits adaptability and fails to capture [1/7 of https://arxiv.org/abs/2504.05810v1]

Abstract: Direct Preference Optimization (DPO) helps reduce hallucinations in Video Multimodal Large Language Models (VLLMs), but its reliance on offline preference data limits adaptability and fails to capture [1/7 of https://arxiv.org/abs/2504.05810v1]

April 9, 2025 at 6:04 AM

arXiv:2504.05810v1 Announce Type: new

Abstract: Direct Preference Optimization (DPO) helps reduce hallucinations in Video Multimodal Large Language Models (VLLMs), but its reliance on offline preference data limits adaptability and fails to capture [1/7 of https://arxiv.org/abs/2504.05810v1]

Abstract: Direct Preference Optimization (DPO) helps reduce hallucinations in Video Multimodal Large Language Models (VLLMs), but its reliance on offline preference data limits adaptability and fails to capture [1/7 of https://arxiv.org/abs/2504.05810v1]

videos from YouTube, through rigorous annotation and verification, resulting in a benchmark with 101 videos and 806 question-answer pairs. Using MimeQA, we evaluate state-of-the-art video large language models (vLLMs) and [5/7 of https://arxiv.org/abs/2502.16671v1]

February 25, 2025 at 6:27 AM

videos from YouTube, through rigorous annotation and verification, resulting in a benchmark with 101 videos and 806 question-answer pairs. Using MimeQA, we evaluate state-of-the-art video large language models (vLLMs) and [5/7 of https://arxiv.org/abs/2502.16671v1]

Alexandros Xenos, Niki Maria Foteinopoulou, Ioanna Ntinou, Ioannis Patras, Georgios Tzimiropoulos

VLLMs Provide Better Context for Emotion Understanding Through Common Sense Reasoning

https://arxiv.org/abs/2404.07078

VLLMs Provide Better Context for Emotion Understanding Through Common Sense Reasoning

https://arxiv.org/abs/2404.07078

April 11, 2024 at 10:11 PM

Alexandros Xenos, Niki Maria Foteinopoulou, Ioanna Ntinou, Ioannis Patras, Georgios Tzimiropoulos

VLLMs Provide Better Context for Emotion Understanding Through Common Sense Reasoning

https://arxiv.org/abs/2404.07078

VLLMs Provide Better Context for Emotion Understanding Through Common Sense Reasoning

https://arxiv.org/abs/2404.07078

significantly higher defect rates compared to single-turn evaluations, highlighting deeper vulnerabilities in VLLMs. Notably, GPT-4o demonstrated the most balanced performance as measured by our Safety-Usability Index (SUI) followed closely by [6/7 of https://arxiv.org/abs/2505.04673v1]

May 9, 2025 at 5:57 AM

significantly higher defect rates compared to single-turn evaluations, highlighting deeper vulnerabilities in VLLMs. Notably, GPT-4o demonstrated the most balanced performance as measured by our Safety-Usability Index (SUI) followed closely by [6/7 of https://arxiv.org/abs/2505.04673v1]

Shalini Maiti, Lourdes Agapito, Filippos Kokkinos

Gen3DEval: Using vLLMs for Automatic Evaluation of Generated 3D Objects

https://arxiv.org/abs/2504.08125

Gen3DEval: Using vLLMs for Automatic Evaluation of Generated 3D Objects

https://arxiv.org/abs/2504.08125

April 14, 2025 at 8:00 AM

Shalini Maiti, Lourdes Agapito, Filippos Kokkinos

Gen3DEval: Using vLLMs for Automatic Evaluation of Generated 3D Objects

https://arxiv.org/abs/2504.08125

Gen3DEval: Using vLLMs for Automatic Evaluation of Generated 3D Objects

https://arxiv.org/abs/2504.08125

evaluation framework and research roadmap for developing VLLMs that meet the safety and robustness requirements for real-world autonomous systems. We released the benchmark toolbox and the fine-tuned model at: https://github.com/tong-zeng/DVBench.git. [8/8 of https://arxiv.org/abs/2504.14526v1]

April 22, 2025 at 6:09 AM

evaluation framework and research roadmap for developing VLLMs that meet the safety and robustness requirements for real-world autonomous systems. We released the benchmark toolbox and the fine-tuned model at: https://github.com/tong-zeng/DVBench.git. [8/8 of https://arxiv.org/abs/2504.14526v1]

flexibility of state-of-the-art VLLMs (GPT-4o, Gemini-1.5 Pro, and Claude-3.5 Sonnet) using the Wisconsin Card Sorting Test (WCST), a classic measure of set-shifting ability. Our results reveal that VLLMs achieve or surpass human-level set-shifting [2/5 of https://arxiv.org/abs/2505.22112v1]

May 29, 2025 at 5:56 AM

flexibility of state-of-the-art VLLMs (GPT-4o, Gemini-1.5 Pro, and Claude-3.5 Sonnet) using the Wisconsin Card Sorting Test (WCST), a classic measure of set-shifting ability. Our results reveal that VLLMs achieve or surpass human-level set-shifting [2/5 of https://arxiv.org/abs/2505.22112v1]

Computer vision has done as well as it has because the computational design very closely matches known parts of the primate visual cortex.

LLMs, including VLLMs, are clearly not biological analogues.

LLMs, including VLLMs, are clearly not biological analogues.

February 28, 2025 at 4:52 PM

Computer vision has done as well as it has because the computational design very closely matches known parts of the primate visual cortex.

LLMs, including VLLMs, are clearly not biological analogues.

LLMs, including VLLMs, are clearly not biological analogues.

adversarial examples are highly transferable to widely-used proprietary VLLMs such as GPT-4o, Claude, and Gemini. We show that attackers can craft perturbations to induce specific attacker-chosen interpretations of visual information, such as [3/6 of https://arxiv.org/abs/2505.01050v1]

May 5, 2025 at 6:00 AM

adversarial examples are highly transferable to widely-used proprietary VLLMs such as GPT-4o, Claude, and Gemini. We show that attackers can craft perturbations to induce specific attacker-chosen interpretations of visual information, such as [3/6 of https://arxiv.org/abs/2505.01050v1]

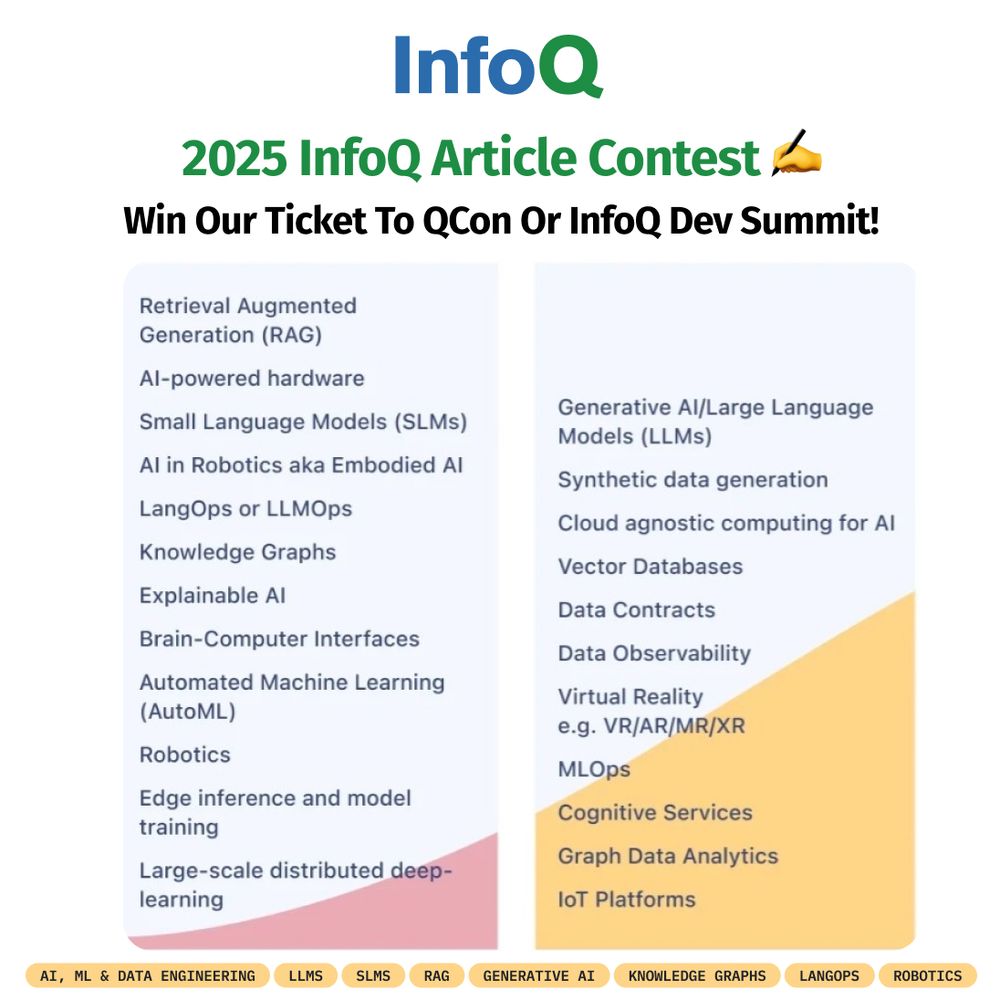

Are you passionate about the latest in #AI? Here's your chance to shine!

✍️ Join the #InfoQ Annual Article Writing Competition!

🏆 Win a #FreeTicket to #QCon or #InfoQDevSummit!

🔗 bit.ly/4hGgNUn

Explore topics like #LLMs, #SLMs, #vLLMs, #GenAI, #VectorDatabases, #ExplainableAI, #RAG & more!

✍️ Join the #InfoQ Annual Article Writing Competition!

🏆 Win a #FreeTicket to #QCon or #InfoQDevSummit!

🔗 bit.ly/4hGgNUn

Explore topics like #LLMs, #SLMs, #vLLMs, #GenAI, #VectorDatabases, #ExplainableAI, #RAG & more!

March 19, 2025 at 4:57 PM

Are you passionate about the latest in #AI? Here's your chance to shine!

✍️ Join the #InfoQ Annual Article Writing Competition!

🏆 Win a #FreeTicket to #QCon or #InfoQDevSummit!

🔗 bit.ly/4hGgNUn

Explore topics like #LLMs, #SLMs, #vLLMs, #GenAI, #VectorDatabases, #ExplainableAI, #RAG & more!

✍️ Join the #InfoQ Annual Article Writing Competition!

🏆 Win a #FreeTicket to #QCon or #InfoQDevSummit!

🔗 bit.ly/4hGgNUn

Explore topics like #LLMs, #SLMs, #vLLMs, #GenAI, #VectorDatabases, #ExplainableAI, #RAG & more!

was sind denn grad gute clients für openai-server vllms?

May 25, 2025 at 4:23 PM

was sind denn grad gute clients für openai-server vllms?

It's still a mystery to me how easy we can align vision to the LLM embedding space, which is what 99% of VLLMs do. And it kind of works with just 1-2 MLP layers but is somehow not interpretable (see fig from Clip Clap paper below)

So I'm wondering if anyone has seen papers that study this more?

So I'm wondering if anyone has seen papers that study this more?

October 24, 2024 at 6:45 PM

It's still a mystery to me how easy we can align vision to the LLM embedding space, which is what 99% of VLLMs do. And it kind of works with just 1-2 MLP layers but is somehow not interpretable (see fig from Clip Clap paper below)

So I'm wondering if anyone has seen papers that study this more?

So I'm wondering if anyone has seen papers that study this more?