Arkil Patel

@arkil.bsky.social

270 followers

400 following

9 posts

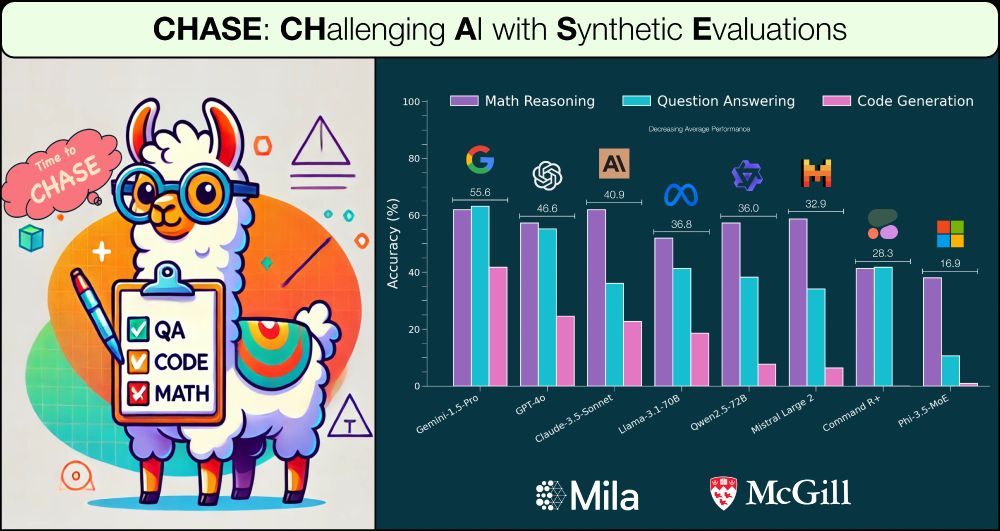

PhD Student at Mila and McGill | Research in ML and NLP | Past: AI2, MSFTResearch

arkilpatel.github.io

Pinned

Reposted by Arkil Patel

Arkil Patel

@arkil.bsky.social

· Feb 21
