w/ Michelle Yang, @sivareddyg.bsky.social, @msonderegger.bsky.social and @dallascard.bsky.social👇(1/12)

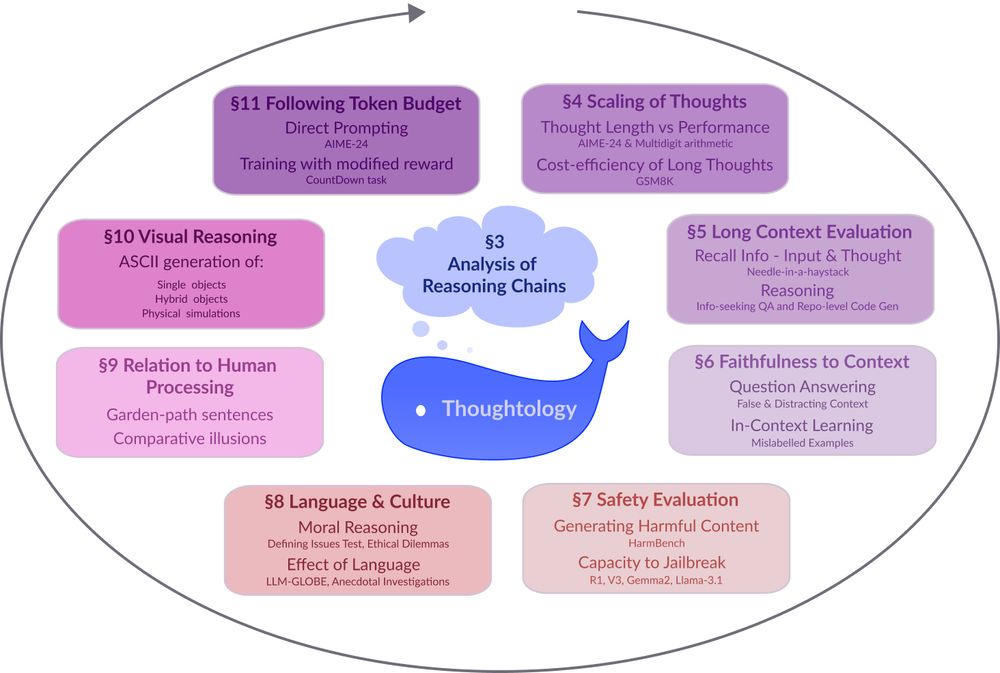

🔗: mcgill-nlp.github.io/thoughtology/

Retrievers need to be aligned too! 🚨🚨🚨

Work done with the wonderful Nick and @sivareddyg.bsky.social

🔗 mcgill-nlp.github.io/malicious-ir/

Thread: 🧵👇

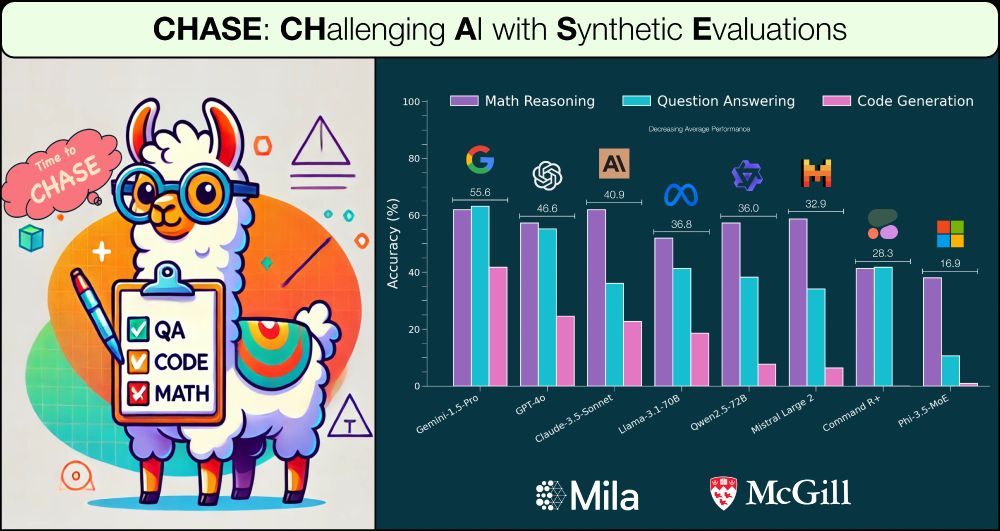

Work w/ fantastic advisors Dima Bahdanau and @sivareddyg.bsky.social

Thread 🧵:
