Marcel Hussing

@marcelhussing.bsky.social

2.9K followers

340 following

130 posts

PhD student at the University of Pennsylvania. Previously an intern at MSR, currently at Meta FAIR. Interested in reliable and replicable reinforcement learning, robotics, and knowledge discovery: https://marcelhussing.github.io/

All posts are my own.

Pinned

Marcel Hussing

@marcelhussing.bsky.social

· Nov 10

Reposted by Marcel Hussing

Marcel Hussing

@marcelhussing.bsky.social

· Sep 11

Marcel Hussing

@marcelhussing.bsky.social

· Aug 23

Marcel Hussing

@marcelhussing.bsky.social

· Aug 16

Marcel Hussing

@marcelhussing.bsky.social

· Jul 17

Reposted by Marcel Hussing

Claas Voelcker

@cvoelcker.bsky.social

· Jun 19

Anastasiia Pedan

@pedanana.bsky.social

· Jun 19

Reposted by Marcel Hussing

Marcel Hussing

@marcelhussing.bsky.social

· Apr 25

Reposted by Marcel Hussing

Marcel Hussing

@marcelhussing.bsky.social

· Feb 22

Reposted by Marcel Hussing

Marcel Hussing

@marcelhussing.bsky.social

· Feb 11

Claas Voelcker

@cvoelcker.bsky.social

· Feb 11

Reposted by Marcel Hussing

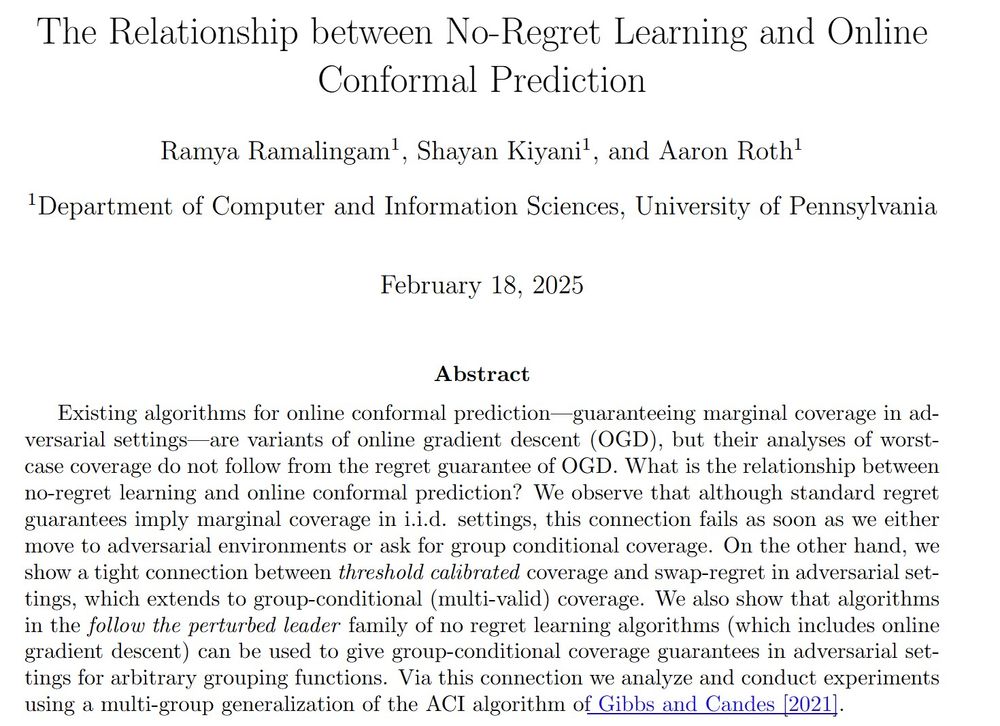

Aaron Roth

@aaroth.bsky.social

· Feb 8

Reposted by Marcel Hussing

Marcel Hussing

@marcelhussing.bsky.social

· Jan 29