Sergio Izquierdo

@sizquierdo.bsky.social

52 followers

66 following

11 posts

PhD candidate at University of Zaragoza.

Previously intern at Niantic Labs and Skydio.

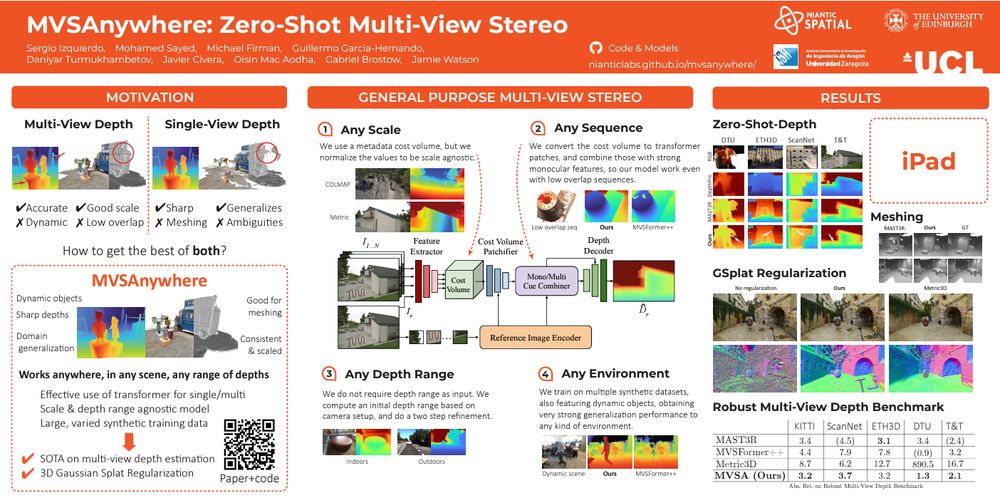

Working on 3D reconstruction and Deep Learning.

serizba.github.io

Posts


Reposted by Sergio Izquierdo

Sergio Izquierdo

@sizquierdo.bsky.social

· Jun 14

Sergio Izquierdo

@sizquierdo.bsky.social

· Mar 31
