I think this is why LLMs often feel 'fixated on the wrong thing' or 'overly literal'—they are usually responding using the most relevant single thing they remember, not the aggregate of what was said

We introduce Oolong, a dataset of simple-to-verify information aggregation questions over long inputs. No model achieves >50% accuracy at 128K on Oolong!

🐟interns own major parts of our model development, sometimes even leading whole projects

🐡we're committed to open science & actively help our interns publish their work

reach out if u wanna build open language models together 🤝

links 👇

A gentle and comprehensive introduction to the DeltaNet

Part 1: sustcsonglin.github.io/blog/2024/de...

Part 2: sustcsonglin.github.io/blog/2024/de...

Part 3: sustcsonglin.github.io/blog/2024/de...

Can embedding models capture this? We study this in the context of fanfiction!

We designed this class to give a broad view on the space, from more classical decoding algorithms to recent methods for LLMs, plus a wide range of efficiency-focused work.

website: phontron.com/class/lminfe...

🧵1/9

Finally analyzed my PhD time tracking data so you can plan your own research journey more effectively: mxij.me/x/phd-learning-dynamics

For current students: I hope this helps put your journey into perspective. Wishing you all the best!

What is a MaterialsGPT? Where does that idea come from? I got to spend a lot of time thinking about that second question with @davidthewid.bsky.social and Lucy Suchman (!) working on this:

@siree.sh, Lucy Suchman, and I examine a corpus of 7,000 US Military grant solicitations to ask what the world’s largest military wants to do with AI, by looking at what it seeks to fund. #STS

📄: arxiv.org/pdf/2411.17840

We find…

🌟 In our new paper, we rethink how we should be controlling for these factors 🧵:

In this work we take the first steps towards asking whether LLMs can cater to diverse cultures in *user-facing generative* tasks.

[1/7]

✨linguistically+cognitively motivated evaluation

✨NLP for low-resource+endangered languages

✨figuring out what features of language data LMs are *actually* learning

I'll be presenting two posters 🧵:

But guess what? We won. All the sleazy scammers are doing natural language processing now.

You can try out recipes👩‍🍳 iterate on ✨vibes✨ but we can't actually test all possible combos of tweaks,,, right?? 🙅‍♂️WRONG! arxiv.org/abs/2410.15661 (1/n) 🧵

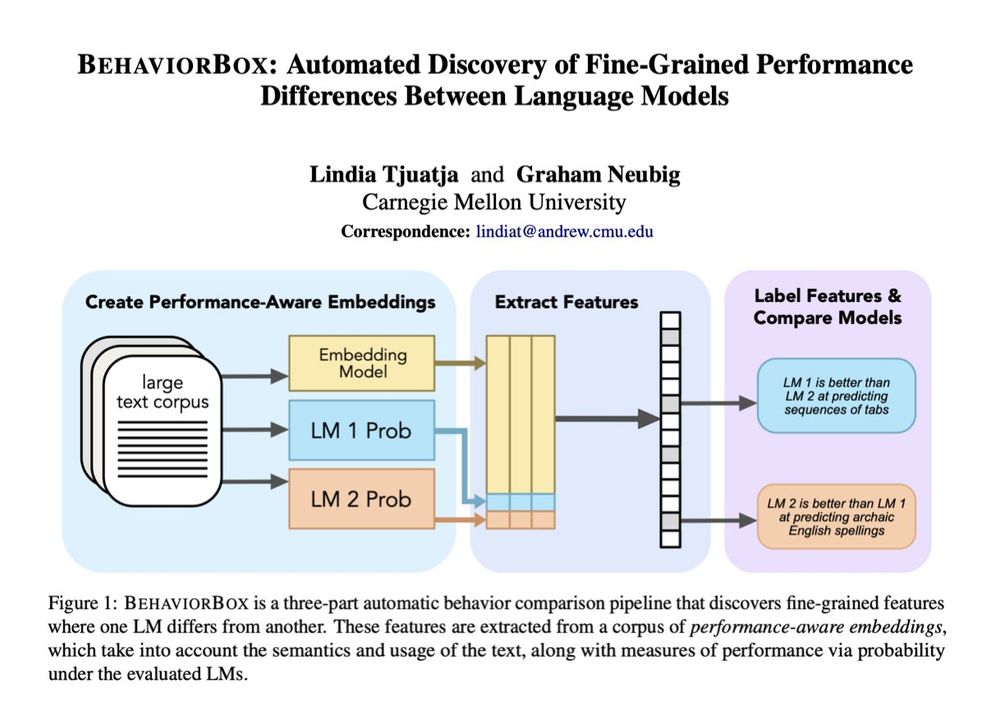

To appear at EMNLP 2023: arxiv.org/abs/2310.07715

Talking to the youths in 2043: did you know that "tweet" comes from a pun on Twitter, a precursor to various shortform social media
