www.dallasfed.org/research/eco...

The rest of the world may follow suit soon, as evidence of AI's extreme risks continues to accumulate.

www.yahoo.com/news/article...

We vote no to superintelligence if it may cause humanity's extinction.

To AI companies: first prove your product won't kill everyone.

The fire alarm is blaring. Yet AI companies are still putting fuel on the fire.

77% want us to move slowly on AI development:

www.axios.com/2025/05/27/a...

Common sense seems to be spreading, believe it or not.

This is a remarkable move forward for humanity. We are immensely grateful for all the thousands of people who helped make this happen.

But it's not enough and it's many years too late.

en.wikipedia.org/wiki/Darwin_...

This will be our eighth call. According to participants, they've consistently been illuminating.

Please join us if you're keen!

"House Republicans introduced new language to the Budget Reconciliation bill that will immiserate the lives of millions of Americans by cutting their access to Medicaid, and making life much more difficult for millions more by making them pay higher fees..."

If you're leading or helping with strategy for an existential safety-focused organization, please join us: lu.ma/k4litt6x.

We strategize and ideate. We laugh and despair. They have been surprisingly motivating.

See notforprivategain.org.

"The nations of the world made history in Geneva today," said Dr Tedros Adhanom Ghebreyesus, WHO Director-General.

"In reaching consensus on the Pandemic Agreement, not only did they put in place a generational accord...

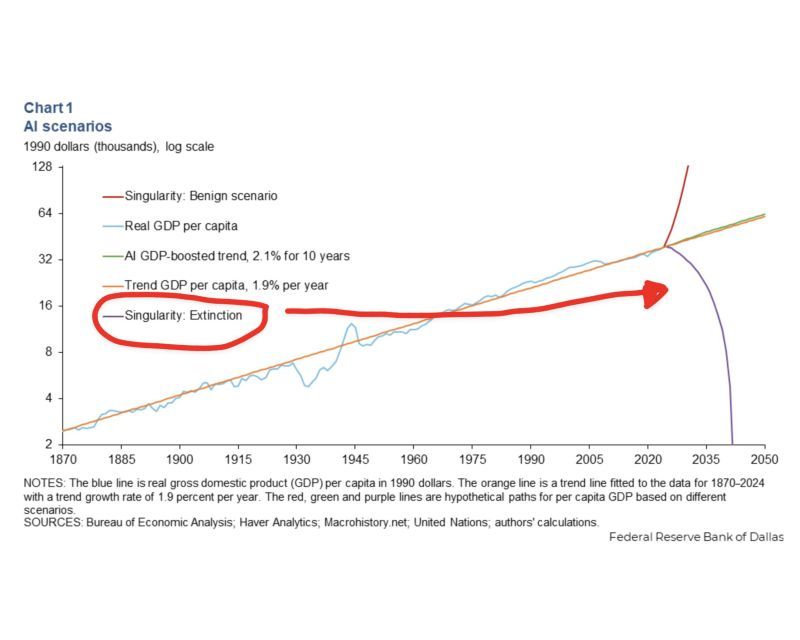

It predicts artificial superintelligence in 2027.

This means the end of humanity as we know it shortly afterward.

We must collectively intervene, now.

It was troubling that so few speakers explicitly mentioned the alien elephant in the room: we face likely extinction or permanent disempowerment from AI unless we change course as a species.

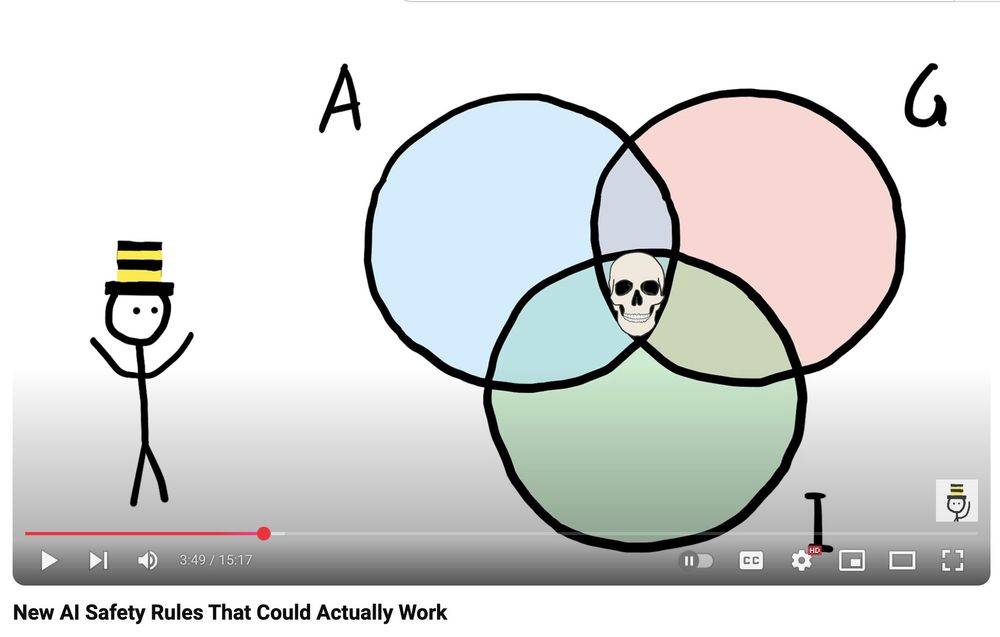

🎥 Watch at the link in the replies for a breakdown of the risks from smarter-than-human AI, and Anthony's proposals to steer us toward a safer future:

But is AI safety action?

Please consider doing what you can to help with the fight. Take the Existential Safety Action Pledge: actionforsafety.org.

They just published a short paper summarizing the input of the 500+ individuals involved: un.org/global-digit...

Given the stakes, this may go down in history as one of the most important conferences ever.

Get to the streets and protest.

Protests: pauseai.info/2025-february

Sign the petition: chng.it/WJh5XL52K4