In search of statistical intuition for modern ML & simple explanations for complex things👀

Interested in the mysteries of modern ML, causality & all of stats. Opinions my own.

https://aliciacurth.github.io

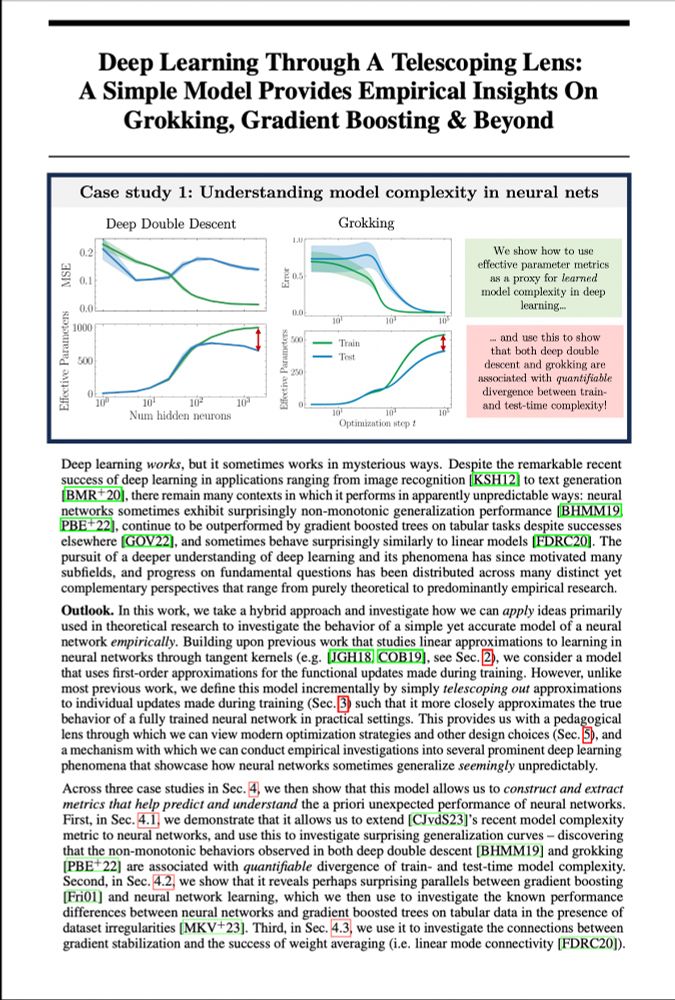

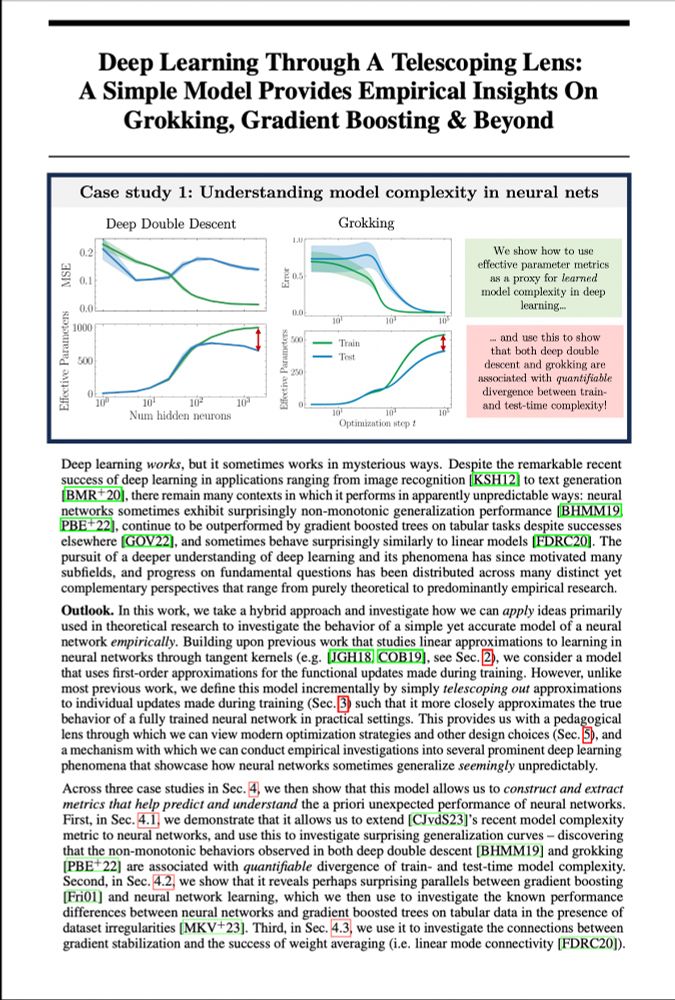

For NeurIPS (my final PhD paper!), @alanjeffares.bsky.social & I explored if & how smart linearisation can help us better understand & predict numerous odd deep learning phenomena, and learned a lot.. 🧵1/n

@alanjeffares.bsky.social & I suspected that answers to this are obfuscated by the 2 being considered very different algs🤔

Instead we show they are more similar than you’d think — making their diffs smaller but predictive!🧵1/n

(True story: the origin of this case study is that @alanjeffares.bsky.social [big EoSL nerd] looked at the neural net eq & said “kinda looks like GBTs in EoSL Ch10” & we went from there)

Surely this diff in kernel must account for at least some of the observed performance differences… 🤔7/n

If it’s causal, we will make you.

Strong conclusion for n=3 but I’m willing to use a pretty strong prior here…

understanding double descent, random forests, neural network complexity, and now causal inference — smoothers are just at your service when you need them

Smoothers, we need smoothers!!! Outcome nuisance parameters have to be estimated using methods like (post-selection) OLS, (kernel) (ridge) or series regressions, tree-based methods, …

Check out @aliciacurth.bsky.social for nice references and cool insights using smoothers in ML.
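
To make the "smoother" idea concrete: a linear smoother is any method whose predictions are a fixed weighted combination of the training outcomes, i.e. `y_hat = S @ y`. Below is a minimal sketch for ridge regression (no intercept, for brevity). The function name `ridge_smoother_matrix`, the simulated data and the penalty `lam` are illustrative assumptions, not taken from the posts or the linked papers.

```python
import numpy as np

def ridge_smoother_matrix(X_train, X_test, lam):
    """Return S such that ridge predictions at X_test equal S @ y_train.

    Hypothetical helper for illustration. Uses the closed form
    beta = (X'X + lam * I)^{-1} X'y, so y_hat = X_test (X'X + lam * I)^{-1} X' y.
    """
    p = X_train.shape[1]
    A = X_train.T @ X_train + lam * np.eye(p)
    return X_test @ np.linalg.solve(A, X_train.T)

# Simulated data (assumption: any regression data set would do here)
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + rng.normal(scale=0.1, size=50)

lam = 1.0
S = ridge_smoother_matrix(X, X, lam)
y_hat = S @ y  # predictions as weighted sums of the training outcomes

# Same predictions obtained the usual way, via the ridge coefficients
beta = np.linalg.solve(X.T @ X + lam * np.eye(3), X.T @ y)
y_hat_direct = X @ beta
```

Each row of `S` holds the weights that one prediction places on the training outcomes; the weights depend on `X` and `lam` but not on `y`.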

1. Recipe to write estimators as weighted outcomes

2. Double ML and causal forests as weighting estimators

3. Plug&play classic covariate balancing checks

4. Explains why Causal ML fails to find an effect of 1 with noiseless outcome Y = 1 + D

5. More fun facts

arxiv.org/abs/2411.11559
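
As a rough illustration of points 1 and 3, here is the simplest case I can think of: the OLS coefficient on a treatment `D` written as a weighted sum of outcomes, with the balancing properties of the weights checked directly. This is plain OLS on simulated data, chosen only because the weights have a closed form; it is not the paper's derivation for Double ML or causal forests, and all variable names are assumptions.

```python
import numpy as np

# Simulated data (assumption): binary treatment D, two covariates X,
# and the noiseless outcome Y = 1 + D mentioned in point 4.
rng = np.random.default_rng(0)
n = 200
X = rng.normal(size=(n, 2))
D = (rng.uniform(size=n) < 0.5).astype(float)
Y = 1.0 + D

# Design matrix with intercept, treatment and covariates
Z = np.column_stack([np.ones(n), D, X])

# OLS coefficients are (Z'Z)^{-1} Z' Y, so each coefficient is a
# weighted sum of outcomes. Row 1 gives the weights for the D coefficient.
W = np.linalg.solve(Z.T @ Z, Z.T)  # shape (4, n)
w = W[1]

tau_hat = w @ Y  # treatment effect estimate as weighted outcomes

# Balancing checks that follow from W @ Z = I:
sum_of_weights = w.sum()   # weights sum to 0
weighted_treatment = w @ D  # weights put total mass 1 on D
weighted_covariates = w @ X  # weights are orthogonal to each covariate
```

Because the weights sum to zero and satisfy `w @ D = 1`, this OLS estimator returns exactly 1 for `Y = 1 + D`. Whether the weights of other estimators share these properties is what the balancing checks in the list above are for.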

So: Hi, I’m Alicia, Machine Learning Researcher at MSR (since last month)! Prev I was a PhD student in Cambridge trying to make sense of the mysteries of modern Machine Learning (— to be continued!!) :)
