Théophile Lambert @jsunn-y.bsky.social @francesarnold.bsky.social

www.biorxiv.org/content/10.1...

Achilles Coffee Roasters Gaslamp, San Diego.

4:45pm - 6:30pm

I will be announcing one more meetup later this week if you can't make it to this one. Stay tuned!

“Bigger, Better Ambitions for AI” — exploring how #AI can drive positive impact.

Oct 20 | 3–4:15pm | 2400 Ridge Rd

@BerkeleyISchool.bsky.social @GoldmanSchool.bsky.social

@francesarnold.bsky.social lab in the tryptophan synthase (TrpB) family. www.biorxiv.org/content/10.1...

AI can create novel enzymes that outperform both natural and laboratory-optimized TrpBs.

IEEE Kiyo Tomiyasu award for bringing AI to scientific domains with Neural Operators and physics-informed learning. The future of science is AI+Science!

corporate-awards.ieee.org/award/ieee-k...

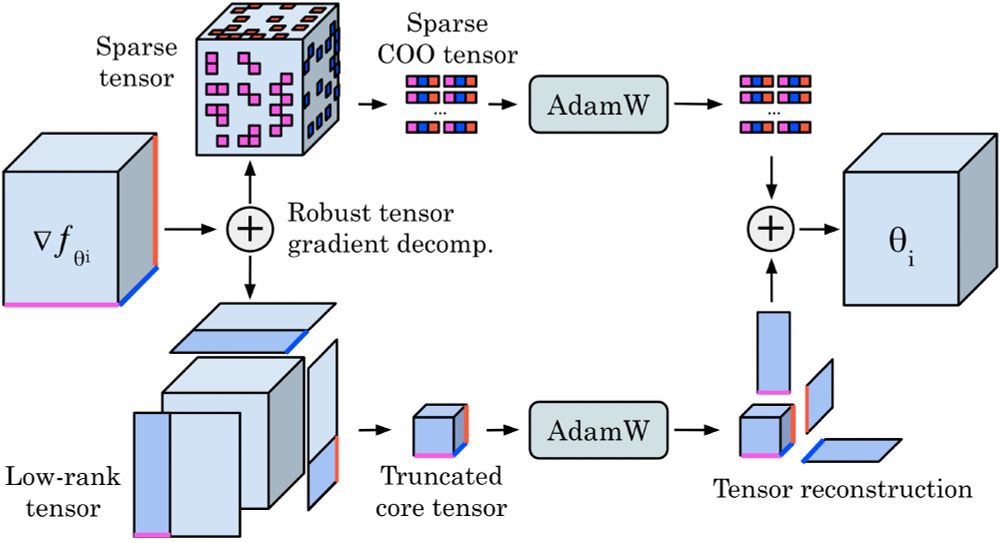

@aditijc.bsky.social, Rayhan Zirvi, Abbas Mammadov, @jiacheny.bsky.social, Chuwei Wang, @anima-anandkumar.bsky.social 1/

magazine.caltech.edu/post/ai-mach...

We use a robust decomposition of the gradient tensors into low-rank + sparse parts to reduce optimizer memory for Neural Operators by up to 𝟕𝟓%, while matching the performance of Adam, even on turbulent Navier–Stokes (Re 10e5).
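To make the low-rank + sparse idea concrete, here is a minimal NumPy sketch. It is not the method from the paper: it assumes a plain 2-D gradient matrix, a single truncated SVD for the low-rank part, and top-k magnitude thresholding for the sparse part. The function name `lowrank_plus_sparse` and the `rank` and `sparsity` parameters are hypothetical, chosen only for illustration.

```python
import numpy as np


def lowrank_plus_sparse(grad, rank, sparsity):
    """Split a 2-D gradient into a low-rank part and a sparse residual.

    Illustrative assumption: one truncated SVD plus top-k thresholding,
    not the robust decomposition used in the paper.
    """
    # Low-rank part: best rank-`rank` approximation via truncated SVD.
    u, s, vt = np.linalg.svd(grad, full_matrices=False)
    low_rank = (u[:, :rank] * s[:rank]) @ vt[:rank]

    # Sparse part: keep only the largest-magnitude entries of the residual.
    residual = grad - low_rank
    flat_res = residual.ravel()
    k = int(sparsity * flat_res.size)  # number of entries to keep
    sparse_flat = np.zeros(flat_res.shape, dtype=residual.dtype)
    if k > 0:
        idx = np.argpartition(np.abs(flat_res), -k)[-k:]
        sparse_flat[idx] = flat_res[idx]
    sparse = sparse_flat.reshape(residual.shape)
    return low_rank, sparse
```

In a sketch like this, the memory saving would come from keeping optimizer state only for the two rank-`rank` factors and the `k` retained entries instead of the full gradient; the actual saving depends on the choices made in the paper and code linked in this thread.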

Paper: arxiv.org/abs/2501.02379

Code: github.com/neuraloperat...

AI needs to understand the physical world to make new scientific discoveries.

LLMs come up with new ideas, but the bottleneck is testing them in the real world.

Physics-informed learning is needed.

youtu.be/NYtQuneZMXc?...

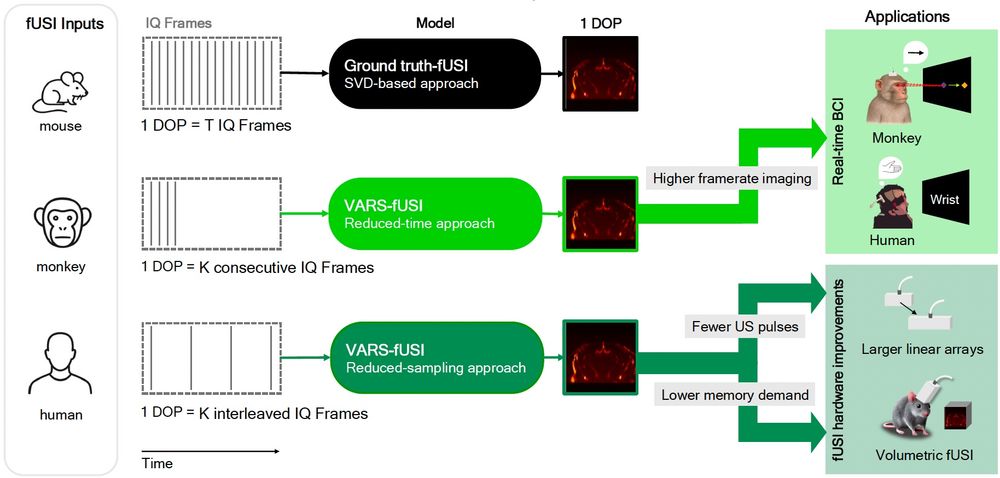

The first deep learning fUSI method to allow for different sampling durations and rates during training and inference. biorxiv.org/content/10.1... 1/
