Huge congratulations to the authors! 🎉

@neuripsconf.bsky.social. Full schedule featuring Mila-affiliated researchers presenting their work at #NeurIPS2025 here mila.quebec/en/news/foll...

Come to our poster to talk more about representation geometry in LLMs. 😃

🗓️ Friday 4:30-7:30 pm session

📍 Exhibit Hall C, D, E

🏁 Poster # 2502

How does the complexity of this mapping change across LLM training? How does it relate to the model’s capabilities? 🤔

Announcing our #NeurIPS2025 📄 that dives into this.

🧵below

#AIResearch #MachineLearning #LLM

Introducing our new framework, MUPI (Embedded Universal Predictive Intelligence), which provides a theoretical basis for new cooperative solutions in RL.

Preprint🧵👇

(Paper link below.)

#neuroskyence

www.thetransmitter.org/this-paper-c...

I have been asked this when talking about our work on using power laws to study representation quality in deep neural networks; glad to have a more concrete answer now! 😃

www.biorxiv.org/content/10.1...
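
For anyone wondering what a "power law" measure of representation quality looks like in practice, here is a minimal sketch, assuming the quantity of interest is the decay exponent α of the representation covariance eigenspectrum (λ_k ∝ k^(−α)), fitted by ordinary least squares in log-log space. The helper `powerlaw_alpha` and the synthetic data are illustrative assumptions, not the code from the paper.

```python
import numpy as np


def powerlaw_alpha(features: np.ndarray, eps: float = 1e-12) -> float:
    """Estimate alpha in lambda_k ~ k^(-alpha) for the covariance eigenspectrum.

    features: (n_samples, n_dims) array of representations.
    Hypothetical helper: a plain least-squares line fit in log-log space.
    """
    centered = features - features.mean(axis=0, keepdims=True)
    cov = centered.T @ centered / (features.shape[0] - 1)
    eigvals = np.linalg.eigvalsh(cov)[::-1]  # eigvalsh returns ascending; flip to descending
    eigvals = eigvals[eigvals > eps]          # drop numerically zero modes before taking logs
    ranks = np.arange(1, eigvals.size + 1)
    slope, _intercept = np.polyfit(np.log(ranks), np.log(eigvals), deg=1)
    return float(-slope)                      # alpha is the negative log-log slope


if __name__ == "__main__":
    # Synthetic check (illustrative only): variances decay as k^-1, so alpha should be near 1.
    rng = np.random.default_rng(0)
    d = 64
    x = rng.standard_normal((20000, d)) * (np.arange(1, d + 1) ** -0.5)
    print(f"estimated alpha: {powerlaw_alpha(x):.2f}")
```

A steeper decay (larger α) means variance is concentrated in fewer directions; a flatter one means it is spread across many.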

His “epigenetic landscape” is a diagrammatic representation of the constraints influencing embryonic development.

On his 50th birthday, his colleagues gave him a pinball machine modelled on the epigenetic landscape.

🧪 🦫🦋 🌱🐋 #HistSTM #philsci #evobio

Funded by @ivado.bsky.social and in collaboration with the IVADO regroupement 1 (AI and Neuroscience: ivado.ca/en/regroupem...).

Interested? See the details in the comments. (1/3)

🧠🤖

Previously, we showed that neural representations for movement control are largely distinct depending on whether they arise from supervised or reinforcement learning. The latter most closely matches NHP (non-human primate) recordings.

We used a combination of neural recordings & modelling to show that RL yields neural dynamics closer to biology, with useful continual learning properties.

www.biorxiv.org/content/10.1...

🚨 New preprint! 🚨

Excited and proud (& a little nervous 😅) to share our latest work on the importance of #theta-timescale spiking during #locomotion in #learning. If you care about how organisms learn, buckle up. 🧵👇

📄 www.biorxiv.org/content/10.1...

💻 code + data 🔗 below 🤩

#neuroskyence

This group got it working!

arxiv.org/abs/2506.17768

May be a great way to reduce AI energy use!!!

#MLSky 🧪

Can't wait to read in detail.

It's a pleasure to share our paper at @cp-cell.bsky.social, showing how mice learning over long timescales display key hallmarks of gradient descent (GD).

The culmination of my PhD supervised by @laklab.bsky.social, @saxelab.bsky.social and Rafal Bogacz!

Multi-agent reinforcement learning (MARL) often assumes that agents know when other agents cooperate with them. But for humans, this isn’t always the case. For example, Plains Indigenous groups used to leave resources for others to use at effigies called Manitokan.

1/8

@aggieinca.bsky.social + gang for developing RankMe and LiDAR, respectively.

Reptrix incorporates these representation quality metrics. 🚀

Let's make it easier to select good SSL/foundation models. 💪

🦖Introducing Reptrix, a #Python library to evaluate representation quality metrics for neural nets: github.com/BARL-SSL/rep...

🧵👇[1/6]

#DeepLearning
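
As a rough illustration of what one of these metrics computes, here is a minimal NumPy sketch of RankMe as it is usually defined: the exponential of the Shannon entropy of the L1-normalized singular values of an embedding matrix. The function `rankme` is a hypothetical standalone helper, not Reptrix's API; check the repo for the library's actual interface.

```python
import numpy as np


def rankme(embeddings: np.ndarray, eps: float = 1e-7) -> float:
    """Smooth effective rank of an (n_samples, dim) embedding matrix.

    Hypothetical helper (not Reptrix's API): exp of the entropy of the
    L1-normalized singular values, with a small eps for numerical stability.
    """
    singular_values = np.linalg.svd(embeddings, compute_uv=False)
    p = singular_values / (singular_values.sum() + eps) + eps  # normalized spectrum
    return float(np.exp(-(p * np.log(p)).sum()))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    isotropic = rng.standard_normal((10000, 16))                    # spread over all 16 dims
    collapsed = np.outer(rng.standard_normal(10000), np.ones(16))  # rank-1 "collapsed" embeddings
    print(f"isotropic: {rankme(isotropic):.2f}, collapsed: {rankme(collapsed):.2f}")
```

Higher values mean the embeddings use more dimensions; a fully collapsed representation scores close to 1, which is why the score can flag poor SSL models without labels.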

It formalizes a lot of ideas I have been mulling over the past year, and connects tons of historical ideas neatly.

Definitely worth a read if you are working/interested in mechanistic interp and neural representations.

arxiv.org/abs/2503.01824

@tyrellturing.bsky.social for supporting me through this amazing journey! 🙏

Big thanks to all members of the LiNC lab, and colleagues at McGill University and @mila-quebec.bsky.social. ❤️😁

can-acn.org/scientist-se...

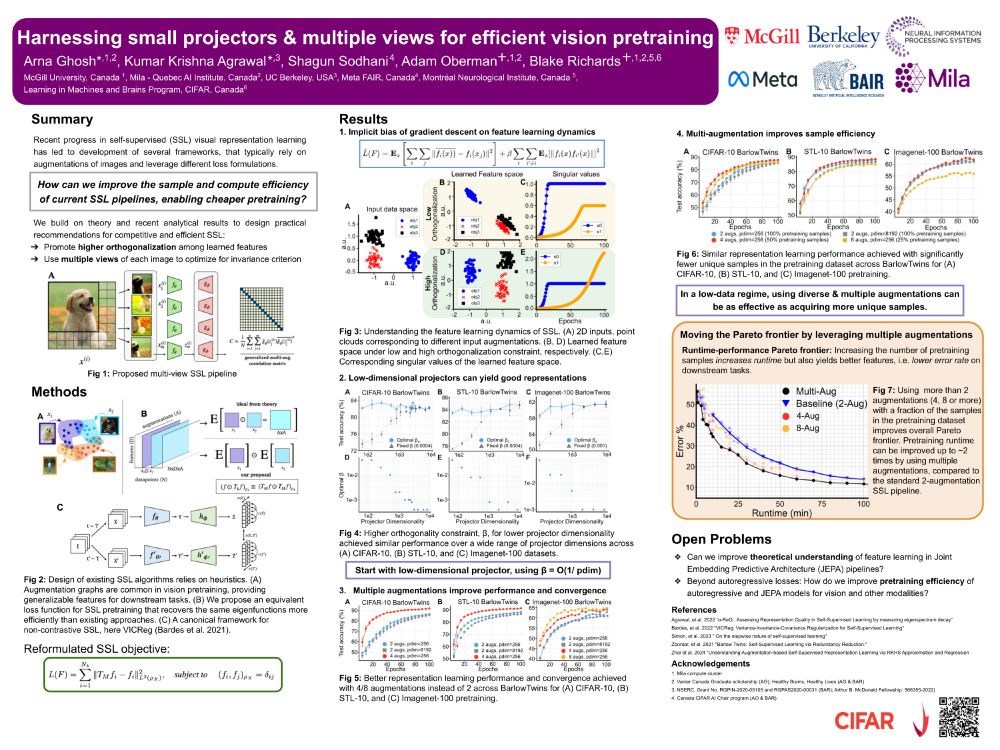

#NeurIPS2024

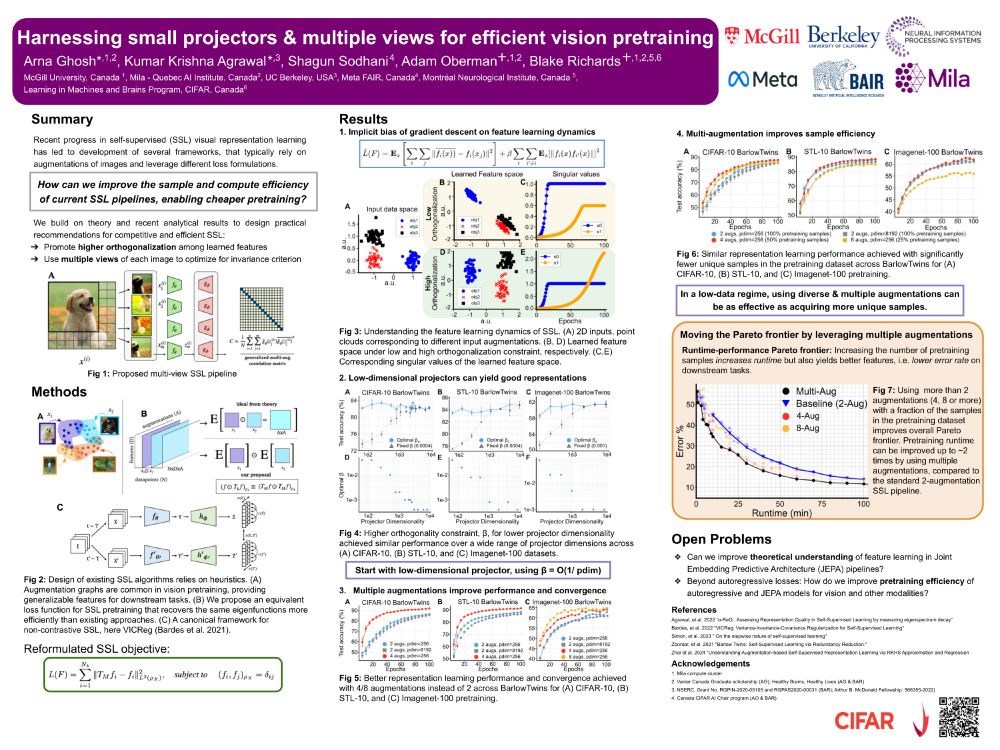

Training time: Weeks

Dataset size: Millions of images

Compute costs: 💸💸💸

Our #NeurIPS2024 poster makes SSL pipelines 2x faster and achieves similar accuracy at 50% pretraining cost! 💪🏼✨

🧵 1/8

arxiv.org/abs/2412.03215