🤖 AI tinkerer 🏗️ Building tech communities

🇪🇺 UK 🇬🇧🇩🇪🇻🇪 | Check bio: axelgarciak.com/bio

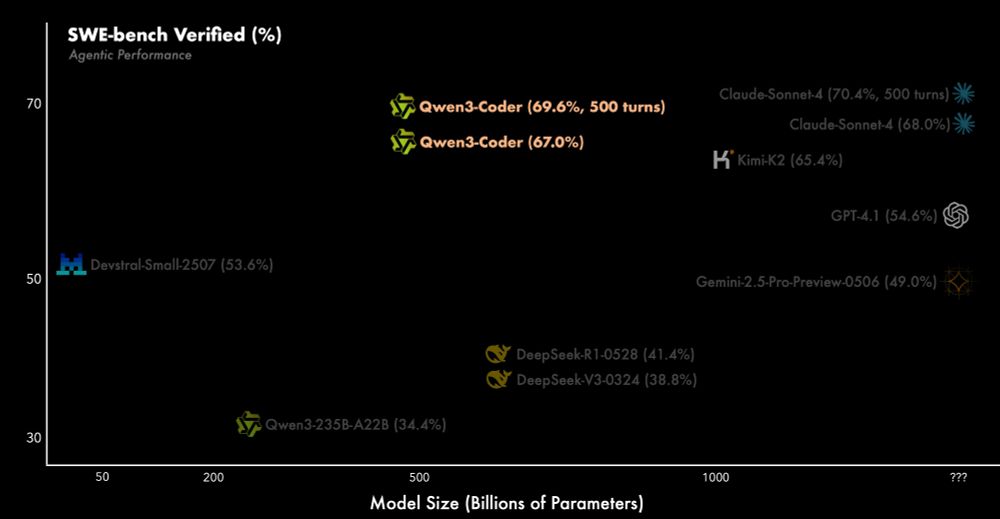

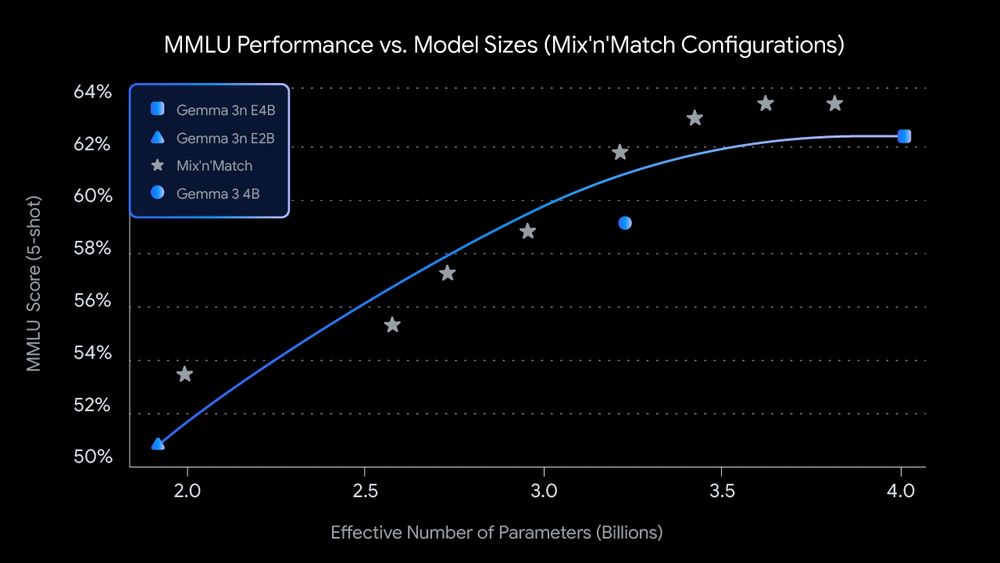

Only 3B active parameters and almost as good (in benchmarks) as Qwen3-235B-A22B and Qwen3-32B.
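
A rough way to see why "3B active" matters: a common rule of thumb puts a transformer forward pass at about 2 FLOPs per parameter used, per token. The sketch below applies that approximation. It assumes that only active parameters count for an MoE model and ignores attention-over-context and routing overhead. The parameter counts come from the model names.

```python
# Rough per-token forward-pass cost using the ~2 FLOPs per active parameter
# rule of thumb (an approximation: ignores attention over the context and
# MoE routing overhead).

def flops_per_token(active_params: float) -> float:
    """Approximate forward-pass FLOPs per generated token."""
    return 2.0 * active_params

models = {
    "3B-active MoE": 3e9,
    "Qwen3-235B-A22B (22B active)": 22e9,
    "Qwen3-32B (dense)": 32e9,
}

baseline = flops_per_token(models["3B-active MoE"])
for name, active in models.items():
    cost = flops_per_token(active)
    print(f"{name}: {cost:.1e} FLOPs/token, {cost / baseline:.1f}x the 3B-active model")
```

Under this approximation, per-token compute tracks active rather than total parameters. That is why a small-active MoE can be much cheaper to run even when its total size is larger.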

I keep a close eye on small models, and this one is a big win.

I've seen some tests, and it's clever enough for its size, but its main purpose is to be fine-tuned for specialized tasks.

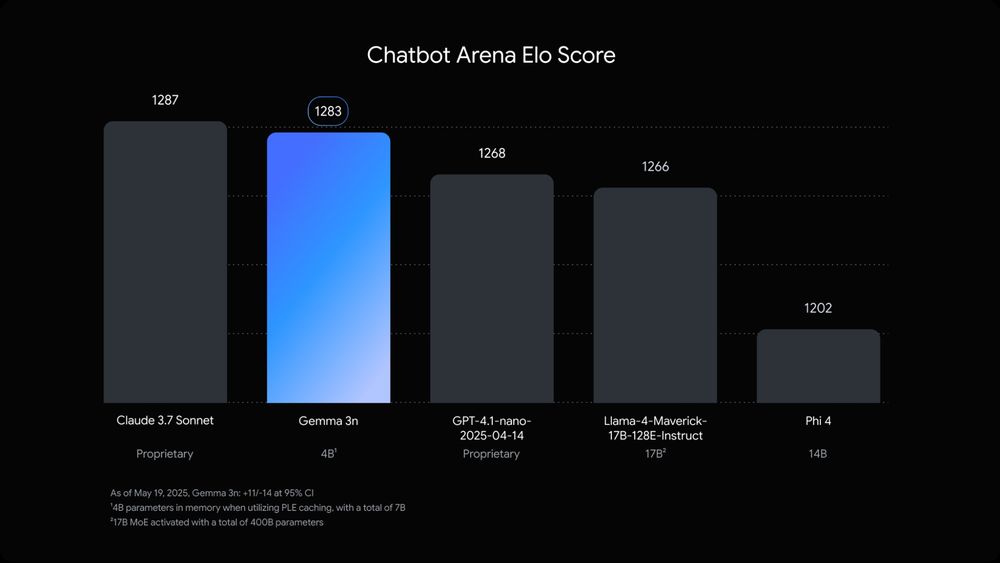

I like Gemma 3n. They are decent lightweight models to run offline on smartphones.

They have 5B and 8B raw parameters but a memory footprint comparable to 2B and 4B models!

That way they don't have to compare themselves to Qwen3 or DeepSeek R2 and its distilled versions.

The more they wait, the more embarrassing it will be when they don't compare their model to those two. 😅

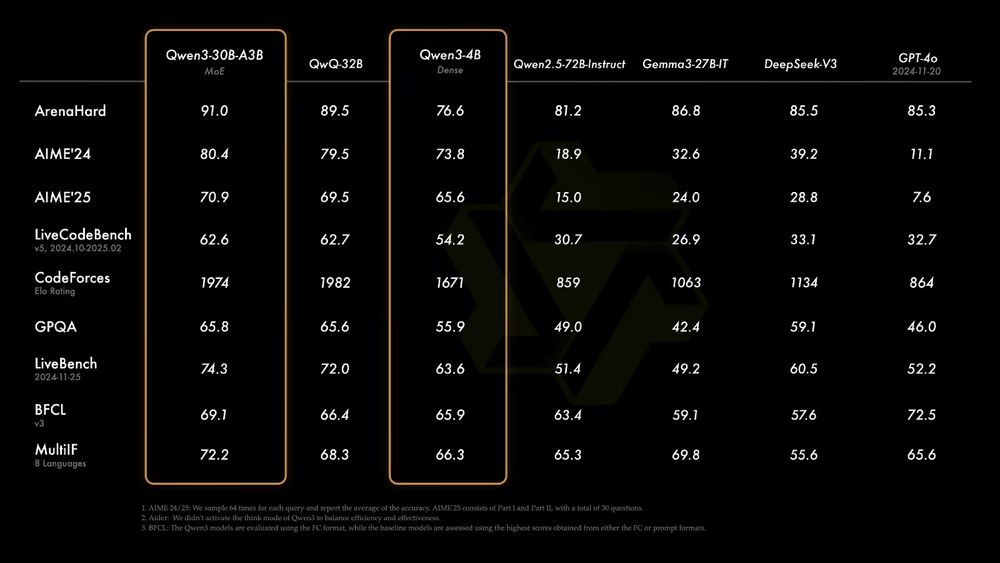

Qwen3 4B and 8B are good for many use cases. Even Qwen3 0.6B seems coherent.

Qwen3-30B-A3B can run on 4GB of VRAM if you have enough system RAM.

I ran it on 4GB of VRAM and got 12 tok/s!
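
Why this can work: only about 3B of the roughly 30B parameters are active per token. Most of the weights can therefore sit in system RAM while the GPU holds what fits. The sketch below estimates that split. It assumes about 4.5 bits per weight (roughly a 4-bit quantization plus overhead) and 3.5GB of usable VRAM. Both numbers are assumptions, and the KV cache and runtime buffers are ignored.

```python
# Back-of-the-envelope weight memory for an MoE model split between GPU and
# system RAM. BITS and VRAM_BUDGET_GB are assumptions, not measured values.

def weight_gb(params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights in GB (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9

TOTAL_PARAMS = 30e9    # ~30B total parameters (from the model name)
ACTIVE_PARAMS = 3e9    # ~3B active per token (the "A3B" part of the name)
BITS = 4.5             # assumed average bits per weight for a 4-bit quant
VRAM_BUDGET_GB = 3.5   # assumed usable share of a 4GB card

total = weight_gb(TOTAL_PARAMS, BITS)
active = weight_gb(ACTIVE_PARAMS, BITS)
in_vram = min(total, VRAM_BUDGET_GB)  # whatever fits on the GPU
in_ram = total - in_vram              # the rest stays in system RAM

print(f"All weights: {total:.1f} GB")
print(f"Weights touched per token (active): {active:.1f} GB")
print(f"On GPU: {in_vram:.1f} GB, in system RAM: {in_ram:.1f} GB")
```

The full model needs far more than 4GB. The weights actually used for each token are a much smaller slice, though, which is what keeps generation speed usable with partial offloading.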

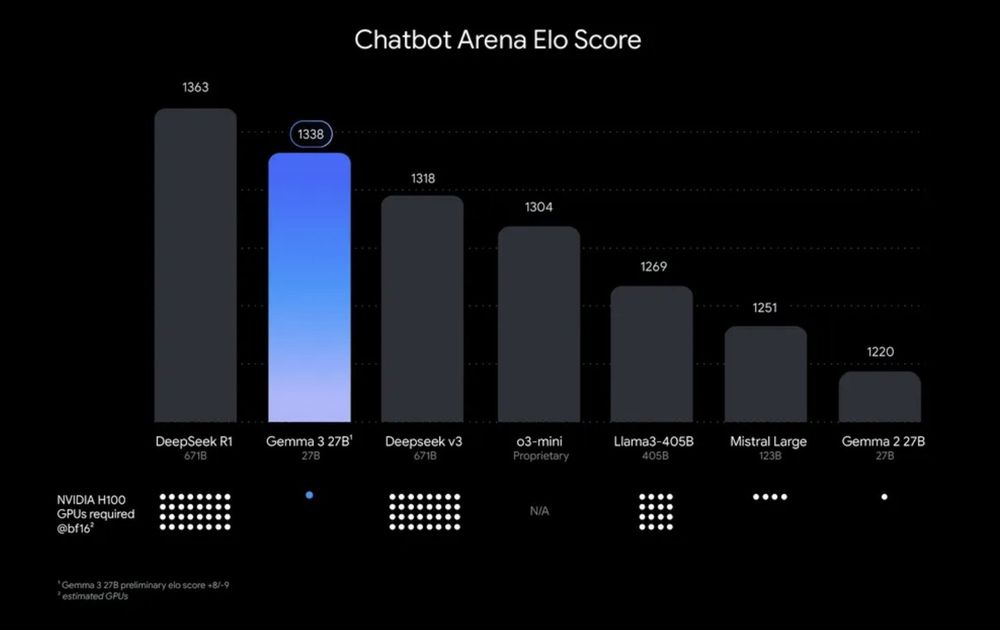

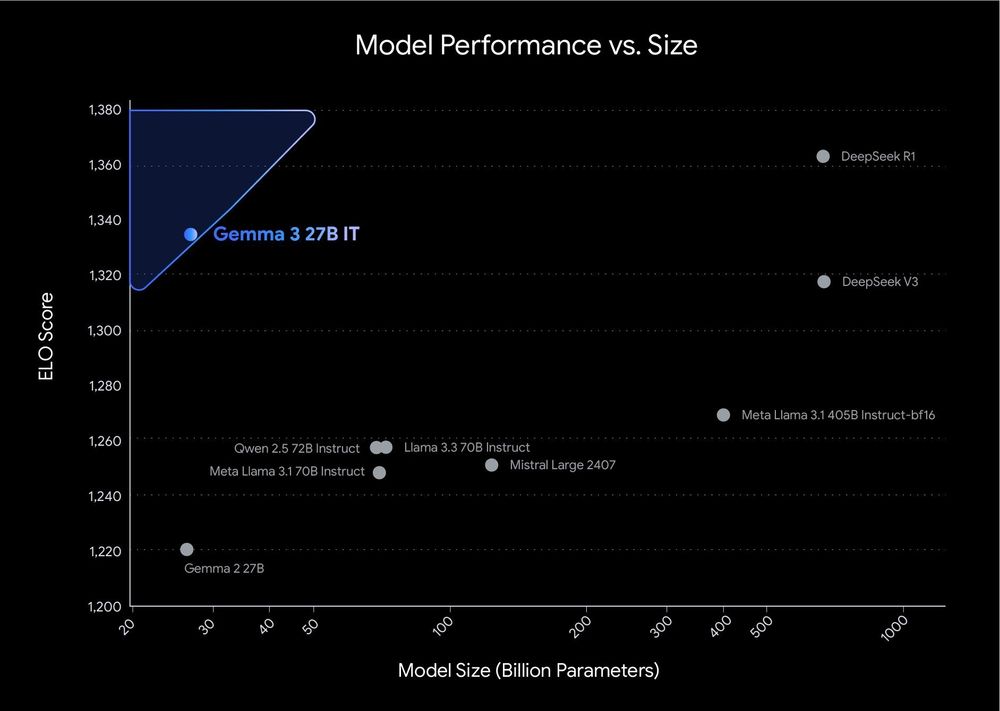

Google DeepMind released Gemma 3 27B, with performance between DeepSeek V3 and R1.

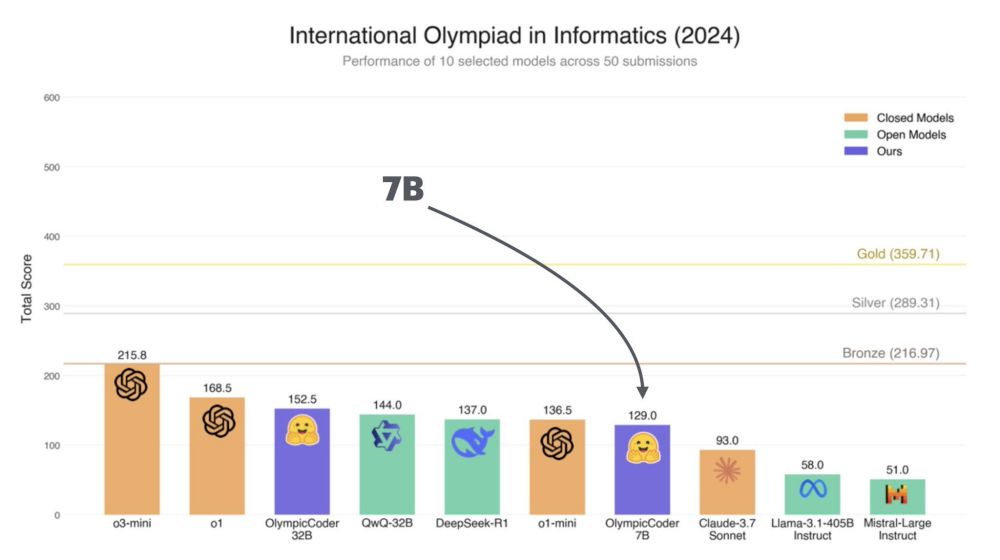

Hugging Face released OlympicCoder 7B, which outperforms Claude 3.7 Sonnet on olympiad-level programming problems.

Anyone who's actually used AI for longer than a few minutes knows the truth is far less sensational.

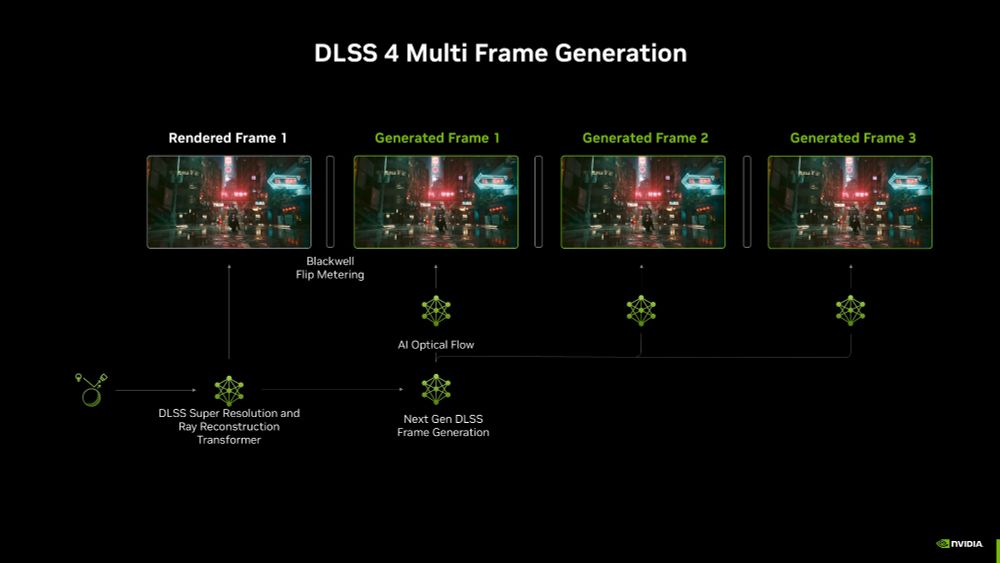

DLSS stands for Deep Learning Super Sampling.

Games use DLSS to generate extra frames with AI and to improve image quality through upscaling.

That lets lower-spec GPUs run games at higher resolutions and frame rates!
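
The gains are easy to put into numbers. This sketch assumes the game renders internally at 1080p and upscales to 4K, and that frame generation shows three generated frames for every rendered one. Both settings are illustrative assumptions, and real gains depend on the game and the GPU.

```python
# Illustrative DLSS arithmetic: how much shading work upscaling saves and how
# frame generation multiplies displayed frames. Settings are assumptions.

def pixel_fraction(render: tuple[int, int], output: tuple[int, int]) -> float:
    """Fraction of output pixels the GPU actually renders before upscaling."""
    rw, rh = render
    ow, oh = output
    return (rw * rh) / (ow * oh)

def displayed_fps(rendered_fps: float, generated_per_rendered: int) -> float:
    """Frames shown per second when each rendered frame is followed by
    `generated_per_rendered` AI-generated frames."""
    return rendered_fps * (1 + generated_per_rendered)

frac = pixel_fraction((1920, 1080), (3840, 2160))  # render 1080p, output 4K
fps = displayed_fps(30, 3)                         # 30 rendered FPS, 3 generated each

print(f"Rendered pixels: {frac:.0%} of the 4K output")
print(f"Displayed frame rate: {fps:.0f} FPS")
```

Generated frames are predicted rather than rendered, so they raise the displayed frame rate without making the game respond to input any faster.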

Tech CEO Replaces Dev Team with AI, Only to Be Ousted by Ex-Employee's AI-Powered SaaS One Month Later.

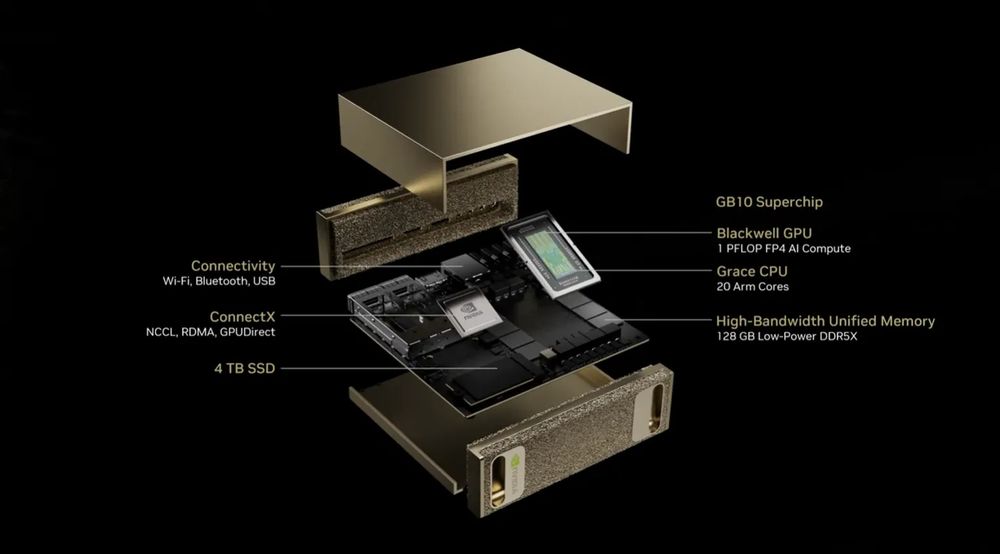

- Powered by GB10 Grace Blackwell Superchip.

- 128GB of unified memory.

- Can be linked to run bigger models.

It's kind of a Linux version of the Mac Mini, with 2x the memory at 1.5x the price.
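
A rough check of what "bigger models" means: weights take about params × bits / 8 bytes. The sketch assumes 4-bit weights and reserves 20% of memory for the KV cache, activations, and the OS. For two linked units, it also assumes the model can be split so that the combined memory is usable. All of these are assumptions, so treat the results as ballpark figures only.

```python
# Ballpark: largest model (in billions of parameters) whose weights fit in a
# given amount of memory. bits_per_weight and reserve_fraction are assumptions.

def max_params_billion(memory_gb: float,
                       bits_per_weight: float = 4.0,
                       reserve_fraction: float = 0.2) -> float:
    """Parameters (billions) that fit after reserving memory for everything else."""
    usable_bytes = memory_gb * 1e9 * (1 - reserve_fraction)
    return usable_bytes * 8 / bits_per_weight / 1e9

for units in (1, 2):
    mem = 128 * units  # 128GB of unified memory per unit
    print(f"{units} unit(s), {mem} GB: ~{max_params_billion(mem):.0f}B params at 4-bit")
```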

Senior Overengineer.

Why use one line of code when you can use 100?

- Turns simple tasks into complex microservices.

- Believes every app needs AI, blockchain, and a custom-built framework.

- Thinks “scalability” is more important than functionality.

Agile Stand-Up Philosopher.

Turns 15-minute meetings into existential debates.

I seem to find more interesting content here, and it's no coincidence: the feeds are easy to customize.

No build tools, Node.js, npm... just write code and see it work in the browser.

You could inspect the source and understand everything.

Vue.js at least still makes it possible to write an app without a build system.

45² = 2025

This will happen again in 2116.

It's also the sum of the cubes of all single-digit numbers:

0³ + 1³ + 2³ + 3³ + 4³ + 5³ + 6³ + 7³ + 8³ + 9³ = 2025.
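
These facts are quick to verify. The sketch below checks them and also shows the underlying identity: the sum of the first n cubes equals the square of the sum of the first n integers (Nicomachus's theorem). Since 0 + 1 + ... + 9 = 45, the cubes add up to 45².

```python
import math

year = 2025
root = math.isqrt(year)                       # integer square root
is_square = root * root == year               # True: 45 * 45 == 2025
next_square_year = (root + 1) ** 2            # the next year that is a perfect square

digit_sum = sum(range(10))                    # 0 + 1 + ... + 9
cube_sum = sum(d ** 3 for d in range(10))     # 0³ + 1³ + ... + 9³

print(f"{year} is a perfect square: {is_square} ({root}²)")
print(f"Next perfect-square year: {next_square_year}")
print(f"Sum of digit cubes: {cube_sum} = {digit_sum}²")
```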

It works for colon cancer, could work for other cancers, and may reduce side effects and recurrence.

The technology has been transferred to BioRevert Inc. for practical development.

Key takeaways:

- Fine-tuned with semiconductor-specific datasets.

- Collaborative and scalable for diverse use cases.

- Attempts to address the industry challenge of knowledge gaps when experts retire.

- Processes raw bytes, no tokenization

- Dynamic patching reduces compute costs by 50%

- Excels in multilingual, noisy, and low-resource tasks

- Extends to image/audio applications

Hopefully we see BLT models soon!
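
The idea behind dynamic patching is to group bytes into variable-length patches and start a new patch where the next byte is hard to predict. Predictable stretches then become fewer, longer patches that need less compute. The sketch below illustrates that with an entropy threshold. As an assumption, a bigram count model built from the input itself stands in for the small byte-level language model BLT actually uses. `entropy_patches`, `bigram_entropies`, and the threshold values are hypothetical names and settings for illustration.

```python
import math
from collections import Counter, defaultdict

def bigram_entropies(data: bytes) -> list[float]:
    """Entropy (bits) of each byte's distribution given the previous byte,
    estimated from bigram counts over `data` itself. This is a toy stand-in
    for a small byte-level language model."""
    follow: dict[int, Counter] = defaultdict(Counter)
    for prev, nxt in zip(data, data[1:]):
        follow[prev][nxt] += 1
    entropies = [8.0]  # first byte has no context: assume maximal uncertainty
    for prev in data[:-1]:
        counts = follow[prev]
        total = sum(counts.values())
        entropies.append(-sum((c / total) * math.log2(c / total)
                              for c in counts.values()))
    return entropies

def entropy_patches(data: bytes, threshold: float) -> list[bytes]:
    """Split raw bytes into patches, starting a new patch whenever the
    predicted entropy of the next byte exceeds `threshold`."""
    if not data:
        return []
    ent = bigram_entropies(data)
    patches, start = [], 0
    for i in range(1, len(data)):
        if ent[i] > threshold:  # hard-to-predict byte: begin a new patch here
            patches.append(data[start:i])
            start = i
    patches.append(data[start:])
    return patches

text = b"the cat sat on the mat"
print(entropy_patches(text, 1.0))
```

Raising the threshold yields fewer, longer patches, and lowering it yields more, shorter ones. The patches always concatenate back to the original bytes, so no tokenizer vocabulary is involved.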

It's interesting because the models ranked there usually fit in 4GB of VRAM or less and also run relatively well without a GPU.

EXAONE from LG is in the lead. I tested the 3B model before, and it did well for its size.

Here's the link:

It is a blazingly fast terminal emulator written by the legendary Mitchell Hashimoto in the Zig programming language.

Check it out: ghostty.org

According to benchmarks, it is as good as GPT-4o and Claude 3.5 Sonnet, but open source.

Here's a summary:

Scrum Claus.

🎷 Making a list, checking it twice, gonna find out who's naughty and nice.

- Runs daily stand-ups where everyone pretends they’re on track.

- Believes every sprint should end with a Christmas miracle.