Lab account for science only.

https://www.caian.uni-bonn.de/en

www.cogneurosociety.org/schedule-of-...

#Neuroscience #AcademicChatter

We are excited to announce that over the next three years, Dominik Bach will install and establish an infrastructure for #OPMEG research at the University of Bonn's medical campus.

© Photo: Robert Seymour

👉Visit our website for more information: www.caian.uni-bonn.de/en

Follow the link below to meet the first confirmed speakers. More to come!

👉 bernstein-network.de/bernstein-co...

#BernsteinNetwork #CompNeuro

[1/4] This Wednesday, October 29 at 1 p.m., we are launching a new, hybrid series of guest talks organized by the Center of Artificial Intelligence and Neuroscience (CAIAN) in Bonn.

A consortium of 21 learning experts surveyed the literature and expert community for robust empirical phenomena, which we graded by reproducibility and generality.

#mathematicalpsychology #calibration #simulation

www.sciencedirect.com/science/arti...

Join us at UCL to conduct an MEG study of reward & memory, exploring how brain signals link to variability in apathy and anhedonia.

🔗 apply from next week: lmdlab.github.io

🚨closes September 12th!

We propose an unsupervised skeleton-based temporal action segmentation framework. If you are interested in exploring actions in skeleton sequences without human annotations, check it out:

Paper: www.arxiv.org/abs/2508.04513

Project page: uzaygokay.github.io/SMQ/

www.caian.uni-bonn.de/en/news

🔎 Find out more about requirements and deadlines here: www.caian.uni-bonn.de/en/team/jobs

Apply by June 15.

More information: www.caian.uni-bonn.de/en/team/oppo...

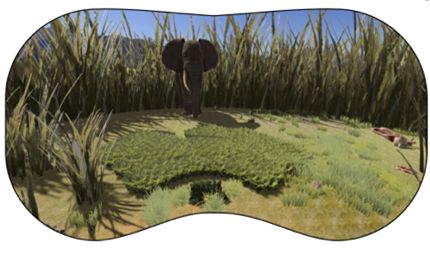

#vrthreat #PhDposition #jobalert #researchjob #neuroscience #psychology
